The problem

We have a lot of products at ASOS. Like, a lot. Each of these products has various properties assigned to it, such as sizing, images and a description.

The property I want to talk about today is price. Unlike the product itself, which is fairly static (it is produced, and it is either in stock or not), the price changes over time, for example when the product goes on sale.

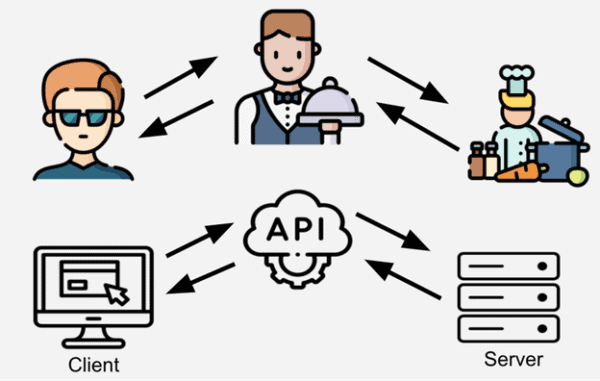

However, it wouldn’t be efficient for our system to retrieve the current price from the database every time a customer performs an action. There are a lot of products and a lot of people who love ASOS, so that approach would simply not scale well.

In our system, those prices are stored in Cosmos DB, Microsoft’s globally distributed, multi-model NoSQL database service. Even though Cosmos DB is really fast, we want to give our customers the best possible experience, which means we need to be even faster. For that reason, although all of our prices are stored in Cosmos DB, we also keep them in a distributed in-memory cache.

Redis Cache to the rescue.

What I want to show you in this post is how we can load our persistent database into a Redis Cache and keep the two synchronised.

There are several ways to do this, but the solution I chose to go with is Azure Functions.

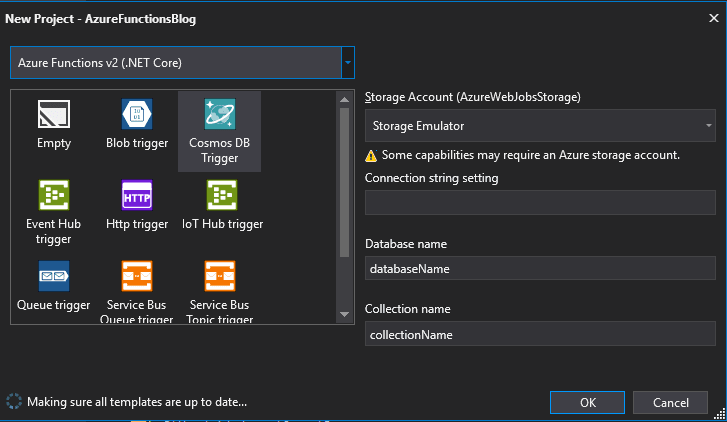

Cosmos DB trigger

Azure Functions supports multiple types of trigger-based operations. One of them is the Cosmos DB trigger, which is (well…) triggered whenever a document is added or changed.

This means that whenever a price is added or changed in some way, we get the new or updated version of the price document in the method of our Cosmos DB-triggered Azure Function.

The out-of-the-box code is pretty easy to understand.

The Document object is an object coming from Cosmos DB, which represents the new or updated document in its entirety. What’s really cool is that if a document is updated multiple times between two trigger calls, you’ll only get the latest version of this document in the trigger, which means that you always deal with the latest, up-to-date version of a document.
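To make the "latest version only" behaviour concrete, here is a minimal Python sketch that uses plain dictionaries instead of Cosmos DB. The function name `collapse_to_latest` and the `id` and `amount` fields are assumptions made for illustration. It only models what a trigger call hands you; it is not how the change feed is implemented.

```python
from typing import Dict, Iterable, List


def collapse_to_latest(writes: Iterable[dict]) -> List[dict]:
    """Model of what one trigger call receives: for every document id,
    only the most recent version written since the previous call."""
    latest: Dict[str, dict] = {}
    for doc in writes:  # writes are assumed to arrive in the order they happened
        # A later write replaces the earlier one; removing and re-adding the id
        # moves it to the end, so the batch stays ordered by last modification.
        latest.pop(doc["id"], None)
        latest[doc["id"]] = doc
    return list(latest.values())


# Three writes happen between two trigger calls; "sku-1" is updated twice.
writes = [
    {"id": "sku-1", "amount": 30.0},
    {"id": "sku-2", "amount": 12.5},
    {"id": "sku-1", "amount": 21.0},  # the sale price replaces the first write
]
batch = collapse_to_latest(writes)
```

In this model the batch contains two documents rather than three, and the intermediate price of `sku-1` never reaches the function.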

The LeaseCollectionName property in the CosmosDBTrigger attribute is the name of the Cosmos DB collection that the function uses to keep track of which changes it has already processed and where it should resume reading from after a failure or a restart. You can create this collection yourself, or set the CreateLeaseCollectionIfNotExists value to true and let the function create it for you. It doesn’t need to be partitioned and it can be provisioned with the minimum 400 RU/s. You don’t need to manage this collection manually; the Azure Function does the rest.

The same lease collection can serve multiple Azure Functions. All you need to do is add a LeaseCollectionPrefix to each CosmosDBTrigger. That way, each function can identify which lease documents it owns.

CosmosConnectionString, referenced in the CosmosDBTrigger attribute, is the name of the setting in our settings.json file that holds the Cosmos DB connection string.

Now that we have the trigger set up, let’s move on to the next step: saving the document in the Redis Cache.

Saving in Azure Redis Cache

Setting values in Azure Redis Cache is really simple by design, but what I want to emphasise is how simple the whole process becomes when using an Azure Function.

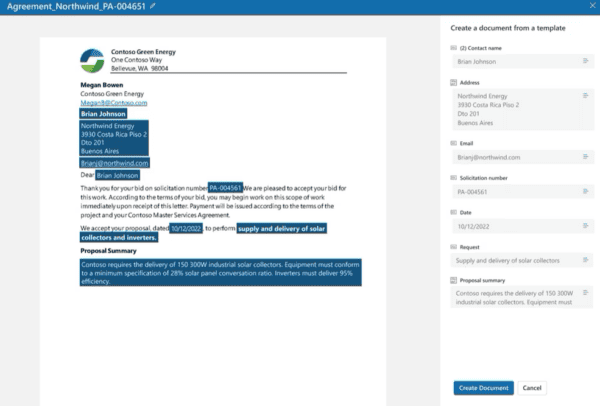

You get all the documents that were added or changed in a time window, and you don’t need to check anything. You can call the ToString() method on each document, which returns the string representation of the JSON document, and then call SetString to upsert the value. There’s no need to check whether the key already exists in the Redis Cache. Storing the document as-is only works if your Cosmos DB data transfer object (DTO) is the same one that your clients use to consume the Redis Cache data. That is generally bad practice: there should be a mapping step that converts the Cosmos DB DTO to a Redis DTO. For simplicity, though, I will be using the same object.
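The upsert loop can be sketched in a few lines. This is a minimal Python model rather than the Azure Function itself: `InMemoryCache` is a hypothetical stand-in for the Redis client, and the `price:<id>` key format and the document fields are assumptions made for illustration. It also shows one possible mapping step, which drops the system properties Cosmos DB adds to stored documents (`_rid`, `_self`, `_etag`, `_attachments`, `_ts`) before the value is cached.

```python
import json
from typing import Dict, Iterable, Optional

# System properties Cosmos DB adds to every stored document.
COSMOS_SYSTEM_PROPERTIES = {"_rid", "_self", "_etag", "_attachments", "_ts"}


class InMemoryCache:
    """Hypothetical stand-in for a Redis client: string keys, string values."""

    def __init__(self) -> None:
        self._data: Dict[str, str] = {}

    def set_string(self, key: str, value: str) -> None:
        # Like a Redis SET: creates the key or overwrites it (an upsert).
        self._data[key] = value

    def get_string(self, key: str) -> Optional[str]:
        return self._data.get(key)


def to_cache_dto(document: dict) -> dict:
    """Map the stored document to the shape consumers read from the cache."""
    return {k: v for k, v in document.items() if k not in COSMOS_SYSTEM_PROPERTIES}


def sync_to_cache(documents: Iterable[dict], cache: InMemoryCache) -> None:
    """Write every added or changed document; no existence check is needed."""
    for document in documents:
        key = f"price:{document['id']}"  # assumed key format
        cache.set_string(key, json.dumps(to_cache_dto(document)))


cache = InMemoryCache()
sync_to_cache([{"id": "sku-1", "amount": 30.0, "_etag": "a1"}], cache)  # insert
sync_to_cache([{"id": "sku-1", "amount": 21.0, "_etag": "b2"}], cache)  # update
```

Both calls go through the same code path: the second one simply overwrites the value written by the first, which is why the function never has to ask the cache whether a key is already there.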

Now if we upload the Azure function and add the following document in Cosmos DB…

…then in a matter of seconds we can check the Redis Cache and the price document is already serialised and stored.

Now it’s up to our consumers to call the Redis Cache, retrieve the object and deserialise it in order to use it.
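On the consumer side, the read path is a cache lookup followed by deserialisation. A minimal Python sketch, assuming a hypothetical `Price` type with `id`, `amount` and `currency` fields, an assumed `price:<id>` key format, and a plain dictionary standing in for the Redis client:

```python
import json
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class Price:
    """Hypothetical shape of a cached price; the field names are assumptions."""
    id: str
    amount: float
    currency: str


def get_price(cache: Dict[str, str], product_id: str) -> Optional[Price]:
    """Look up the serialised price and turn it back into an object."""
    raw = cache.get(f"price:{product_id}")  # assumed key format
    if raw is None:
        return None  # not cached (yet); the caller decides how to handle a miss
    return Price(**json.loads(raw))


# A dictionary stands in for the Redis client here.
cache = {"price:sku-1": json.dumps({"id": "sku-1", "amount": 21.0, "currency": "GBP"})}
price = get_price(cache, "sku-1")
```

Returning `None` on a miss keeps the decision about what to do next (fall back to the database, retry, show nothing) with the caller, which this sketch deliberately leaves open.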

Bonus round — filling up the Redis Cache from scratch

What if your cache gets corrupted? How can you load everything back into the cache?

The answer is actually quite simple.

You can delete the lease documents for this Azure Function from the leases collection and add the StartFromBeginning = true property to the function’s CosmosDBTrigger attribute. That way, the lease documents will be recreated with a null continuation token, which forces the function to read the changes from the beginning.
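Why deleting the lease documents leads to a full reload can be seen in a small model. The Python sketch below is a deliberate simplification built on assumptions: the change feed is a list, the lease is a single stored position standing in for the continuation token, and `process_changes`, `lease_id` and `start_from_beginning` are illustrative names that only mirror the behaviour described above.

```python
import json
from typing import Dict, List


def process_changes(
    feed: List[dict],
    leases: Dict[str, int],
    cache: Dict[str, str],
    lease_id: str = "price-sync",
    start_from_beginning: bool = False,
) -> int:
    """Read the feed from the stored checkpoint, update the cache, and
    save the new checkpoint. Returns how many documents were processed."""
    if lease_id in leases:
        position = leases[lease_id]  # a lease exists: resume where we stopped
    elif start_from_beginning:
        position = 0  # no lease: replay the whole feed
    else:
        position = len(feed)  # no lease: only pick up changes from now on
    pending = feed[position:]
    for document in pending:
        cache[f"price:{document['id']}"] = json.dumps(document)
    leases[lease_id] = len(feed)  # checkpoint after the batch
    return len(pending)


feed = [{"id": "sku-1", "amount": 30.0}, {"id": "sku-2", "amount": 12.5}]
leases: Dict[str, int] = {}
cache: Dict[str, str] = {}

first_run = process_changes(feed, leases, cache, start_from_beginning=True)
cache.clear()  # the cache is lost or corrupted
no_replay = process_changes(feed, leases, cache, start_from_beginning=True)
del leases["price-sync"]  # delete the lease document
replayed = process_changes(feed, leases, cache, start_from_beginning=True)
```

In this model the second call processes nothing, because an existing lease takes priority over the start-from-beginning flag. Only after the lease is removed does the third call read the feed from position zero and refill the cache.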

About the Author:

Nick is a Software Engineer at ASOS.com and a Microsoft MVP for the Data Platform. When he’s not coding, you’ll find him blogging at https://chapsas.com or managing his open-source projects on GitHub.
