Model Context Protocol (MCP) Overview

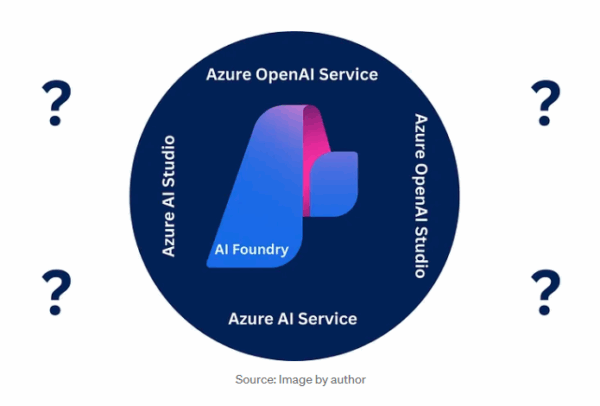

As AI ecosystems become increasingly complex, seamless interoperability and effective context-sharing among models, agents, and tools are essential. The Model Context Protocol (MCP) serves as a kind of “USB-C port” for AI, providing standardized, secure access to data, capabilities, and workflows across different systems. I won’t delve into the foundational details of MCP here, as I’ve already covered them in a previous in-depth blog post.

🔗 For foundational insights, see the prior blog: Unlocking the Future of Agentic AI with Model Context Protocol (MCP)

🎯 Objective

In this blog, I’ll demonstrate, through a hands-on example, how Azure AI Foundry Agents can be exposed as an MCP server. You’ll learn how to wrap Azure AI Foundry’s agent runtime in an MCP interface so that external MCP-compliant clients can discover the agents, authenticate to the server, and invoke agent capabilities on demand. This turns your Azure agents into dynamic, interoperable service providers, effectively an MCP-powered marketplace of Foundry agents for next-generation agentic workflows.

🛠️ Foundry Agents as MCP Server

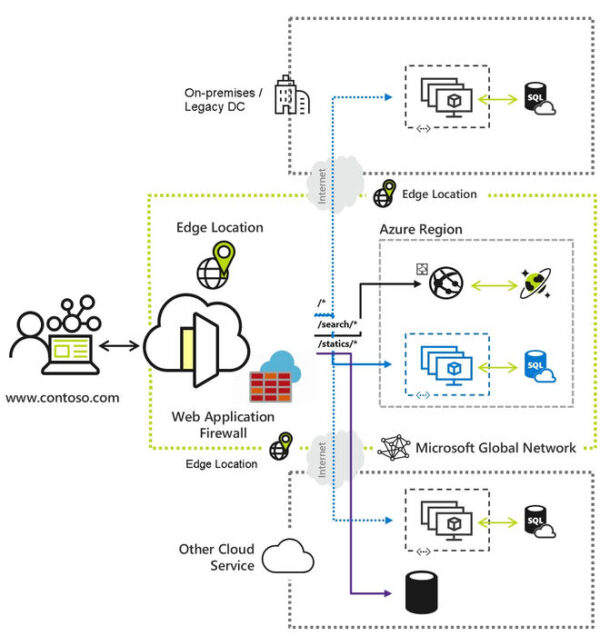

Azure AI Foundry Agents can be exposed through a fully featured MCP server, enabling seamless interoperability with other agents, other cloud platforms, and MCP-compliant clients such as Claude Desktop or GitHub Copilot. By wrapping the Azure AI Foundry Agent runtime in a standard MCP endpoint, each agent, together with the capabilities it brings (model selection, Bing-powered grounding, Azure AI Search, and custom tools), is exposed as a tool that clients discover and call via JSON-RPC. This makes Azure AI Foundry Agents easy to discover and integrate into multi-agent workflows, so your agents become dynamic, on-demand service providers within the broader MCP ecosystem.
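To make the JSON-RPC part concrete, here is a minimal Python sketch of the two request messages an MCP client typically sends after completing the protocol's `initialize` handshake: `tools/list` to discover the agent-backed tools and `tools/call` to invoke one. The tool name `stock_market_analyzer` and its `question` argument are hypothetical placeholders, not names taken from the author's repository.

```python
import itertools
import json
from typing import Any, Dict, Optional

# Monotonic request ids; JSON-RPC matches each response to its request by id.
_ids = itertools.count(1)


def jsonrpc_request(method: str, params: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """Build a JSON-RPC 2.0 request object with a fresh integer id."""
    request: Dict[str, Any] = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        request["params"] = params
    return request


def list_tools() -> Dict[str, Any]:
    # Asks the MCP server which tools (here: agent-backed tools) it exposes.
    return jsonrpc_request("tools/list")


def call_tool(name: str, arguments: Dict[str, Any]) -> Dict[str, Any]:
    # Invokes one tool by name; the arguments must match that tool's input schema.
    return jsonrpc_request("tools/call", {"name": name, "arguments": arguments})


# Hypothetical tool name and argument for an agent-backed tool.
request = call_tool("stock_market_analyzer", {"question": "How has MSFT performed this quarter?"})
print(json.dumps(request))
```

The server answers each request with a JSON-RPC response carrying the same `id`, which is what lets a client keep several agent calls in flight over one connection.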

Architecture –

🧩 Implementation

I have adopted the Azure-Samples/remote-mcp-apim-functions-python repository as the foundational baseline for integrating Azure AI Foundry Agents via MCP.

Why This Baseline?

- Secure & Scalable MCP Server on Azure – It provides a secure, scalable MCP server implementation on Azure that serves as a template for integrating Azure AI Foundry Agents later.

- Seamless Integration of Azure Infrastructure – It combines API Management, serverless Azure Functions, and managed security to implement the MCP authorization and messaging specifications.

- Extensibility for Foundry Agents Integration – You start with a working MCP endpoint that already handles tool discovery and execution, then extend it by adding Azure AI Foundry Agents as additional tools.

⚙️ Steps

Deploy the Baseline MCP Server

As mentioned above, you can use the Azure-Samples/remote-mcp-apim-functions-python repository as your foundation.

Create Azure AI Foundry Agents

Before integrating with Azure Functions, you’ll first need to build your Azure AI Foundry Agents—either through the Foundry Portal or by using the Foundry SDK.

In this example, I’ve created two Foundry Agents:

- StockMarketAnalyzer – This agent leverages the Bing Search API to gather and analyze stock market data from the web. It synthesizes information from multiple sources to generate intelligent investment recommendations. You can also create this agent programmatically. For reference, see the GitHub repository linked below.

GitHub – Hapstanny/FoundryAgents/StockMarketAnalyzer-Agent.py

- AI-State-Readiness – This agent leverages Azure AI Search to access and analyze organizational data related to AI readiness. It supports the evaluation of AI adoption trends, employee experience, and digital transformation initiatives throughout the enterprise.

These agents serve as the primary services that will later be made accessible via the MCP server. You can find their implementation details in my GitHub repository – Hapstanny/FoundryAgent-as-MCPServer
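As a rough sketch of the SDK route, the code below creates a Bing-grounded agent similar to StockMarketAnalyzer. It assumes the 1.x `azure-ai-projects`, `azure-ai-agents`, and `azure-identity` Python packages and an existing Grounding with Bing Search connection in your Foundry project. The environment variable names, the instructions text, and the helper names are hypothetical, so compare against the author's StockMarketAnalyzer-Agent.py and the SDK version you install. The Azure imports are deferred into the function so the settings helper can be loaded and tested without the SDKs.

```python
import os
from dataclasses import dataclass
from typing import Mapping

# Hypothetical instructions; the author's actual prompt lives in StockMarketAnalyzer-Agent.py.
STOCK_INSTRUCTIONS = (
    "You are a stock market analyst. Use Bing search results from multiple sources "
    "to gather current market data, cite your sources, and summarise an investment recommendation."
)

# Hypothetical environment variable names for the project connection details.
_REQUIRED = ("PROJECT_ENDPOINT", "MODEL_DEPLOYMENT_NAME", "BING_CONNECTION_ID")


@dataclass(frozen=True)
class AgentSettings:
    project_endpoint: str
    model_deployment: str
    bing_connection_id: str


def load_settings(env: Mapping[str, str] = os.environ) -> AgentSettings:
    """Read the Foundry project settings, failing fast if any are missing."""
    missing = [key for key in _REQUIRED if not env.get(key)]
    if missing:
        raise ValueError(f"Missing settings: {', '.join(missing)}")
    return AgentSettings(
        project_endpoint=env["PROJECT_ENDPOINT"],
        model_deployment=env["MODEL_DEPLOYMENT_NAME"],
        bing_connection_id=env["BING_CONNECTION_ID"],
    )


def create_stock_market_analyzer(settings: AgentSettings) -> str:
    """Create a Bing-grounded Foundry agent and return its id (assumes the 1.x SDKs)."""
    # Deferred imports: only needed when actually talking to Azure.
    from azure.ai.agents.models import BingGroundingTool
    from azure.ai.projects import AIProjectClient
    from azure.identity import DefaultAzureCredential

    bing = BingGroundingTool(connection_id=settings.bing_connection_id)
    with AIProjectClient(endpoint=settings.project_endpoint, credential=DefaultAzureCredential()) as project:
        agent = project.agents.create_agent(
            model=settings.model_deployment,
            name="StockMarketAnalyzer",
            instructions=STOCK_INSTRUCTIONS,
            tools=bing.definitions,
        )
    return agent.id
```

Calling `create_stock_market_analyzer(load_settings())` returns the new agent's id, which the Function App later needs in order to target that agent. The AI-State-Readiness agent would follow the same pattern, with an Azure AI Search tool in place of the Bing tool.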

Integrate Agents into Azure Functions

To make your Foundry Agents available as MCP tools, update the Azure Function App by extending the mcpToolTrigger handlers to call agents through the Foundry Agent Service using the Foundry Agent SDK. Once integrated, each Foundry Agent functions as an MCP-compatible tool, able to respond to client requests through the MCP server.

This interaction is illustrated in the screenshot below.

Sample code –

The full implementation is available in my GitHub repository –

GitHub – Hapstanny/src/function_app.py
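The snippet below sketches the core of such a handler under stated assumptions; it is not the author's function_app.py. It follows the baseline sample's convention, where an mcpToolTrigger function receives a JSON `context` string whose `arguments` object carries the tool inputs, and it forwards one hypothetical `question` argument to an existing Foundry agent using the 1.x `azure-ai-projects`/`azure-ai-agents` SDKs (create a thread, post a message, process a run, then read the reply). The names `QUESTION_PROPERTY`, `tool_property`, `parse_question`, and `ask_agent` are illustrative.

```python
import json
from typing import Dict

# Hypothetical name of the single input the agent-backed tool accepts.
QUESTION_PROPERTY = "question"


def tool_property(name: str, type_: str, description: str) -> Dict[str, str]:
    """Describe one tool input with the camelCase keys used in the trigger's toolProperties."""
    return {"propertyName": name, "propertyType": type_, "description": description}


# JSON string passed as toolProperties when registering the trigger.
STOCK_TOOL_PROPERTIES = json.dumps(
    [tool_property(QUESTION_PROPERTY, "string", "Question about a stock, sector, or market trend.")]
)


def parse_question(context: str) -> str:
    """Extract the question from the JSON context string handed to an mcpToolTrigger function."""
    arguments = json.loads(context).get("arguments") or {}
    question = arguments.get(QUESTION_PROPERTY, "")
    if not isinstance(question, str) or not question.strip():
        raise ValueError(f"Missing required argument '{QUESTION_PROPERTY}'")
    return question.strip()


def ask_agent(project_endpoint: str, agent_id: str, question: str) -> str:
    """Send one question to an existing Foundry agent and return its latest text reply."""
    # Deferred imports keep the parsing helpers usable without the Azure SDKs installed.
    from azure.ai.agents.models import ListSortOrder, MessageRole
    from azure.ai.projects import AIProjectClient
    from azure.identity import DefaultAzureCredential

    with AIProjectClient(endpoint=project_endpoint, credential=DefaultAzureCredential()) as project:
        agents = project.agents
        thread = agents.threads.create()
        agents.messages.create(thread_id=thread.id, role="user", content=question)
        # Blocks until the run finishes, including any Bing or Azure AI Search tool calls.
        run = agents.runs.create_and_process(thread_id=thread.id, agent_id=agent_id)
        if run.status == "failed":
            raise RuntimeError(f"Agent run failed: {run.last_error}")
        replies = [
            message.text_messages[-1].text.value
            for message in agents.messages.list(thread_id=thread.id, order=ListSortOrder.ASCENDING)
            if message.role == MessageRole.AGENT and message.text_messages
        ]
    return replies[-1] if replies else ""
```

In the Function App, a handler registered with `@app.generic_trigger(arg_name="context", type="mcpToolTrigger", toolName=..., description=..., toolProperties=STOCK_TOOL_PROPERTIES)`, the pattern the baseline repository uses, would call `parse_question(context)` and return the result of `ask_agent(...)`, with the project endpoint and agent id read from app settings.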

Expose as MCP Endpoint

Leverage the existing baseline Bicep infrastructure scripts to deploy your updated Azure Function code. This process will automatically publish and configure the service endpoint as part of the deployment.

Make sure each mcpToolTrigger handler is registered and exposed through the existing MCP server, which is surfaced via Azure Functions and API Management. This makes your Azure AI Foundry Agents discoverable and callable by any MCP-compliant client.

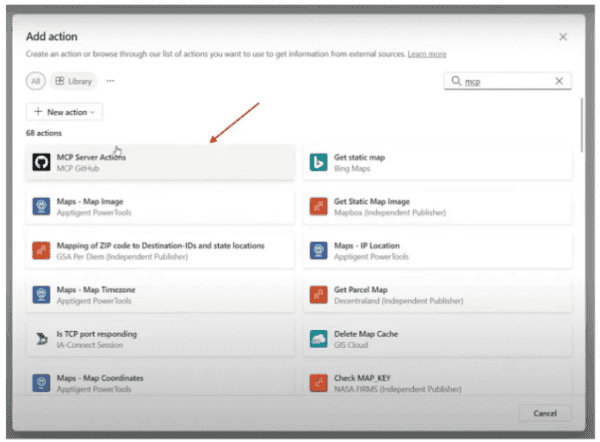
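One quick way to confirm the registration is to send a `tools/list` request through the API Management endpoint and compare the advertised tool names with the ones you expect. Below is a minimal sketch of that comparison, assuming the standard MCP `tools/list` result shape (a `tools` array of objects with `name`, `description`, and `inputSchema`); the tool names and sample result are hypothetical.

```python
from typing import Any, Dict, Iterable, List


def check_registration(tools_list_result: Dict[str, Any], expected: Iterable[str]) -> Dict[str, List[str]]:
    """Compare a tools/list result with the tool names you expect the server to expose."""
    advertised = {tool.get("name"): tool for tool in tools_list_result.get("tools", [])}
    wanted = set(expected)
    return {
        # Expected tools the server did not advertise (e.g. a handler that failed to register).
        "missing": sorted(wanted - set(advertised)),
        # Advertised tools without a description, which clients rely on when choosing a tool.
        "undocumented": sorted(
            name for name in wanted & set(advertised) if not advertised[name].get("description")
        ),
    }


# Hypothetical tools/list result for two agent-backed tools.
sample_result = {
    "tools": [
        {"name": "stock_market_analyzer", "description": "Bing-grounded market analysis", "inputSchema": {"type": "object"}},
        {"name": "ai_state_readiness", "inputSchema": {"type": "object"}},
    ]
}
print(check_registration(sample_result, ["stock_market_analyzer", "ai_state_readiness"]))
```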

Invoke via MCP-Compatible Clients

Once deployed, you can validate your MCP endpoint using compatible client tools such as Claude Desktop or GitHub Copilot Agent. Connect to the SSE endpoint (e.g., /mcp/sse) using the configuration parameters shown below.
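A `/mcp/sse` path indicates MCP's HTTP+SSE transport (protocol revision 2024-11-05): the client keeps a GET request open on the SSE URL, and the server's first `endpoint` event announces the URL to which the client POSTs its JSON-RPC messages. The sketch below parses such a stream and resolves that URL; the host name and message path in the test are made-up examples, not values from the author's deployment.

```python
from typing import Iterable, Iterator, Optional, Tuple
from urllib.parse import urljoin


def iter_sse_events(lines: Iterable[str]) -> Iterator[Tuple[str, str]]:
    """Yield (event, data) pairs from text/event-stream lines; an unterminated final event is dropped."""
    event, data = "message", []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if not line:  # a blank line dispatches the buffered event
            if data:
                yield event, "\n".join(data)
            event, data = "message", []
        elif line.startswith(":"):  # comment, often used as a keep-alive
            continue
        else:
            field, _, value = line.partition(":")
            if value.startswith(" "):
                value = value[1:]
            if field == "event":
                event = value
            elif field == "data":
                data.append(value)


def message_endpoint(sse_url: str, lines: Iterable[str]) -> Optional[str]:
    """Return the absolute URL from the server's first `endpoint` event, if one arrives."""
    for event, data in iter_sse_events(lines):
        if event == "endpoint":
            # The announced URI may be relative to the SSE URL.
            return urljoin(sse_url, data.strip())
    return None
```

In a live client, `lines` would come from the open HTTP response; once the endpoint is resolved, JSON-RPC requests are POSTed there and the responses arrive as `message` events on the same stream.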

🔧 MCP Client Configuration
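For GitHub Copilot in VS Code, the connection is typically declared in a `.vscode/mcp.json` file with a `servers` map. The sketch below generates that file, assuming VS Code's `sse` server type as documented at the time of writing; `<your-apim-name>` and the server key `foundry-agents` are placeholders, and any authentication settings your API Management policy requires are not shown. Claude Desktop uses a different configuration file and format.

```python
import json
from pathlib import Path
from typing import Any, Dict


def vscode_mcp_config(server_name: str, sse_url: str) -> Dict[str, Any]:
    """Build a VS Code MCP configuration pointing at a remote SSE endpoint."""
    if not sse_url.startswith("https://"):
        raise ValueError("Expected an HTTPS URL for the remote MCP endpoint")
    return {"servers": {server_name: {"type": "sse", "url": sse_url}}}


def write_config(workspace: Path, config: Dict[str, Any]) -> Path:
    """Write the configuration to <workspace>/.vscode/mcp.json and return its path."""
    target = workspace / ".vscode" / "mcp.json"
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_text(json.dumps(config, indent=2) + "\n", encoding="utf-8")
    return target


# Placeholder API Management host; replace with your deployment's gateway name.
config = vscode_mcp_config("foundry-agents", "https://<your-apim-name>.azure-api.net/mcp/sse")
print(json.dumps(config, indent=2))
```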

Example – When responding to a user query, the client invokes the Azure AI Foundry Agent that was wired into the Azure Function earlier.
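On the client side, the agent's answer comes back as the JSON-RPC response to `tools/call`, whose `result.content` is a list of content blocks. Here is a minimal sketch for extracting the text, assuming the standard MCP result shape with `type: "text"` blocks and an optional `isError` flag; the sample response is made up for illustration.

```python
from typing import Any, Dict


def tool_result_text(response: Dict[str, Any]) -> str:
    """Return the concatenated text blocks from a tools/call JSON-RPC response."""
    if "error" in response:  # protocol-level failure, e.g. unknown tool or invalid params
        error = response["error"]
        raise RuntimeError(f"JSON-RPC error {error.get('code')}: {error.get('message')}")
    result = response.get("result", {})
    text = "\n".join(
        block.get("text", "") for block in result.get("content", []) if block.get("type") == "text"
    )
    if result.get("isError"):  # the tool ran but reported a failure
        raise RuntimeError(f"Tool error: {text}")
    return text


# Made-up response, shaped like an agent-backed tool's reply.
sample = {
    "jsonrpc": "2.0",
    "id": 2,
    "result": {"content": [{"type": "text", "text": "Summary: ..."}], "isError": False},
}
print(tool_result_text(sample))
```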

References

Below are a few references to help you expose Azure AI Foundry Agents as an MCP server.

Baseline Azure Implementation – Azure-Samples/remote-mcp-apim-functions-python

Azure Function Implementation – Hapstanny/function_app.py

Azure AI Foundry Agent Creation – Hapstanny/StockMarketAnalyzer-Agent.py

🏁 Conclusion: Bringing It All Together

The Model Context Protocol (MCP) presents a powerful standard for connecting intelligent agents, tools, and systems in a unified and secure manner. By embedding Azure AI Foundry Agents into an MCP server architecture, we unlock seamless interoperability with other agents and client tools.

This blog walked through how to:

- Deploy a secure MCP baseline using Azure Functions & API Management

- Create Foundry Agents with real-world capabilities like web-based stock analysis and enterprise AI readiness evaluation

- Integrate these agents into Azure Functions as mcpToolTrigger handlers

- Expose them via a standardized MCP endpoint

- Invoke them using live MCP-compatible clients

This architecture sets the stage for scalable, production-ready agentic solutions — allowing your AI agents to function as secure, discoverable, on-demand services in the broader ecosystem.

About the Author

Hemant Taneja

Sr. Cloud Solution Architect at Microsoft | Data Analytics | AI & ML | Inquisitive Learner

Reference:

Taneja, H. (2025). Transforming Azure AI Foundry Agents into MCP-Compliant Services. Available at: LinkedIn [Accessed: 7th August 2025].