This article will provide an in-depth look into the new features of Copilot Studio and how they may change the way we build agents. Additionally, it will discuss the potential business impact of these new features on enterprise business process transformation. This article will be developed gradually over time, so please continue to follow this space for more updates about Copilot Studio.

Table of Contents

- How Planner, Plan with Generative Orchestration Will Completely Transform Agent Development

- Autonomous Agents to Reimagine Enterprise Business Processes and Human-AI Collaboration

How Planner, Plan with Generative Orchestration Will Completely Transform Agent Development

I must admit that Copilot Studio was not a primary focus for me initially. While I did explore extending Microsoft 365 Copilot using various Copilot Studio actions, I did not pay significant attention to building low-code custom engine agents with Copilot Studio at first.

But if you have been keeping up with recent announcements from Microsoft and observing the broader market trends, it is clear that we are entering a new era of AI transformation. This advancement extends beyond personal/team productivity, moving more towards end-to-end AI enterprise business process transformation.

And it looks like Copilot Studio is at the center of this enterprise business process transformation.

How it started, how it’s going!

If you follow the history of Copilot Studio, you will find that it started as Power Virtual Agents (PVA) and then became Copilot Studio, with topics at the center of the conversation experience (classic orchestration).

With classic orchestration, an agent responds to users by activating the topic with trigger phrases that closely match the user’s query. Upon landing on a topic, the agent can retrieve knowledge or execute actions before responding back to the user.
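To make the idea concrete, here is a minimal sketch of trigger-phrase matching. It is only an illustration: the topic names, the trigger phrases, the `match_topic` helper, and the 0.6 threshold are all hypothetical, and plain string similarity stands in for whatever language understanding model Copilot Studio actually uses.

```python
from difflib import SequenceMatcher
from typing import Optional

# Hypothetical topics and trigger phrases (illustrative only).
TOPICS = {
    "Store hours": ["what are your opening hours", "when are you open"],
    "Order status": ["where is my order", "track my order"],
}


def similarity(a: str, b: str) -> float:
    """Rough string similarity in [0, 1]; a stand-in for real intent matching."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def match_topic(query: str, threshold: float = 0.6) -> Optional[str]:
    """Return the topic whose trigger phrase best matches the query, if any."""
    best_topic, best_score = None, 0.0
    for topic, phrases in TOPICS.items():
        for phrase in phrases:
            score = similarity(query, phrase)
            if score > best_score:
                best_topic, best_score = topic, score
    # No topic is activated when nothing is close enough to the query.
    return best_topic if best_score >= threshold else None


print(match_topic("Where is my order?"))
```

The point of the sketch is the limitation: the agent can only land on a topic that someone anticipated and wrote trigger phrases for.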

However, the industry has evolved, and LLMs have transformed the field of Conversational AI and agent development towards agents with reasoning and planning capabilities.

Copilot Studio has also adopted this new approach by introducing the “Generative Orchestration” mode for agent development.

❗Generative orchestration is currently in preview, with more information about licensing and cost expected around general availability (GA).

First, let’s explain agent planning.

What is agent planning?

Agent planning is about creating a detailed plan to achieve a goal, breaking it down into smaller tasks, and executing those tasks in a structured manner to achieve the goal requested by the user.

One architecture of agent planning is the ReWOO (Reasoning Without Observations) agent, which allows tasks to depend on previous task results by permitting variable assignment in the planner’s output. This design can execute a task list without needing to re-plan every time, making it more efficient.

Source: https://blog.langchain.dev/planning-agents/

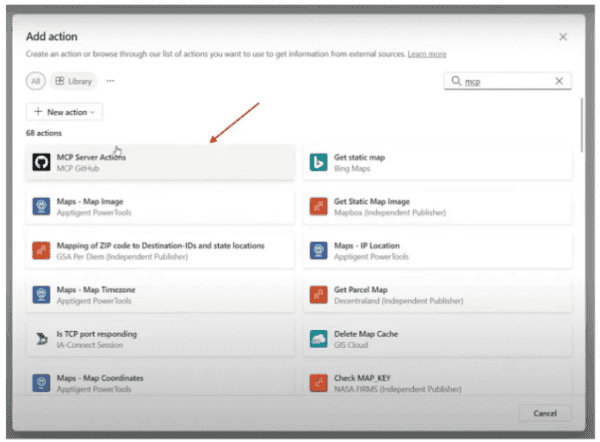
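The ReWOO idea of "plan once, then execute with variable substitution" can be sketched in a few lines. This is a toy illustration under my own assumptions, not the LangChain implementation: the `search` and `shout` tools, the hard-coded `PLAN`, and the `#E1`-style placeholders are hypothetical, and in a real agent the plan would be generated by an LLM.

```python
import re


# Hypothetical tools; a real agent would call search APIs, connectors, etc.
def search(query: str) -> str:
    facts = {"capital of France": "Paris"}
    return facts.get(query, "unknown")


def shout(text: str) -> str:
    return text.upper()


TOOLS = {"search": search, "shout": shout}

# The plan is produced once, up front. "#E1" is a placeholder that will be
# replaced by the result of step 1 when step 2 runs.
PLAN = [
    ("#E1", "search", "capital of France"),
    ("#E2", "shout", "The answer is #E1"),
]


def execute(plan):
    """Run each step in order, substituting earlier results into later inputs."""
    results = {}
    for variable, tool_name, argument in plan:
        resolved = re.sub(r"#E\d+", lambda m: results[m.group(0)], argument)
        results[variable] = TOOLS[tool_name](resolved)
    return results


print(execute(PLAN))
```

Because later steps refer to earlier results by name, the executor never has to go back to the planner between steps, which is where the efficiency gain comes from.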

A key component of the plan is agent tools. These tools enable agents that use large language models (LLMs) to interact with the outside world. They provide an interface for LLMs to access and retrieve information from external sources. To ensure the tool is callable, it is essential to provide a detailed description of the tool, including its input and output parameters.

Source: https://python.langchain.com/docs/concepts/tool_calling/
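As a rough illustration of what "a detailed description of the tool" means, the sketch below models a tool as a name, a description, and described inputs and outputs, then renders it as JSON that a planner could read. The `ToolSpec` class and the `get_order_status` tool are hypothetical; this is neither the LangChain nor the Copilot Studio schema.

```python
import json
from dataclasses import dataclass, field


@dataclass
class ToolSpec:
    """Hypothetical description of a tool as a planner might see it."""
    name: str
    description: str
    inputs: dict = field(default_factory=dict)   # parameter name -> description
    outputs: dict = field(default_factory=dict)  # output name -> description

    def to_prompt(self) -> str:
        """Render the spec as JSON text that can be placed in an LLM prompt."""
        return json.dumps(
            {
                "name": self.name,
                "description": self.description,
                "inputs": self.inputs,
                "outputs": self.outputs,
            },
            indent=2,
        )


order_status = ToolSpec(
    name="get_order_status",
    description="Look up the delivery status of a customer order.",
    inputs={"order_id": "The order number the customer received by email."},
    outputs={"status": "One of: processing, shipped, delivered."},
)

print(order_status.to_prompt())
```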

Now, let’s discuss Copilot Studio generative orchestration and how it relates to what we have discussed so far.

Copilot Studio Planner, Plan with Generative Orchestration

According to Microsoft documentation: “With generative orchestration, the planner first creates a plan to answer the user query. It then tries to execute the plan by filling in required inputs from the conversation, following up with the user for any missing or ambiguous details. The system checks that it found an answer to the user’s question before replying to the user. If not, it goes through the process again. Finally, the system generates a response based on the output of the plan”.

Looking closely at the different Copilot Studio components, we can say that the following are the key components of the plan:

- Agent Instructions

- The last 10 turns of conversation history

- Knowledge Sources (Agent RAG tool)

- Topics (Agent tool with inputs/outputs)

- Actions (Agent tool with inputs/outputs)

❗Important notes about the plan (Generative orchestration):

- For all knowledge sources, Copilot Studio selects the top four knowledge sources (based on the description given to the knowledge source), regardless of type. Those four knowledge sources are searched, in addition to all of the uploaded files.

- In general, there is a limit of 1000 topics per agent in Dataverse environments. However, there is most probably a prefiltering step before the plan is generated, to comply with LLM limits such as context size.

- Similar to topics, there is most probably a prefiltering step for actions as well.

- It is not clear or documented at this stage whether the global scope variables are considered when generating the plan or not.

- Topic inputs and outputs can share values with other topics and actions in the same generated plan (referred to as dynamic chaining in the context of Copilot Studio).

- Topics and actions don’t yet support custom entities as input parameters.

- When an agent searches knowledge sources in generative mode, it no longer uses the Conversational boosting system topic.
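The first note above (top four knowledge sources chosen by description, plus all uploaded files) can be sketched as follows. How Copilot Studio actually scores descriptions is not documented, so the word-overlap scoring here is purely my assumption, and the source names, descriptions, and the `select_sources` helper are hypothetical.

```python
import re


def tokenize(text: str) -> set:
    """Lowercase word set; a crude stand-in for real semantic matching."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def select_sources(query, sources, uploaded_files, top_n=4):
    """Pick the top_n sources by description overlap, then add every uploaded file."""
    query_words = tokenize(query)
    ranked = sorted(
        sources,
        key=lambda s: len(query_words & tokenize(s["description"])),
        reverse=True,
    )
    return [s["name"] for s in ranked[:top_n]] + list(uploaded_files)


# Hypothetical knowledge sources; only the descriptions drive the selection.
SOURCES = [
    {"name": "HR policies", "description": "Vacation, leave and HR policy documents"},
    {"name": "IT help", "description": "Laptop, VPN and password reset guides"},
    {"name": "Sales", "description": "Product pricing and discount sheets"},
    {"name": "Legal", "description": "Contract templates and legal guidance"},
    {"name": "Facilities", "description": "Office access, parking and building maps"},
    {"name": "Travel", "description": "Travel booking and expense policy"},
]

print(select_sources("How do I reset my VPN password?", SOURCES, ["handbook.pdf"]))
```

Even in this toy version, a source with a vague description never makes the cut, no matter how good its content is.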

If we treat knowledge sources, topics, and actions all as agent tools, then providing high-quality names and descriptions is critical for successful plan generation and, where applicable, for the prefiltering step.

The importance of high-quality names and descriptions and the necessity of having a domain business expert on your agent-building team

It’s important to provide a high-quality name/description for each topic (and inputs/outputs), action (and inputs/outputs), and knowledge source. Good descriptions ensure the agent selects the right topics, actions, and knowledge sources to respond to users.

High-quality descriptions are now essential and should be considered a cornerstone of your agent development activities. While Copilot Studio developers/architects might create these, having a domain business expert on your team ensures the descriptions align with the queries business users will use when interacting with your agent.

Equip your agents with the minimal tools (knowledge sources, topics, actions) to complete the task

Generative orchestration is not always completely accurate. Actions might not always work as intended. Errors might occur when preparing the input for the action/topic or when generating a response based on the action’s output. Your agent might also call the wrong knowledge source/topic/action for the user query.

To reduce the risk of errors when using generative orchestration, maintain high-quality names and descriptions. In addition, limit the enabled knowledge sources, topics, and actions to only those the agent needs to complete its tasks; this helps avoid errors and LLM hallucination while the plan is generated.

Generative slot filling for Topics/Actions Input Parameters

If your agent uses generative orchestration, it can automatically fill inputs before running the topic/action, either by using the conversation context or by generating questions that ask the user for the missing values.

By default, the planner uses the name and description of input parameters to automatically generate questions to prompt the user for any missing information. However, you can override this behavior and author your own question.
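A minimal sketch of this slot-filling behavior is shown below. It is an assumption-laden illustration: the `fill_inputs` helper, the parameter dictionaries, and the "What is the ...?" question template are hypothetical, and the real planner generates its questions with an LLM rather than a template.

```python
def fill_inputs(parameters, context, ask=input):
    """Fill each input from the conversation context, or ask the user for it."""
    values = {}
    for parameter in parameters:
        name = parameter["name"]
        if name in context:
            # The value was already mentioned in the conversation.
            values[name] = context[name]
        else:
            # Use the authored question if present, else generate one
            # from the parameter description.
            question = parameter.get("question") or f"What is the {parameter['description']}?"
            values[name] = ask(question)
    return values


# Hypothetical inputs for an order-tracking action.
PARAMETERS = [
    {"name": "city", "description": "delivery city"},
    {"name": "order_id", "description": "order number", "question": "Which order do you mean?"},
]

# A scripted "user" so the example runs without typing anything.
answers = fill_inputs(PARAMETERS, {"city": "Cairo"}, ask=lambda question: "A-1001")
print(answers)
```

Here `city` comes from the conversation context, while `order_id` triggers the authored question instead of a generated one.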

What about the authored conversation path?

As discussed, in generative orchestration mode the agent automatically generates a response using the available information from the topics, actions, and knowledge it has called. But sometimes you still need control over the response, so what can you do?

In general, at the topic level, if you configure the output of your topic as an output variable, you are giving control to the agent (LLM) to generate a contextual response for your users.

If you need full control over the answer, you will need to define the output as a message/question node within the topic.

What about Copilot Studio and Multi-Agent Orchestration?

Although the roadmap does not currently provide information about Copilot Studio and the Multi-Agent Orchestration feature (based on our current knowledge), I believe we have a significant opportunity with the generative orchestration mode.

Using the generative orchestration mode, I think there is a potential implementation (a feature request) based on what I call virtual agents within a Copilot Studio agent.

Copilot Studio Virtual Agents

From my POV, generative orchestration makes room for a new concept within the Copilot Studio agent called a “Virtual Agent”: you give the virtual agent its own definition and instructions, and enable only the knowledge sources, topics, and actions needed for it.

Then a virtual orchestrator agent or internal planner would be responsible for orchestrating the different virtual agents and generating the final response to a user prompt/query.

❗Based on some Ignite 2024 demos we may see this multi-agent orchestration coming to Microsoft 365 Copilot – BizChat and declarative agents. I hope something similar will be available for Copilot Studio.

Copilot Studio Generative Orchestration: Implications for Enterprise Business Process Transformation

This new mode of building agents presents a significant business opportunity. It eliminates the need to design our agent’s conversation experiences based on predefined topics and attempt to anticipate every possible conversation path from the start. Instead, we can focus on more dynamic and flexible approaches for our agents.

With generative orchestration, you first need to decide what kind of use case or business process your agent serves: Retrieval (a simple chat agent), Task (a business process agent that can take actions), or Autonomous (an agent that runs autonomously to complete a complex business process). Then, determine the tools (knowledge sources, topics, and actions) necessary for your agent to perform its job successfully and define them accordingly.

It is not necessary to consider every possible conversation path from the beginning anymore. Instead, the agent will leverage its generative/LLM power to analyze user requests and the available tools to build and execute the optimal plan to fulfill the user request.

This reduces the time needed to develop flexible agents capable of managing diverse use cases, which in turn can increase return on investment (ROI) and customer satisfaction (CSAT).

Autonomous Agents to Reimagine Enterprise Business Processes and Human-AI Collaboration

Copilot Studio autonomous agents are here to transform business processes by executing and orchestrating tasks on behalf of individuals, teams, or functions. They will enhance productivity and efficiency, allowing businesses to scale operations and improve customer experiences.

But before we deep dive into Copilot Studio autonomous agents, let’s define what autonomous agents are in general.

What are autonomous agents?

According to Microsoft, ServiceNow, Salesforce, and other major market players:

Autonomous agents are sophisticated AI systems designed to perform tasks with minimal or no human intervention. Unlike traditional automation that follows predefined rules, these agents can operate in dynamic environments, making decisions and completing complex tasks independently. They leverage large language models (LLMs) to chain multiple thoughts together, use various tools and external sources for information, and can draw on memory from past interactions to enhance their effectiveness. By combining decision-making capabilities, memory, and tools, autonomous agents can achieve specific objectives or goals on their own, significantly improving productivity and efficiency in various applications.

Copilot Studio agents vs autonomous agents

The major difference between autonomous agents and standard agents is that autonomous agents can act autonomously based on event triggers, whereas standard agents always require initial input from a user. Event triggers enable autonomous agents to respond automatically when a specified event occurs.

❗Important Notes:

- It is important to understand that Copilot Studio generative orchestration mode is a prerequisite for developing Autonomous Agents.

- Autonomous agents are currently in preview, with more information about licensing and cost expected around general availability (GA).

What are agent triggers, trigger payload & instructions, and what information are we missing from Microsoft regarding these?

Event triggers generate a trigger payload and send it to the agent with instructions via a connector. The payload includes information about the event. Upon receiving the payload, the agent follows the instructions provided by the agent author and the instructions sent through the trigger payload.

Under the hood, an agent trigger is a Power Automate cloud flow with the chosen trigger (Power Automate trigger) and the “Sends a prompt to the specified copilot for processing” action (Power Automate action). After creating the trigger in Copilot Studio, you can modify the auto-generated flow in Power Automate.

Trigger payload & instructions

The trigger payload is a JSON or plain text message containing event information. It is sent to your agent as a message. You can use the default payload contents or add your own trigger instructions. Later, these contents can be modified in Power Automate.
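As a rough picture of what ends up in that message, the sketch below combines optional trigger instructions with the event data serialized as JSON. The `build_trigger_message` helper and the event fields (`from`, `subject`, `receivedAt`) are hypothetical; the actual payload shape depends on the Power Automate trigger you choose.

```python
import json


def build_trigger_message(event: dict, instructions: str = "") -> str:
    """Combine optional trigger instructions with the event data as one message."""
    payload = json.dumps(event, indent=2)
    if instructions:
        return f"{instructions}\n\n{payload}"
    # Default behavior: send only the event payload.
    return payload


# Hypothetical event from an "email received" trigger.
event = {
    "from": "supplier@example.com",
    "subject": "Invoice 123",
    "receivedAt": "2025-01-15T09:30:00Z",
}

message = build_trigger_message(
    event,
    instructions="A new supplier email arrived. Extract the invoice number and log it.",
)
print(message)
```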

Agent instructions versus trigger (payload) instructions

Agent instructions define the agent’s objective, behavior, and any other rules it should follow. These instructions are also used during the planning step to create the agent plan for completing specific requests.

However, agent instructions might not work best for all situations. If your agent has multiple triggers or multiple complex goals, you should use instructions in the trigger payload instead. These trigger (payload) instructions tell the agent how to respond to one specific event.

What information are we missing from Microsoft regarding these different instruction types?

While some may argue we have all the necessary information, I believe that for complex agent use cases, it is very important to understand how the Copilot Studio planner combines these different instructions, and in which order, to produce the overall agent instructions for plan generation and execution.

Also, it is likely that there is a hidden background system prompt being injected. While it is not necessary to know the specific system prompt, understanding how the different types of instructions are integrated can help us write better instructions for our agents.

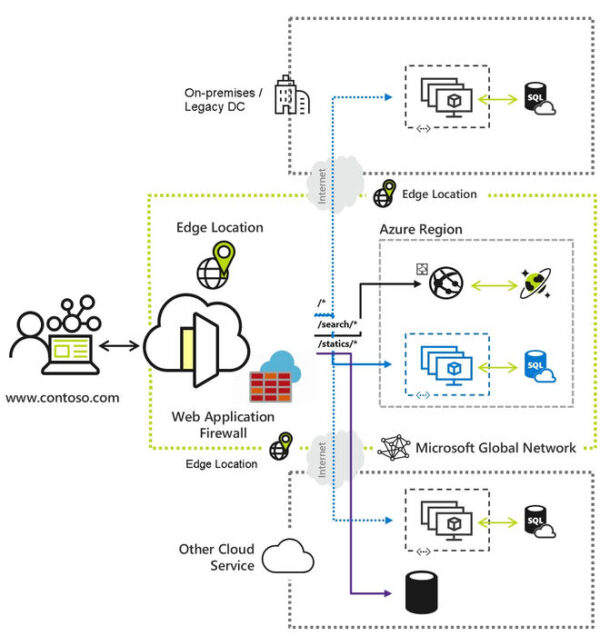

Autonomous agent, triggers, actions identities and the need for an agent service account

For agents to access information or take actions on various systems, they must have an identity to interact with these systems.

In my opinion, there are two types of access identity, implicit and explicit:

Implicit identity – Knowledge Sources (Require authentication): The agent utilizes the current user identity to access knowledge sources. The agent must be configured to require user authentication, and currently, there is no option to use a different identity for accessing these knowledge sources.

❗Currently, autonomous agents lack an implicit identity, which introduces certain challenges that will be discussed next.

Autonomous agents are unable to reference certain knowledge sources. Some knowledge sources require authentication for access, which agents cannot provide autonomously.

The following knowledge sources cannot be utilized by autonomous agents:

- SharePoint

- Dataverse

- Graph Connectors

- AI Builder Prompts

❗It is clear that this is a recognized gap by Microsoft, and it is likely that we will see a solution to this limitation in the near future.

Explicit identity – Triggers & Actions: For triggers, the agent uses the trigger (Power Automate trigger) identity to access the trigger source system. By default, this will be the agent maker identity, but it is possible to change this and select a different account.

For actions, Copilot Studio agents in general have two types of authentication:

- Agent author authentication: The agent uses a connection reference in the background to provide the identity for the action. By default, this will be the agent maker identity, but it is possible to change this and select a different account.

- End user authentication: The agent utilizes the current user identity to authenticate the action. The agent must be configured to require user authentication for this to work.

❗Autonomous agents, by design, do not support “end user authentication” as there is no user identity when the agent starts autonomously.
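The consequence for action design can be captured in a small check. This is only a sketch of the reasoning above: the `Auth` enum and the `can_run` helper are hypothetical names of mine, not Copilot Studio APIs.

```python
from enum import Enum


class Auth(Enum):
    """The two action authentication types described above."""
    AGENT_AUTHOR = "agent author authentication"
    END_USER = "end user authentication"


def can_run(action_auth: Auth, user_signed_in: bool) -> bool:
    """Return True if an action has an identity available to run under."""
    if action_auth is Auth.AGENT_AUTHOR:
        # The connection reference supplies the identity, with or without a user.
        return True
    # End user authentication needs a signed-in user to borrow the identity from.
    return user_signed_in


# An autonomous run starts from an event trigger, so there is no signed-in user.
print(can_run(Auth.AGENT_AUTHOR, user_signed_in=False))
print(can_run(Auth.END_USER, user_signed_in=False))
```

In other words, every action an autonomous agent depends on has to be configured with agent author authentication.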

The need for an agent service account

While there is currently no official guidance from Microsoft on this matter, from my POV I see that for the “Explicit identity – Triggers & Actions” path, you may need to create a service account to represent your agent’s identity.

For retrieval scenarios, the service account will be used to access the required data. This will also simplify auditing this identity across the enterprise to determine which agent has access to what data.

For task-based scenarios, it is essential to ensure that a particular action is performed by an agent’s service account identity rather than an agent’s Copilot Studio maker identity.

Agents’ memory, and what Copilot Studio autonomous agents are missing (for now)

I really like the LangChain definition of memory for agents (which references the CoALA paper):

Memory for agents is a system that allows them to remember previous interactions, which is crucial for creating a good user experience. There are three main types of memory for agents:

Procedural Memory: Long-term memory for how to perform tasks, similar to a brain’s core instruction set. (LLM weights, system instructions, agent code)

Semantic Memory: Information from the conversation or interactions the agent had. This information is then retrieved in future turns and inserted into the system prompt to influence the agent’s responses. (Conversation history)

Episodic Memory: Episodic memory is implemented as few-shot example prompting. Collecting enough sequences enables dynamic few-shot prompting. This method is effective for guiding the agent in performing specific actions correctly, based on previous experiences.

It is clear that Copilot Studio autonomous agents currently have the concepts of procedural memory and semantic memory. However, there remains an absence of episodic memory (At least for now).

The positive aspect is that Microsoft has already included this in their vision, as indicated in the initial announcement about autonomous agents. We simply need to wait for it to be made available.

To learn more about this vision, see Microsoft’s initial announcement: Microsoft Copilot Studio: Building copilots with agent capabilities

Autonomous agents’ governance

In any enterprise deployment, the question will certainly arise: how can we govern these autonomous agents (enable/disable them)?

The positive aspect is that we already have governance control in place through the robust Power Platform data loss prevention (DLP) policies.

As discussed earlier, the difference between standard agents and autonomous agents is the event trigger.

To govern autonomous agents, you can use the Microsoft Copilot Studio connector in Power Platform admin center DLP policies to prevent agent makers from adding event triggers.

What does “human in the loop” mean?

Now, let’s discuss “human in the loop” in the context of agents, define its meaning, and examine how it could impact the use of autonomous agents in your enterprise.

“Human in the loop” refers to a design approach where human intervention is integrated into automated systems or processes. This concept is particularly important in building agents, as it allows for human oversight, decision-making, and corrections at critical points. This ensures that the autonomous agent workflow can benefit from human judgment and expertise, enhancing its reliability and effectiveness.

Currently, Copilot Studio’s autonomous agents lack the “human-in-the-loop” aspect. However, similar to episodic/long-term memory, the good news is that this feature is already included in Microsoft’s vision.

This means that, while we wait for human-in-the-loop support to arrive, we need to decide which use cases an autonomous agent can handle independently.

Should we use Copilot Studio autonomous agents for all our business processes, or do we need to give this another thought?

While Copilot Studio autonomous agents have significant potential to transform enterprise business processes, the absence of “human-in-the-loop” means they may not be suitable for all use cases within the enterprise.

From my POV, there are three types of use cases where agents could be utilized:

1- Retrieval: Use cases in which the agent retrieves information from grounding data, reasons, summarizes, and answers user questions.

Responsible AI: It is important to inform the user that this response has been partially or entirely generated by an AI agent.

2- Side Effect: Use cases where the agent may produce side effects or perform actions within Line of Business (LOB) systems. These actions must be reversible and should not result in significant financial loss.

Responsible AI: It is essential that a responsible human oversees these agents. This individual should review all interactions by agents and be held accountable for any actions taken.

3- Mission Critical: Use cases where the agent may produce side effects or perform actions within LOB systems. These actions cannot be reversed or may result in significant financial loss.

Responsible AI: Deploying agents to handle these use cases independently is not advisable. Instead, agents can be utilized to prepare information for a human decision-maker, or their actions should be overseen by a human within a human-in-the-loop workflow.

Given the current state of Copilot Studio autonomous agents, they may be suitable for Retrieval and Side Effect use cases. However, the absence of “human-in-the-loop” currently makes them inapplicable for Mission Critical use cases.
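The three categories can be turned into a simple decision helper. This is a sketch of my own classification only: the function names and the three yes/no questions are assumptions about how you might assess a use case, not an official framework.

```python
def classify(has_side_effects: bool, reversible: bool, significant_financial_risk: bool) -> str:
    """Map a use case onto the three categories described above."""
    if not has_side_effects:
        return "Retrieval"
    if reversible and not significant_financial_risk:
        return "Side Effect"
    return "Mission Critical"


def suitable_for_autonomous_agent(category: str) -> bool:
    """Without human-in-the-loop, only the first two categories qualify."""
    return category in {"Retrieval", "Side Effect"}


# Hypothetical examples: answering policy questions, updating a CRM note,
# and issuing a payment that cannot be recalled.
for name, args in [
    ("Policy Q&A", (False, True, False)),
    ("Update CRM note", (True, True, False)),
    ("Issue supplier payment", (True, False, True)),
]:
    category = classify(*args)
    print(name, "->", category, "| autonomous:", suitable_for_autonomous_agent(category))
```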

To be continued!

About the Author

Mahmoud Hassan

Microsoft MVP | Empower enterprises to thrive with Microsoft Copilot & Modern Workplace AI solutions

Reference:

Hassan, M. (2025). Microsoft Copilot Studio: The Low-Code Agentic Engine for Enterprise Business Process Transformation. LinkedIn. [Accessed: 30th September 2025].