As the end of October creeps nearer and pumpkins begin to appear in windows, it’s hard to resist the fun of spooky season. A holiday designed around enjoying treats, haunted houses and jump scares makes the colder, darker evenings a bit more bearable.

But in the tech world, the things that go bump in the night are not hiding in the shadows. They are hidden in emails, embedded in files or whispering in cloned voices that sound just like someone you know.

The truth is that the scariest monsters today live in our devices, and unlike ghosts, they can cause real-world harm.

This Halloween, let’s take a closer look at the threats haunting our digital world and how to face them head-on, before meeting in Dublin this December at ESPC25, where cybersecurity and AI experts will help you stay safe, smart and ahead of the curve.

Modern Cybersecurity Nightmares You Shouldn’t Ignore

Cybersecurity has always required vigilance, but 2025’s threat landscape is a whole new kind of chilling. Attackers are faster, more sophisticated and increasingly powered by AI. Here are three of the most haunting challenges facing organisations today.

1. Deception at Scale: Deepfakes, Cloned Voices and Smarter Phishing

AI has transformed social engineering. No longer confined to poorly written emails or fake invoices, attackers can now replicate an executive’s voice or generate a video message that looks completely authentic. A convincing call from a “CEO” instructing a transfer, or a perfectly phrased phishing email referencing internal projects, can fool even experienced professionals.

These AI-driven impersonations rely on the accessibility of generative tools. Publicly available data, social media posts and meeting recordings provide everything needed to mimic tone, phrasing and personality.

Defence now depends less on spotting typos and more on verifying context. Multi-person authorisation for financial actions, regular awareness training and clear internal communication protocols are critical. When in doubt, confirm the source before acting.

2. The Silent Risks of Helpful AI

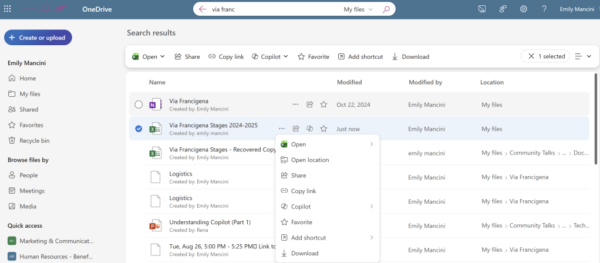

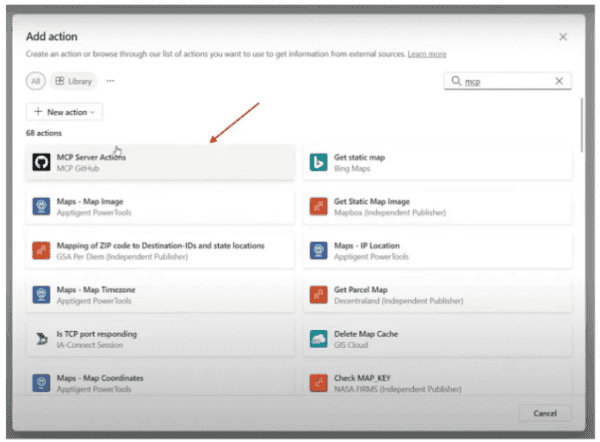

Digital assistants and generative tools are rapidly becoming essential to daily work. From scheduling meetings to summarising reports, they are designed to make life easier. Yet behind that convenience lies risk.

AI agents often have access to sensitive data such as calendars, contacts and internal messages. Without proper governance, that information can be exposed, stored insecurely or even fed into external systems outside IT’s control. The growing phenomenon of Shadow AI, where employees use unapproved tools to save time, compounds the danger.

The solution is not restriction but responsible adoption. Organisations need clear usage policies, regular audits and open communication about what tools are approved and why. Awareness and transparency are far more effective than fear or blanket bans.

3. The Race Against the Unknown

As technology evolves, new vulnerabilities appear almost overnight. Zero-day exploits, AI-driven malware and manipulative prompts hidden within data have become increasingly common. Attackers are experimenting with ways to target the AI systems themselves, exploiting how models interpret or process content.

Security is no longer about patching a single system; it is about resilience. That means keeping every device updated, enforcing security policies across personal and corporate equipment, and continuously testing how systems respond under pressure. Most of all, it means ensuring teams know what to do when something feels wrong.

The human factor remains the most important element of any defence. When employees are confident enough to question unusual requests or escalate concerns, threats can be stopped before they spread.

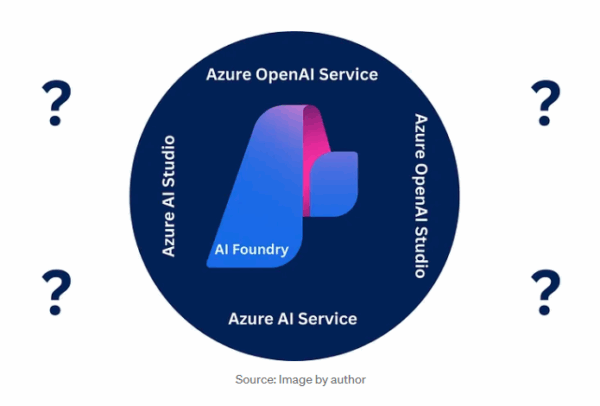

Should We Be Scared of AI?

AI does not think for itself. It recognises patterns and performs tasks within the limits of its training. It cannot act beyond the data it has been given. What it can do, however, is amplify both human potential and human error. Poorly designed models can spread bias or misinformation, while responsible AI use can revolutionise productivity and insight.

The Real Threat: Being Left Behind

The real scare story is not AI replacing humans; it is humans failing to evolve with AI.

The professionals thriving in the next decade will be those who understand and harness AI, not those who resist it. Tools such as Copilot are rapidly becoming standard workplace utilities. Knowing how to leverage them will be as fundamental as knowing how to use email once was.

So instead of fearing AI’s rise, the smarter move is to get ahead of it, and ESPC25 is designed to help you do exactly that.

Learn. Adapt. Stay Ahead.

This December, join the brightest minds in tech at ESPC25 in Dublin, where AI and cybersecurity converge in one of Europe’s premier technology conferences.

You will gain insights from global experts, hands-on workshops and real-world case studies that show you how to:

- Strengthen your defences against today’s cyber threats

- Use AI to empower, not endanger, your organisation

- Stay adaptable in a rapidly changing tech landscape

Whether you are in IT, development, business leadership or security, ESPC25 is where you turn fear into forward-thinking.

👉 BOOoOoOk your ticket for ESPC25 today to future-proof your skills.

Your Halloween Treat Awaits

Do not miss our special Halloween Eve “Trick or Treat” inbox surprise – a little something from the ESPC team that may just give your inbox a fright.