During OpenAI’s recent DevDay, a smaller but important announcement was JSON mode for GPT-4 and GPT-3.5 models. This means you can constrain the output of GPT models to valid JSON, paving the way for structured data scenarios. This allows for capabilities such as turning chat prompts into formatted orders for e-commerce applications or, as I’ll explore today, using GenAI for data science applications such as text analytics.

In this blog post, I’ll explore a hypothetical scenario in which we’ll pass customer reviews through the Azure OpenAI service to summarise not just review sentiment, but also recommended improvement steps that both a retailer and a manufacturer could take based on the customer feedback. This scenario is difficult to achieve with traditional text analytics models, which generally classify or score text rather than generate recommendations.

I haven’t done any data science work for several years now, but with the advent of GenAI copilots the barrier to entry (and, in my case, re-entry) has become significantly lower. To create this post, I used both ChatGPT and GitHub Copilot to fill gaps in my knowledge and to write and debug code. I was genuinely impressed with how much I could achieve with these tools, and I’ll include prompts and examples throughout.

Creating the Reviews

Before we can process reviews, we need to create them. In a real-world situation, we would import reviews from Google or other aggregators, but for our hypothetical case, we’ll ask ChatGPT to generate some samples for us. I made 9 reviews in total: 3 positive, 3 neutral and 3 negative. Below are the prompt, which I adjusted each time to produce a different sentiment, and an example of a negative review. I then saved these reviews in a TXT file. In hindsight I could have done this via the API, but this step isn’t the focus of the project.

The Prompt:

I’m creating sample data to experiment with text analytics capabilities. I would like you to create three positive reviews about a small portable BBQ from the brand Cookup that might show up on the review page of a retail store. Each review should have a comment about the store experience and or the product itself.

And a sample negative review:

“I got the Cookup Portable BBQ from Outdoor Essentials and it’s been a frustrating experience. The BBQ is flimsy, and the ignition system is unreliable. I tried to get assistance at the store, but the staff were dismissive and not helpful. Overall, a waste of money and time. I’ll be looking elsewhere for my outdoor cooking needs.”

Preparing our Python Environment

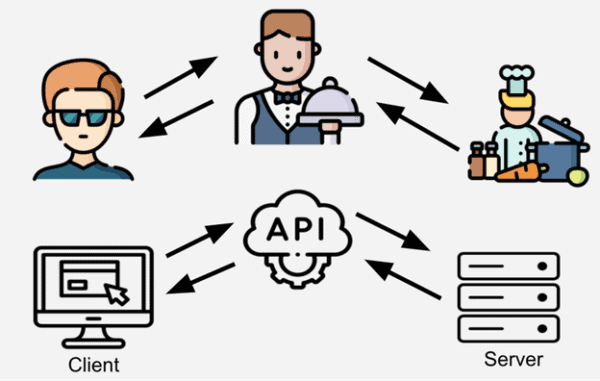

To analyse the reviews, I configured the Azure OpenAI chat completions API to produce JSON output. The request takes a system message and a user message, which act as our task description and task respectively. I’m using version 1.3.8 of the openai Python library for today’s examples, as it supports JSON mode. I was unsure about how to configure the openai API, so I copied and tweaked code directly from the Microsoft docs: How to use JSON mode with Azure OpenAI Service – Azure OpenAI | Microsoft Learn
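For readers setting this up from scratch, here is a minimal sketch of how the client might be configured with version 1.x of the openai library. The environment variable names and the default API version are assumptions for illustration, not values taken from the notebook, so swap in whatever your own Azure OpenAI resource uses.

```python
import os

# Assumed default; use the API version your Azure OpenAI resource supports.
DEFAULT_API_VERSION = "2023-12-01-preview"


def load_azure_openai_settings(env=os.environ):
    """Collect the keyword arguments that openai.AzureOpenAI expects.

    The environment variable names below are assumptions for this sketch.
    """
    required = ("AZURE_OPENAI_ENDPOINT", "AZURE_OPENAI_KEY")
    missing = [name for name in required if not env.get(name)]
    if missing:
        raise RuntimeError(f"Missing environment variables: {', '.join(missing)}")
    return {
        "azure_endpoint": env["AZURE_OPENAI_ENDPOINT"],
        "api_key": env["AZURE_OPENAI_KEY"],
        "api_version": env.get("AZURE_OPENAI_API_VERSION", DEFAULT_API_VERSION),
    }


def make_client(env=os.environ):
    """Create the client object (requires the openai package, version 1.x)."""
    # Imported lazily so the settings helper can be used without the package.
    from openai import AzureOpenAI

    return AzureOpenAI(**load_azure_openai_settings(env))
```

Calling `make_client()` once near the top of the notebook gives a `client` object for the later steps. Note that with Azure OpenAI the `model` argument of a request is the name of your deployment rather than the underlying model name.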

The code provided in the docs works well, but it stores the model response in a variable; I wanted a function where I could tweak the prompt inputs and use it repeatedly. Over to GitHub Copilot for this. By simply copying the code into Copilot and providing a prompt, I got the function I needed.

To finish up, I simply adjusted the output by passing it through the json.loads() function and changed the function name. The final code is as follows.

    import json

    # `client` (the Azure OpenAI client) and AZURE_OPENAI_MODEL (the deployment
    # name) are assumed to be defined earlier in the notebook.
    def get_chat_completion_json(system_content, user_content):
        response = client.chat.completions.create(
            model=AZURE_OPENAI_MODEL,
            response_format={"type": "json_object"},
            messages=[
                {"role": "system", "content": system_content},
                {"role": "user", "content": user_content},
            ],
        )
        # The model returns JSON as a string, so parse it into a Python dict
        return json.loads(response.choices[0].message.content)
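One caveat: json.loads() raises an error if the text it receives is not complete JSON, which can happen if a response is cut off by the token limit. The helper below is a hypothetical addition, not part of the original notebook, sketching one way to parse the message content more defensively.

```python
import json


def parse_json_reply(content):
    """Parse the model's message content, returning None instead of raising."""
    if not content:
        return None
    try:
        parsed = json.loads(content)
    except json.JSONDecodeError:
        # Incomplete or malformed JSON, for example a truncated response
        return None
    # JSON mode asks for a JSON object, so treat anything else as a failure
    return parsed if isinstance(parsed, dict) else None
```

A caller can then check for `None` and retry or skip that review, rather than having one bad response stop the whole run.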

Prepping the Dataset

This time we again turn to GitHub Copilot. I had all the reviews newline-separated in my TXT file, but I couldn’t remember how to load them into a data frame for analysis, so over to Copilot! The code it originally created gave me an error, so I simply told it as much and received a new, working approach.
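The code Copilot produced isn’t reproduced here, but loading a newline-separated file can be sketched roughly as follows. The function name is illustrative, and the approach is an assumption about how this step could be done rather than the notebook’s actual code.

```python
from pathlib import Path


def load_reviews(path):
    """Return one review per non-empty line of a UTF-8 text file."""
    text = Path(path).read_text(encoding="utf-8")
    # Strip surrounding whitespace and drop blank separator lines
    return [line.strip() for line in text.splitlines() if line.strip()]
```

With pandas installed, the resulting list can be turned into a data frame with something like `pd.DataFrame({"review": load_reviews("reviews.txt")})`, where `reviews.txt` is a placeholder file name.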

System Prompts and Review Summaries

Now that I had the Python functions built, I started to process the text data through GPT-4. Here prompt engineering was key: I not only had to specify that I wanted JSON output, but also provided examples of what each key-value pair should contain. Below is the final prompt.

    SysMsg = """
    You are a helpful assistant designed to output JSON. You will be given a review for a product and you should output the following JSON format.
    {
    "sentiment": "positive" or "negative" or "neutral",
    "recommended_store_action":"If the review is negative or neutral, what is an action we can take to improve the customer experience in the retail store, if it positive review or you’re unsure simply state not applicable",
    "recommended_product_action":"If the review is negative or neutral, what is an action we can take to improve the product, if it positive review or you’re unsure simply state not applicable"
    }
    """
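Because the prompt describes the expected keys in words rather than enforcing them, it can be worth checking each parsed response before relying on it. The following is a small sketch of such a check; the function and constant names are hypothetical and not part of the original notebook.

```python
EXPECTED_KEYS = {"sentiment", "recommended_store_action", "recommended_product_action"}
ALLOWED_SENTIMENTS = {"positive", "negative", "neutral"}


def validate_review_summary(summary):
    """Return a list of problems found in one parsed response (empty if fine)."""
    problems = []
    missing = EXPECTED_KEYS - set(summary)
    if missing:
        problems.append(f"missing keys: {sorted(missing)}")
    # Compare case-insensitively, since the model may capitalise the label
    sentiment = str(summary.get("sentiment", "")).strip().lower()
    if sentiment not in ALLOWED_SENTIMENTS:
        problems.append(f"unexpected sentiment: {summary.get('sentiment')!r}")
    return problems
```

An empty list means the response has the three keys the prompt asks for and a recognised sentiment label.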

With this prompt set as the system message and the review as the user message, I tried it out on one of the negative reviews with a great result.

With one review processed, it was simple enough to apply this iteratively to all reviews. In this scenario I leveraged Copilot to create the iterative function, and the output of this process is below.
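The Copilot-generated function isn’t shown in this post, so here is a rough sketch of what such a loop could look like. The name `summarise_reviews` and the choice to pass the analysis function in as an argument are assumptions made for illustration.

```python
def summarise_reviews(reviews, analyse):
    """Run `analyse` on each review and keep the review text next to its result.

    `analyse` is any callable that takes a review string and returns a dict.
    """
    rows = []
    for review in reviews:
        try:
            result = analyse(review)
        except Exception as exc:
            # Record the failure and carry on with the remaining reviews
            result = {"error": str(exc)}
        rows.append({"review": review, **result})
    return rows
```

In this post’s setup the call would look something like `summarise_reviews(reviews, lambda r: get_chat_completion_json(SysMsg, r))`, and the resulting list of dicts can be loaded straight into a data frame.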

Aggregating Feedback and Commentary

It’s worth pausing here to remark on what’s been done so far: from plain-text customer reviews we were able to extract not only how a customer felt, but also what next steps we could take as a business to improve based on that feedback, traditionally a highly manual process. To round out this blog, I put the recommendations together and passed them back to GPT-4, this time asking the model to summarise the requests and evaluate what changes could be made if we prioritised actions by effort to implement and impact. This helps the model focus on outcomes and is more token-efficient than passing every review into the model at once.

    SysMsgReview = """
    You are a helpful assistant designed to help provide a recommendation for best path forward for product improvement. Given many recommended actions for remediation, you should consider which recommendations might be the easiest to implement for our portable barbeque product, and which recommendations are the most requested.
    """
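Putting the recommendations together could be sketched as below. This is an illustrative helper under my own assumptions (the function name, the numbered format and the handling of "not applicable" values are not taken from the notebook).

```python
def build_recommendation_message(rows, key="recommended_product_action"):
    """Join the useful recommendations into one numbered user message."""
    actions = []
    for row in rows:
        action = str(row.get(key, "")).strip()
        # Skip blanks and the "not applicable" placeholder from the system prompt
        if action and action.lower().rstrip(".") != "not applicable":
            actions.append(action)
    return "\n".join(f"{i}. {action}" for i, action in enumerate(actions, start=1))
```

The returned string is what would be sent as the user message alongside the system message above, so the model only sees the short recommendations rather than the full review text.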

Here was the model’s response:

To determine the best path forward for product improvement for the portable barbecue product, we need to consider factors such as the impact on customer satisfaction, cost, implementation time, and feasibility. Looking at the suggestions:

1. Design a new model with a larger grilling area while maintaining portability: This could significantly increase customer satisfaction for those who require more space. However, redesigning and producing a new model could be time-consuming and expensive.

2. Investigate BBQ design for potential improvements in heating efficiency and temperature management instructions or tips: This may be easier to implement as it could involve tweaks to the current design rather than a full redesign. Providing better instructions or tips could be quite straightforward and cost-effective.

3. Investigate heat distribution issues and design improvements: This is critical as even heating is a fundamental aspect of a good BBQ. Changes could range from minor tweaks to significant redesigns, depending on the severity of the issue.

4. Reassess the build quality and ignition system for durability and reliability: This is essential for customer trust and product longevity. Improving the quality of materials or manufacturing processes might be moderately difficult but important for long-term brand reputation.

5. Review the BBQ design to improve sturdiness and heat control consistency: This might be easier to implement compared to a complete redesign and is crucial for user experience.

6. Improve ease of setup and ensure even heating; making a version with more grill space: Easing the setup process could involve clearer instructions or minor design changes and is thus relatively easy to implement. Providing more grill space without compromising portability may require a significant redesign.

Based on customer feedback frequency and ease of implementation, the prioritized actions might be:

– Easiest to Implement:
1. Provide clearer instructions or tips for optimal temperature management.
2. Improve ease of setup with better instructions or minor design adjustments.

– Most Requested (Assuming you have customer feedback indicating this):
1. Design improvements for even heat distribution.
2. A larger grilling area to accommodate customer needs.

It would be important to gather data on customer feedback to confirm which issues are most frequently reported. Additionally, conducting customer surveys can help prioritize which improvements would be most valued by users.

What a powerful outcome! It’s worth noting that today I only scratched the surface of what’s possible; there are deeper and more interesting questions to ask, filters to be applied and probably much better code than what’s been created here.

Thanks for reading to the end and I hope you enjoyed this post. You can find the notebook here, ready for you to connect your Azure OpenAI service. GenAIforTextAnalyticsExample/CustomerReviewProcessing.ipynb at main · DCsillag/GenAIforTextAnalyticsExample (github.com)

This blog is part of Azure AI Week.

About the Author:

Retail & Commercial Real-Estate Technology Strategist at Microsoft

Reference:

Csillag, D. (2023) Generative AI for Data Science – Azure OpenAI JSON Mode. Available at: https://www.linkedin.com/pulse/generative-ai-data-science-azure-openai-json-mode-daniel-csillag-iztcc/?trackingId=TqwRSoZKStKFs8iofKtyTQ%3D%3D [Accessed: 12th January 2024].