In my previous blogs I discussed the various use cases through which organizations are adopting Generative AI. With Generative AI solutions, the shift is that anyone who can consume an API can infuse AI into their applications; you don’t need a data science team! It’s more about the application developers, and another important team is the Data Engineering team. If you want to know the nitty-gritty of the foundational models, then yes, the data science team is your go-to team.

Let’s understand the stack. Everyone talks about the models, be it OpenAI’s ChatGPT, Claude from Anthropic, or a plethora of other foundation models. But that is just the tip of the iceberg. For organizations, it’s all about how their data can be used with these models. What is my monitoring stack? How do I do LLMOps (for lack of a better word)? How do I scale, and what about my costs? And the most important items on the list: security and privacy!

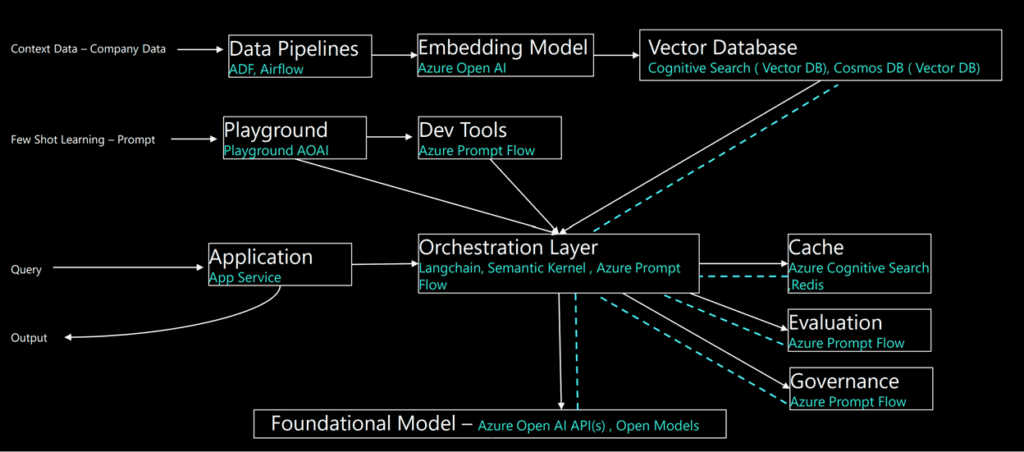

Let’s start with a reference architecture that a Generative AI solution built for an enterprise would encompass. I am going to share one based on the Azure stack:

Depicted using Azure services, building on the architecture from https://a16z.com/emerging-architectures-for-llm-applications/.

Let’s start at the bottom layer, the foundation model.

Generative AI foundational model:

Azure OpenAI:

When we talk about Generative AI foundational models, Microsoft has entered a partnership with OpenAI, wherein we get the goodness of OpenAI models within Azure.

What does this mean for our customers and partners? They can access the ChatGPT and GPT-4 models within their Azure tenant.

Azure has a pre-existing stack of AI services. Cognitive Services are pre-trained models, e.g., Speech to Text, Form Recognizer (OCR++), Vision services, and many more. Azure OpenAI services are part of the Cognitive Services stack.

What is part of the Azure OpenAI model family?

The Azure OpenAI model family includes GPT-4 models with context sizes ranging from 8K to 32K tokens, GPT-3.5 models with context sizes of 4K to 16K tokens, embedding models (text-embedding Ada), the Whisper model, DALL-E, and models that support fine-tuning.
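As a rough illustration of what these context sizes mean in practice, the sketch below checks whether a prompt plus the requested completion would fit within a model's context window. The model labels, the exact limits (reading "8K" as 8 × 1024 tokens, and so on), and the function name are assumptions made for illustration. The token count is supplied by the caller rather than computed with a real tokenizer.

```python
# Illustrative context-window sizes in tokens.
# Assumption: "8K" means 8 * 1024 tokens; the labels are made up for this sketch.
CONTEXT_WINDOW = {
    "gpt-4-8k": 8 * 1024,
    "gpt-4-32k": 32 * 1024,
    "gpt-3.5-4k": 4 * 1024,
    "gpt-3.5-16k": 16 * 1024,
}


def fits_context(model: str, prompt_tokens: int, max_completion_tokens: int) -> bool:
    """Return True if prompt plus completion stay within the model's window."""
    if prompt_tokens < 0 or max_completion_tokens < 0:
        raise ValueError("token counts must be non-negative")
    return prompt_tokens + max_completion_tokens <= CONTEXT_WINDOW[model]
```

A check like this could help decide when a longer document needs the larger variant of a model, or needs to be split into chunks first.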

These are models that are exposed as APIs and can be consumed through any application stack.
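To make "exposed as APIs" concrete, here is a minimal sketch that builds (but does not send) a chat completions request using only the Python standard library. The resource name, deployment name, key, and `api_version` value are placeholders, and the URL shape and `api-key` header reflect my understanding of the Azure OpenAI REST API; check the current documentation before relying on them.

```python
import json
import urllib.request


def build_chat_request(resource: str, deployment: str, api_key: str,
                       user_message: str,
                       api_version: str = "2023-05-15") -> urllib.request.Request:
    """Build (but do not send) a chat completions request for a deployment."""
    # Assumed URL shape: the resource and deployment names come from your Azure tenant.
    url = (
        f"https://{resource}.openai.azure.com/openai/deployments/"
        f"{deployment}/chat/completions?api-version={api_version}"
    )
    body = {"messages": [{"role": "user", "content": user_message}]}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )
```

Passing the returned object to `urllib.request.urlopen` would send it, which requires a real resource, deployment, and key. Because this is plain HTTP and JSON, the same call can be made from any application stack.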

Why do customers love Azure OpenAI?

For every customer, their data is their most important asset. While embracing Generative AI in their organization’s stack, one item is non-negotiable: the privacy and security of their data.

1. That is what Azure OpenAI promises to deliver. Spinning up an instance of Azure OpenAI is like having a private OpenAI instance in your Azure tenant.

2. Microsoft has published these Azure OpenAI guidelines:

Your input and output, your embeddings, and your training data:

· are NOT available to other customers.

· are NOT available to OpenAI.

· are NOT used to improve OpenAI models.

· are NOT used to improve any Microsoft or 3rd party products or services.

· are NOT used for automatically improving Azure OpenAI models for your use in your resource (The models are stateless unless you explicitly fine-tune models with your training data).

· Your fine-tuned Azure OpenAI models are available exclusively for your use.

The Azure OpenAI Service is fully controlled by Microsoft; Microsoft hosts the OpenAI models in Microsoft’s Azure environment and the Service does NOT interact with any services operated by OpenAI (e.g. ChatGPT, or the OpenAI API).

3. As we delve deeper into the architecture, we can see that the stack is not just the foundational models; many Lego blocks come together to build an end-to-end solution. That is something that Azure offers.

4. Foundational models are evolving at a very fast pace. We see new models, even from OpenAI, arriving every quarter (often faster than that). For an enterprise, completing the entire development and test lifecycle with newer models takes a lot of effort and money. Azure OpenAI models are supported for a longer time, giving organizations time to think through and adapt to the newer models if need be.

5. Azure also provides enterprise-grade security, including private VNet support and role-based access control.

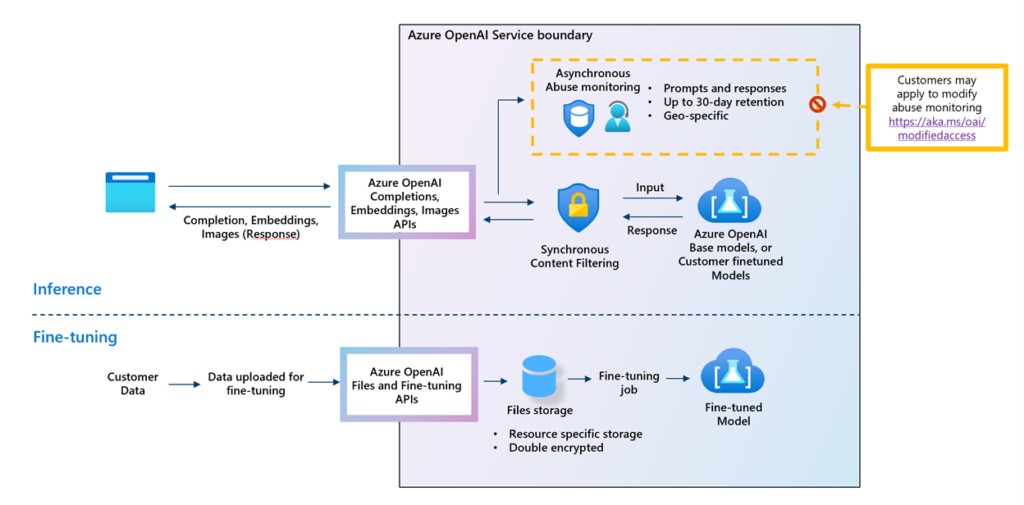

6. With large language models, content moderation is very important so that harmful content does not seep into or out of the experience. Azure OpenAI models have default content moderation baked in: every input provided to the model and every output returned by the model passes through a content moderation framework. If anything inappropriate is detected, the outcome is an error.

The diagram above explains the flow of a call from the user application with its instructions, how those instructions are moderated, and how the response from Azure OpenAI is moderated.

These are some of the reasons why Azure OpenAI is gaining huge traction.
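The moderation flow described in point 6 (filter the input, call the model, filter the output, and return an error if either is flagged) can be sketched roughly as follows. This is a toy illustration, not how the Azure service is implemented: `BLOCKED_TERMS`, `is_flagged`, `ContentFilterError`, and `moderated_call` are all hypothetical names, and a keyword check stands in for a real moderation framework.

```python
from typing import Callable

# Hypothetical blocklist standing in for a real moderation service.
BLOCKED_TERMS = {"forbidden"}


class ContentFilterError(Exception):
    """Raised when the prompt or the completion is flagged."""


def is_flagged(text: str) -> bool:
    """Toy check: flag text containing any blocked term (case-insensitive)."""
    lowered = text.lower()
    return any(term in lowered for term in BLOCKED_TERMS)


def moderated_call(prompt: str, model: Callable[[str], str]) -> str:
    """Filter the input, call the model, then filter the output."""
    if is_flagged(prompt):
        raise ContentFilterError("prompt was flagged")
    completion = model(prompt)
    if is_flagged(completion):
        raise ContentFilterError("completion was flagged")
    return completion
```

Here `model` is any callable that maps a prompt to a completion, so the wrapper can be exercised with a stub function. An application calling a moderated endpoint would similarly need to handle the error case on both the request and the response side.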

Support for other foundational models:

Azure also supports other foundational models, such as the Llama 2 family, Databricks Dolly, and Falcon, to name a few. Llama 2 is the next generation of large language models (LLMs) developed and released by Meta.

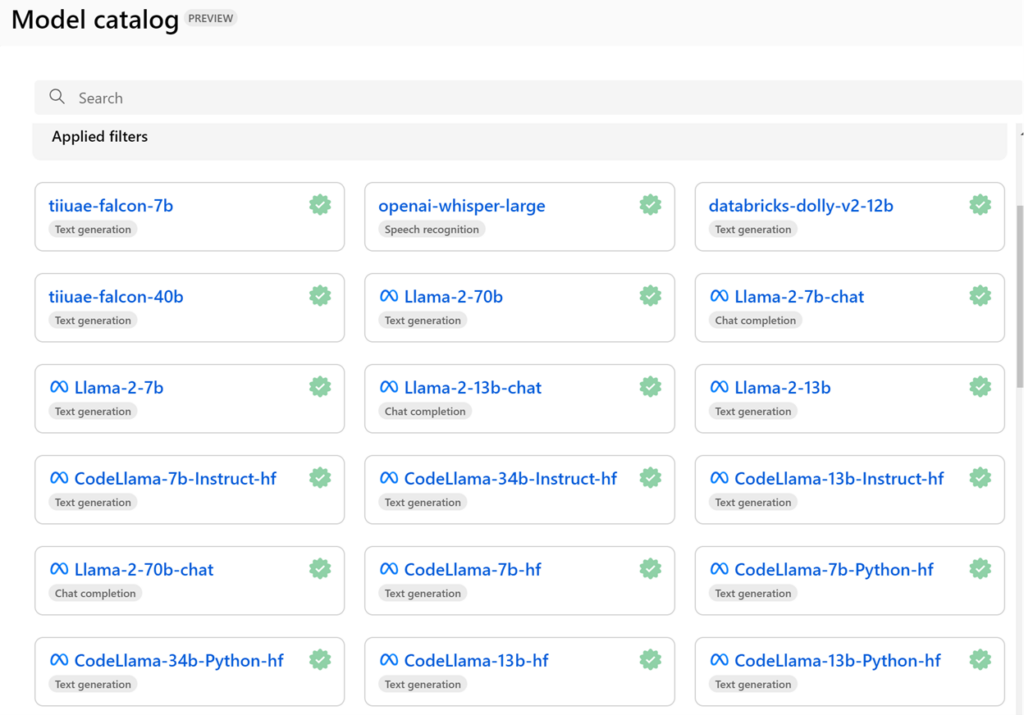

These models are supported as part of the Azure Machine Learning experience; the model catalog lists them:

Azure Machine Learning > Model Catalog

This diagram depicts the Azure Machine Learning experience, showcasing other foundational models that can be used within the Azure platform.

You can view the model details as well as sample inputs and outputs for any of these models, by clicking through to the model card. The model card provides information about the model’s training data, capabilities, limitations, and mitigations that the provider has already built in. With this information, you can better understand whether the model is a good fit for your application.

To summarize, I discussed how to think about the technical stack for building an end-to-end Generative AI solution within an organization, and delved deeper into foundational models and how Azure is democratizing AI using state-of-the-art LLMs (Large Language Models).

In the next blog, let’s uncover the other layers. If you have any feedback or comments, do reach out!

This blog is part of Azure AI Week. Check out more great Azure AI blogs and webinars today.

About the Author:

Poonam Sampat is an AI enthusiast. She is currently the Data & AI Partner Lead at Microsoft, working with partners and customers in the ASEAN region to enable their Data & AI journey on the cloud. Prior to Microsoft, Poonam was a Senior Architect at Honeywell, building analytical solutions in the Oil and Gas vertical, including scalable architectures for Internet of Things data ingestion and transformation, and Machine Learning models to deliver business insights. She has also worked with Goldman Sachs, architecting solutions for investment bankers to help streamline their processes. Poonam is an ISB (Indian School of Business) alum, passionate about analytics and how analytics can shape industries.

Sampat, P. (2023). Generative AI Tech Stack. Available at: https://www.linkedin.com/pulse/generative-ai-tech-stack-poonam-sampat-0xblc/?trackingId=bndiSZc3SHqqSz0v6Yif1Q%3D%3D [Accessed: 12th January 2024].