Introduction

At the recent Build conference, Microsoft showed how easy it is to make your own chatbot assistant based on the information you have by training the existing models from the Azure OpenAI service. In this article, we will take the use case of EV cars from a car company and train the assistant to answer only questions within the scope of that company’s EVs. All other questions should not be answered. Let’s see how we can achieve it.

Now that the Azure OpenAI service is in preview and available to the general public, we can use it to add intelligence to our applications and make them more powerful. In this article, we will go through the following topics.

- Creating the Azure OpenAI service in your Azure portal.

- Creating an instance from the existing service models.

The following additional services also need to be created.

- Creating Azure Blob storage: To store the training data.

- Creating the Azure Cognitive Search Index: To index the content from the training data.

- Creating an instance of the prebuilt model and training it with the training data.

Creating Storage

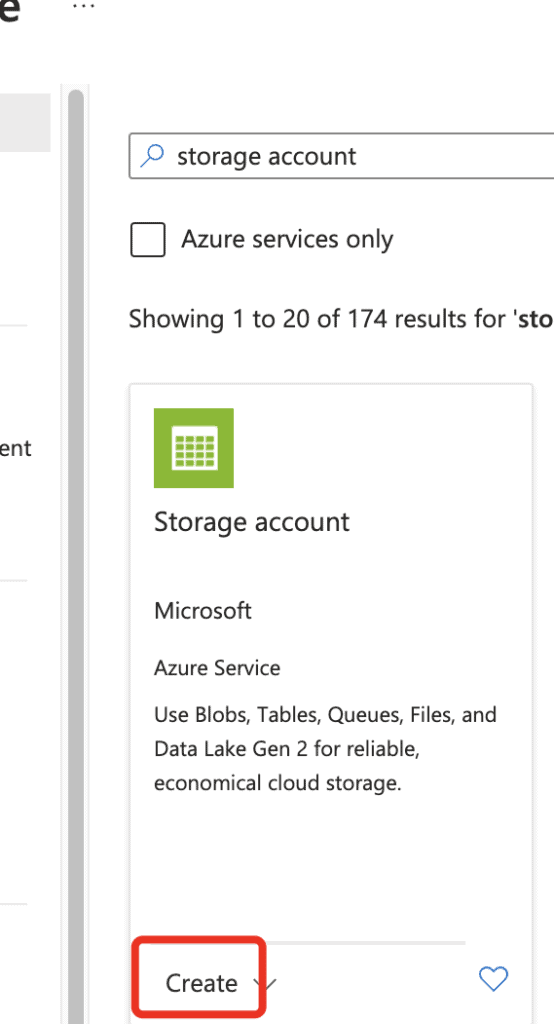

To store the training data, we need a storage account. Follow the steps below to create one.

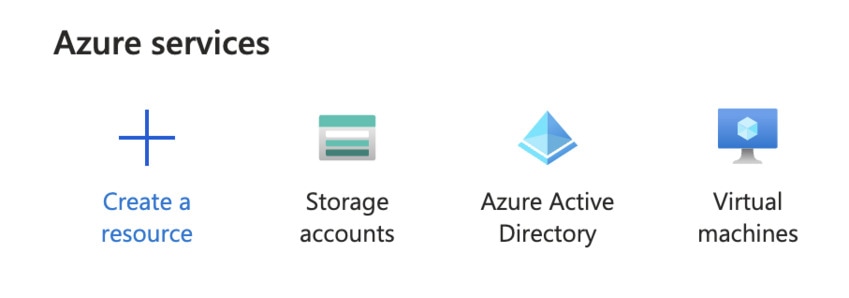

Step 1. Go to the Azure portal (https://portal.azure.com) and sign in with your developer subscription or your own subscription.

Step 2. Click on Create a resource.

Step 3. Search for ‘Storage account’ and click on ‘Create’.

Step 4. Choose the subscription and create a new resource group. In this case, I have named it teamsai-rg.

- Storage account name: This must be globally unique. In this case, I have entered trainingdatavayina1.

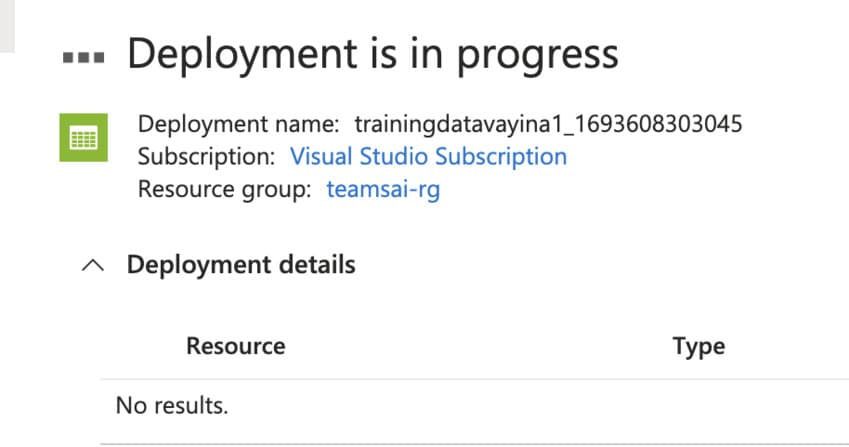

Step 5. Click on ‘Review and Create’, then click on ‘Create’.

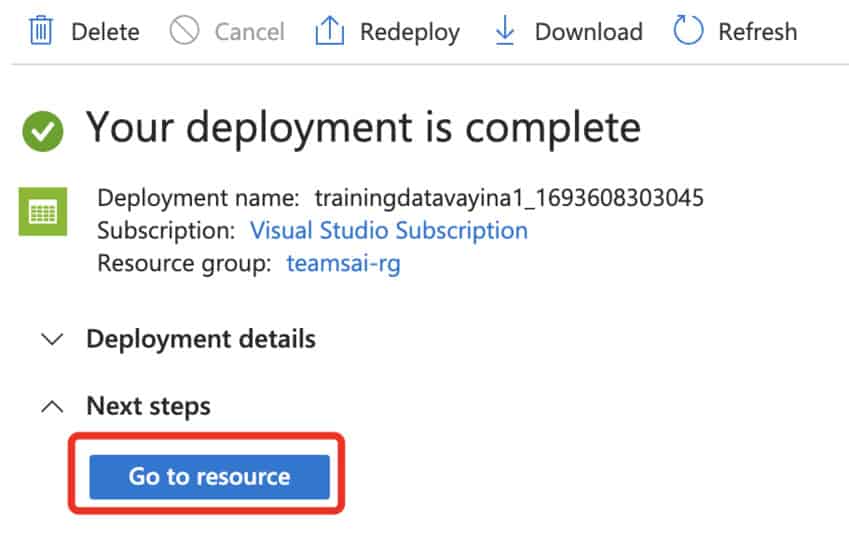

Step 6. Once the deployment is complete, you should see the message ‘Your deployment is complete’. Click on ‘Go to resource’.

Step 7. Validate the storage account name and other details.

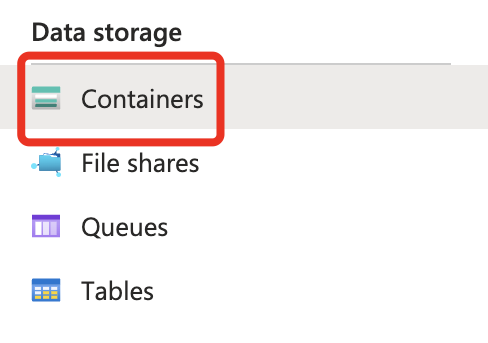

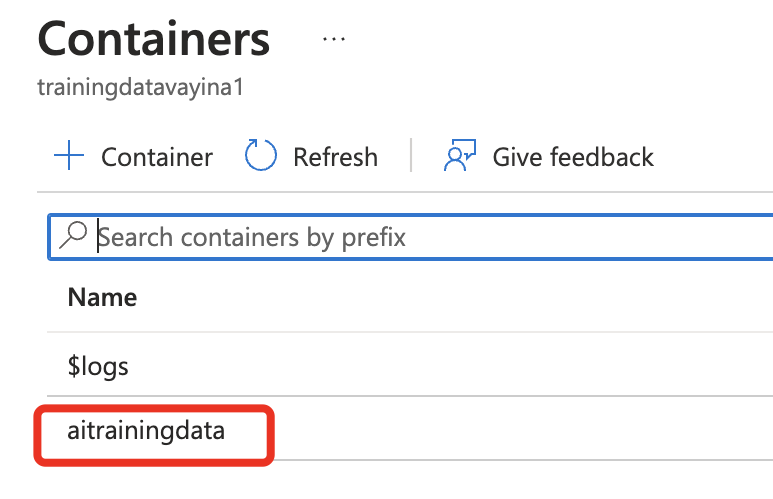

Step 8. On the quick launch, under ‘Data storage’ click on ‘Containers’.

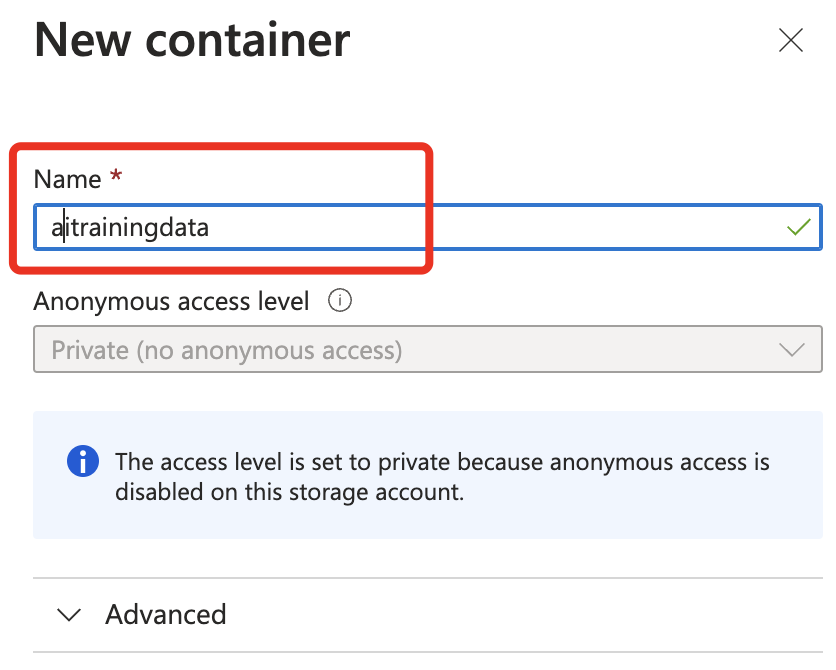

Step 9. Create a new container and name it ‘aitrainingdata’. Click on ‘Create’.

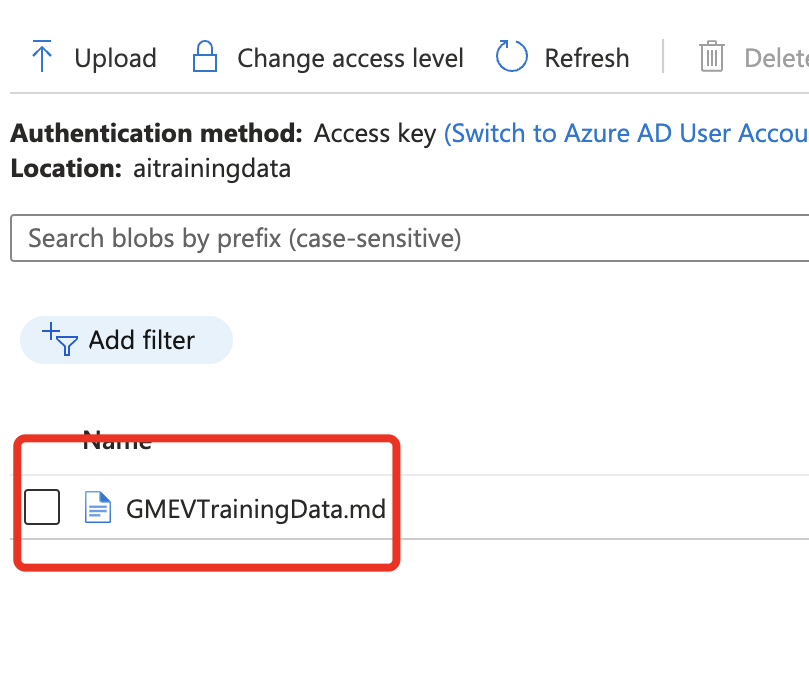

Step 10. Click on the container ‘aitrainingdata’ and upload the training data markdown file. In this case, I have uploaded the file GMEVTrainingData.md. This is sample data that is attached to this article.

The above steps complete the creation of the storage account and the configuration of the container with the training data.
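
The portal rejects names that break Azure’s naming rules, so it can help to check a name before typing it in. Below is a minimal sketch of such a check. The function names are hypothetical, and the rules encoded are the commonly documented ones (storage account: 3 to 24 lowercase letters and digits; container: 3 to 63 lowercase letters, digits, and single hyphens, starting and ending with a letter or digit). The URL pattern assumes the public Azure cloud.

```python
import re

# Storage account names: 3-24 characters, lowercase letters and digits only.
_ACCOUNT_RE = re.compile(r"[a-z0-9]{3,24}")

# Container names: lowercase letters, digits, and hyphens; every hyphen must
# sit between two letters or digits (so no leading, trailing, or double hyphens).
_CONTAINER_RE = re.compile(r"[a-z0-9](?:-?[a-z0-9])*")


def is_valid_storage_account_name(name: str) -> bool:
    """Check a storage account name against the documented naming rules."""
    return _ACCOUNT_RE.fullmatch(name) is not None


def is_valid_container_name(name: str) -> bool:
    """Check a container name (3-63 characters) against the naming rules."""
    return 3 <= len(name) <= 63 and _CONTAINER_RE.fullmatch(name) is not None


def blob_url(account: str, container: str, blob_name: str) -> str:
    """Build the URL at which an uploaded blob is addressed (public cloud)."""
    return f"https://{account}.blob.core.windows.net/{container}/{blob_name}"


print(is_valid_storage_account_name("trainingdatavayina1"))
print(is_valid_container_name("aitrainingdata"))
print(blob_url("trainingdatavayina1", "aitrainingdata", "GMEVTrainingData.md"))
```

The names used in this article pass both checks, while a name such as ‘TeamsAI-rg’ would be rejected as a storage account name because of the uppercase letters and the hyphen.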

Creating Azure OpenAI service

Creating the Azure OpenAI service is a straightforward process. Follow the steps below.

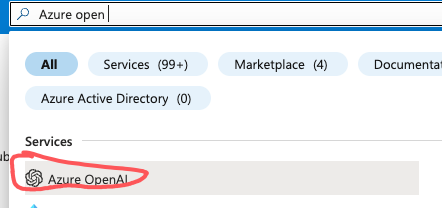

Step 1. Go to the Azure portal (https://portal.azure.com) and search for ‘Azure OpenAI’.

Step 2. The first time, you will see a screen saying there are no Azure OpenAI resources to display, along with a button labeled ‘Create Azure OpenAI’. Click on it.

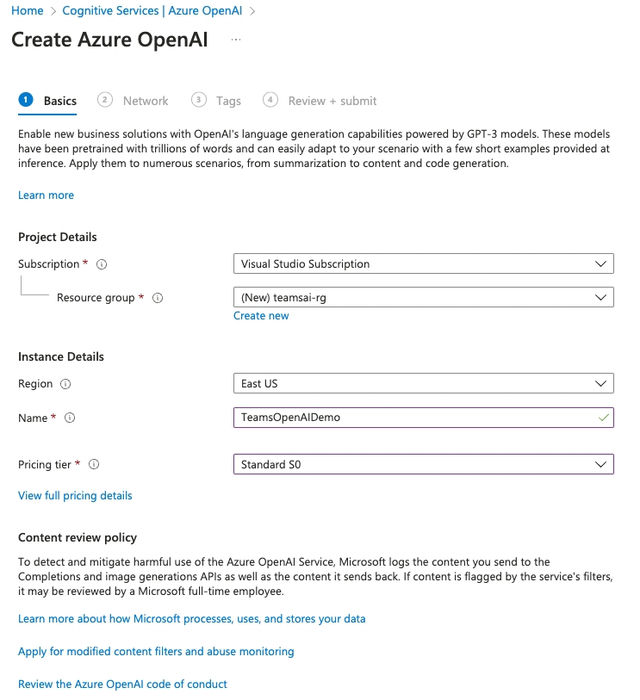

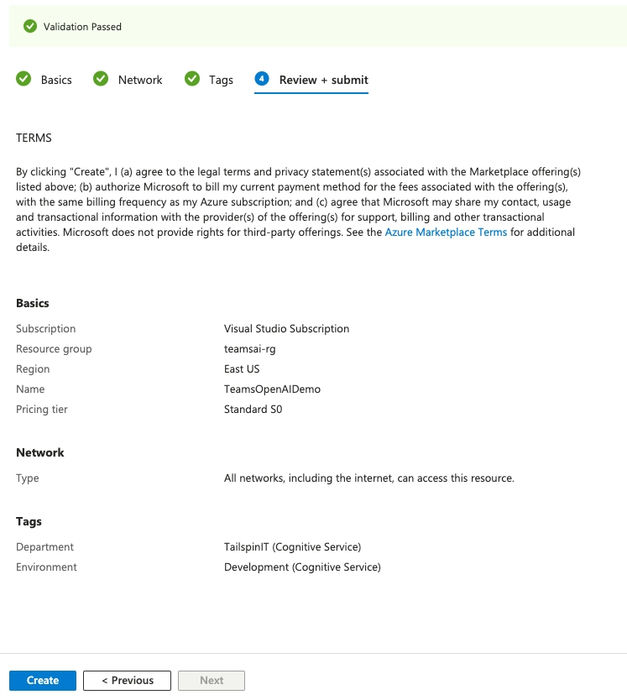

Step 3. Enter the following details in the ‘Basics’ tab as shown in the screen capture.

- Subscription: your subscription name

- Resource group: I have chosen ‘teamsai-rg’. It can be anything.

- Region: Select the region that is closest to you. I have chosen ‘East US’.

- Name: I have named it ‘TeamsOpenAIDemo’. You can choose your own name.

- Pricing Tier: S0

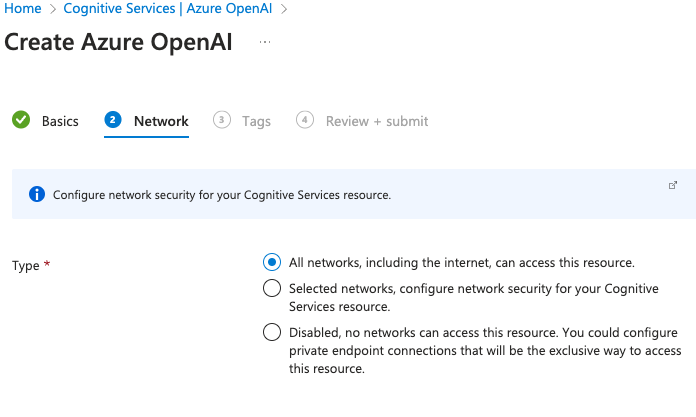

Step 4. In the ‘Networks’ tab, leave the default, which is ‘All networks, including the internet, can access this resource’.

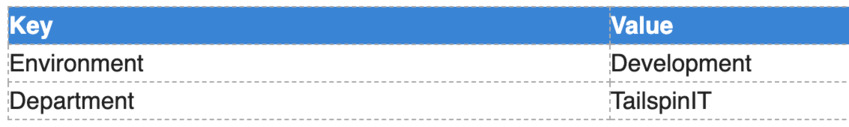

Step 5. In the ‘Tags’ section, I have added my own tags. You can leave the defaults and go to the next section. Microsoft recommends tagging your deployments, because tags help in managing resources by applying policies and tracking costs.

Step 6. In the ‘Review + Submit’ section, click on ‘Create’.

Step 7. The deployment takes around 5 to 7 minutes. Once it is complete, you will see the message ‘Your deployment is complete’. Click on ‘Go to resource’.

The above steps explain how to create the Azure OpenAI service using the Azure portal.

Creating Azure Cognitive Search

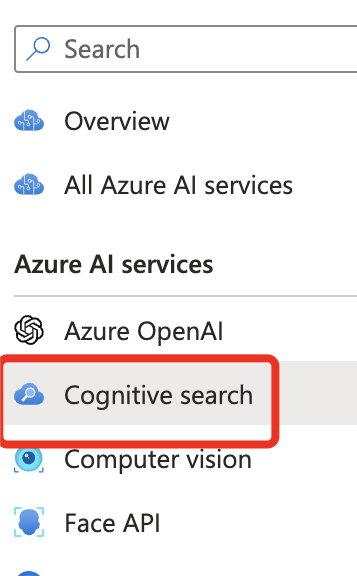

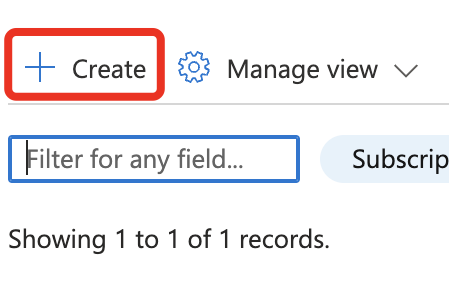

Step 1. From Azure AI services, click on ‘Cognitive search’ and then on ‘Create’.

Step 2. Choose the resource group that you previously created for this task. In this case, it is ‘teamsai-rg’.

- The service name needs to be globally unique. Here I have entered it as ‘aitrainingsearchstandard’.

- Pricing tier: Select ‘Standard’, since the prebuilt model integration supports only the Standard tier.

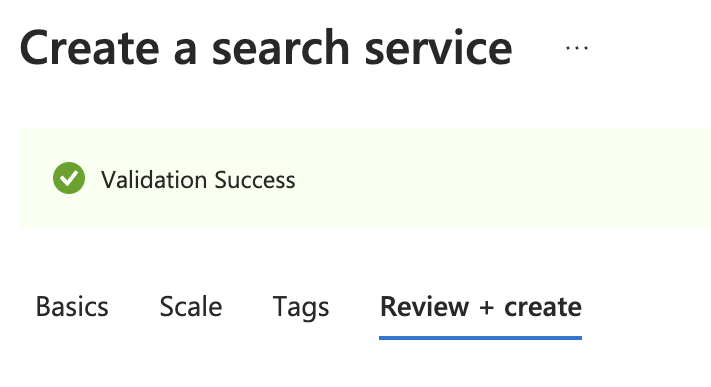

Step 3. Click on ‘Review and create’, and once the message ‘Validation Success’ appears, click on ‘Create’.

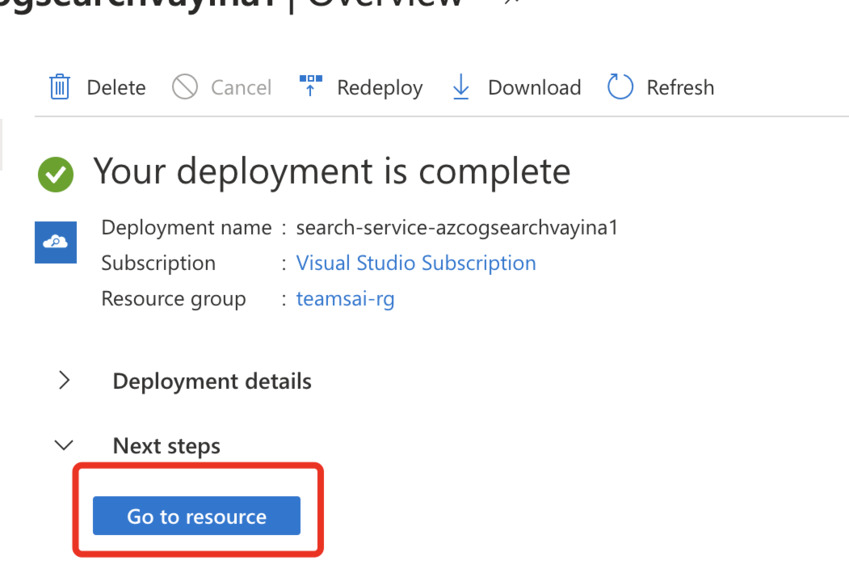

Step 4. Give it a minute or two. You should then see a message saying the deployment is complete.

Step 5. Validate the URL, pricing tier, and other properties to make sure the service is created as per your needs.

Creating the Index

To create the index, we need to import the data from the data source. Since the training data is already stored in Azure storage, all we need to do is connect the storage account to cognitive search. After a few minutes, the index is created.

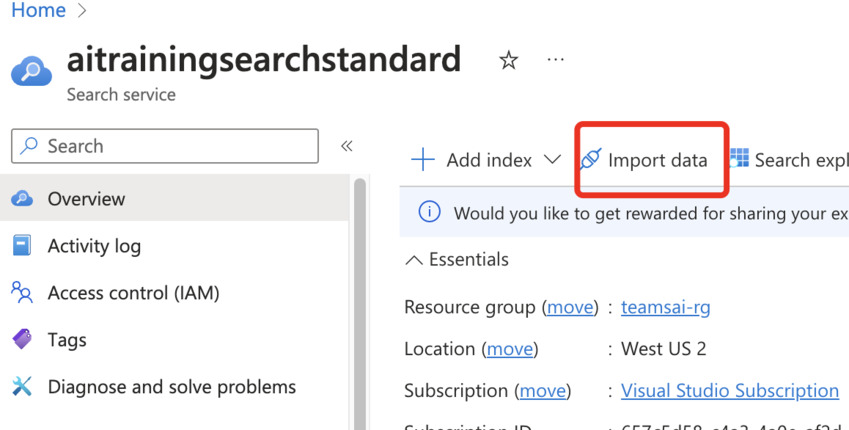

Step 1. From the Cognitive search ‘Overview’ page, click on ‘Import data’.

Step 2. In the ‘Connect to your data’ page, select ‘Azure Blob Storage’.

- Data Source: Azure Blob Storage

- Data source name: It can be anything. I have named it ‘aitrainingdata’.

- Data to extract: Leave default. The default option is ‘Content and metadata’.

- Parsing mode: Default.

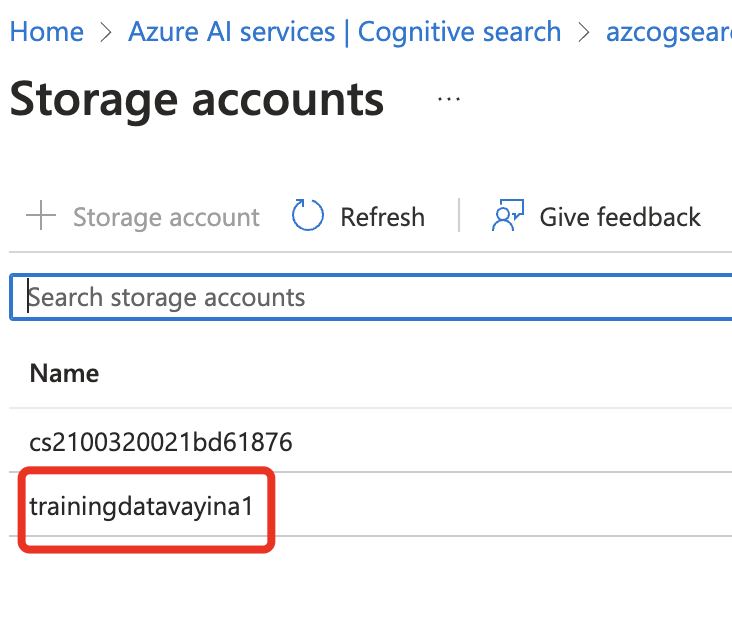

- Connection string: click on ‘Choose an existing connection’. Select the existing storage account and existing container.

- Click ‘Select’ at the bottom of the page.

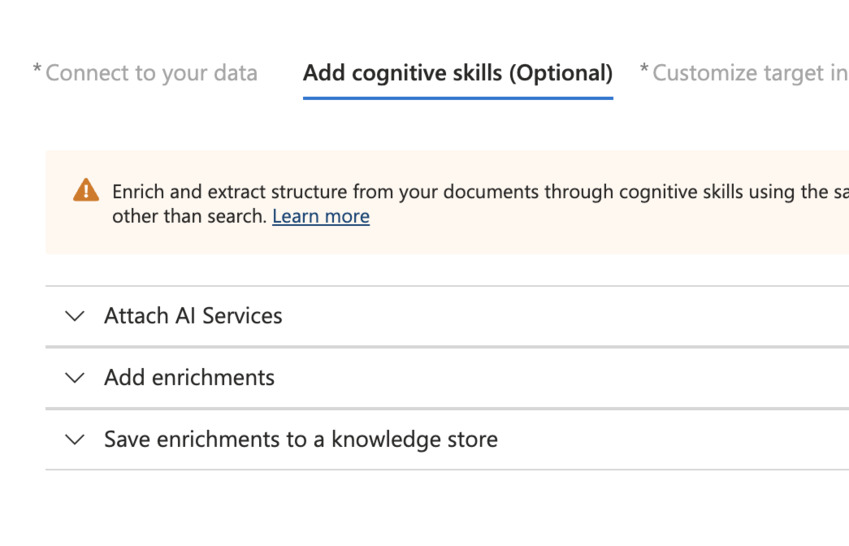

Step 3. In the next ‘Add cognitive skills’ section, leave the defaults.

Step 4. Next, click on the button ‘Skip to: Customize target index’.

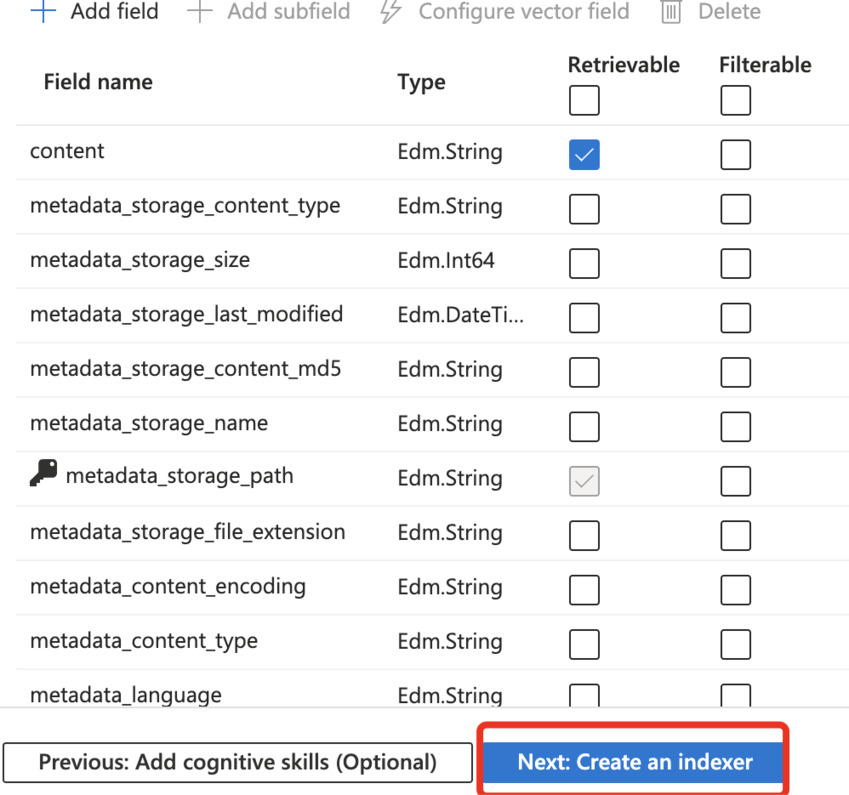

Step 5. Leave the defaults and click on ‘Create an indexer’.

Step 6. In the ‘Create an indexer’ section, leave all the options default.

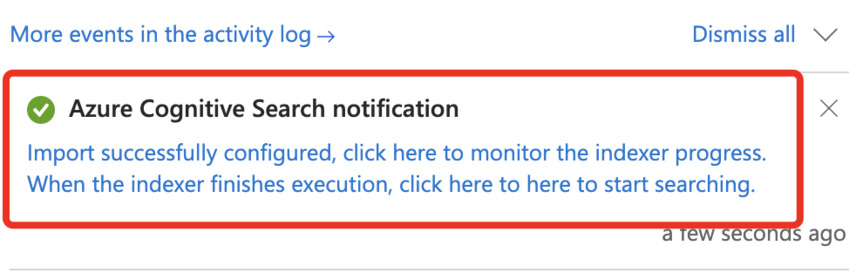

Step 7. Finally, click on ‘Submit’.

Please note that once you click on ‘Submit’, the indexing takes around 5 to 7 minutes to complete.

Checking the Index

Step 1. Under ‘Search management’ in the quick launch, click on ‘Indexes’.

Step 2. Click on the index name; in this case, it is ‘azureblob-index’.

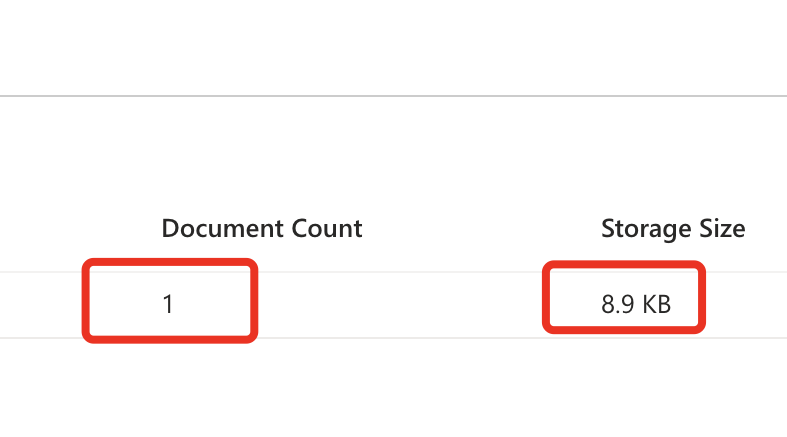

Note. The easiest way to check whether the indexing succeeded is to look at the document count and storage size, both of which should be non-zero. Since I am indexing only one document, the document count is 1 and the storage size is 8.9 KB.

Step 3. Type a query string that is present in the training data. In this case, I am entering ‘EV’.

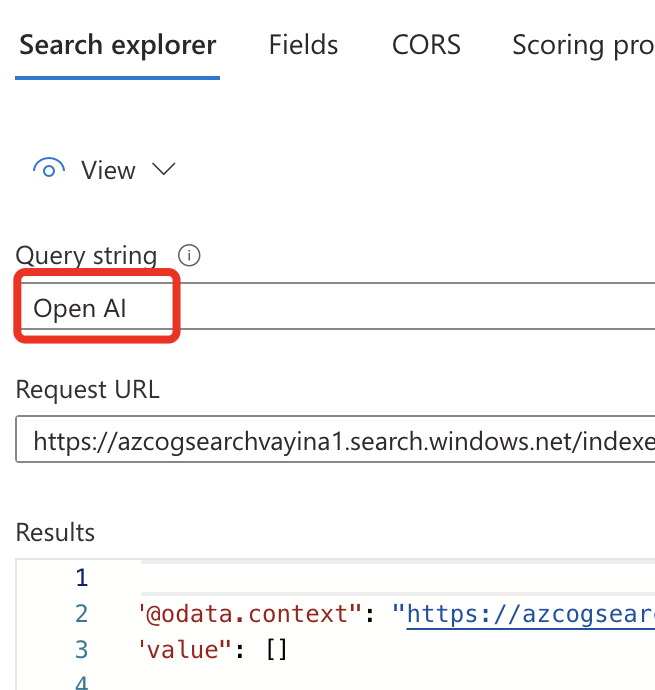

Step 4. Enter a term that is not present in the training data. I am entering ‘Open AI’. You can see that the returned value is empty, since the term is not present in the data.

The above steps confirm that the index was created successfully.

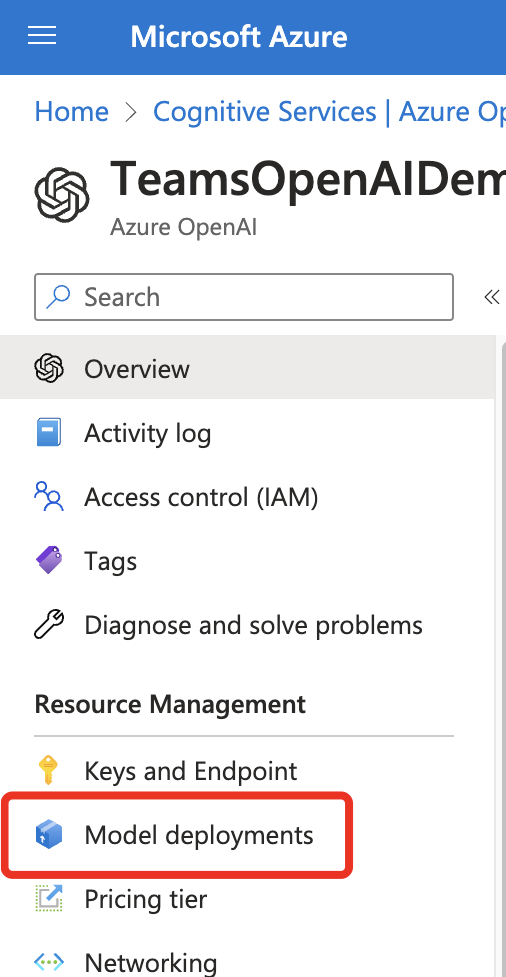
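
The same check can also be scripted against the search service’s REST endpoint. Below is a minimal sketch that only builds the request, so it runs without credentials. The API version and the query key placeholder are assumptions; the service and index names are the ones used in this article.

```python
import json
import urllib.request

SEARCH_API_VERSION = "2020-06-30"  # assumption: a stable Search REST API version


def build_search_request(service, index, api_key, term):
    """Build (but do not send) a POST request that searches the index for a term."""
    url = (
        f"https://{service}.search.windows.net/indexes/{index}"
        f"/docs/search?api-version={SEARCH_API_VERSION}"
    )
    # "count": True asks the service to report the total number of matches.
    body = json.dumps({"search": term, "count": True}).encode("utf-8")
    headers = {"Content-Type": "application/json", "api-key": api_key}
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def hit_count(response_json):
    """Count the documents returned in the 'value' array of a search response."""
    return len(response_json.get("value", []))


request = build_search_request(
    "aitrainingsearchstandard", "azureblob-index", "<your-query-key>", "EV"
)
print(request.get_method(), request.full_url)
# To actually run the query (needs a real key):
#   response = json.load(urllib.request.urlopen(request))
#   print(hit_count(response))
```

With a real key, a term from the training data such as ‘EV’ should give a non-zero hit count, and a term that is absent should give zero.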

Creating the instance of the Service Model

In this section, we will see how we can take an existing service model ‘as is’ and observe its results. For simplicity, I am choosing gpt-35-turbo here.

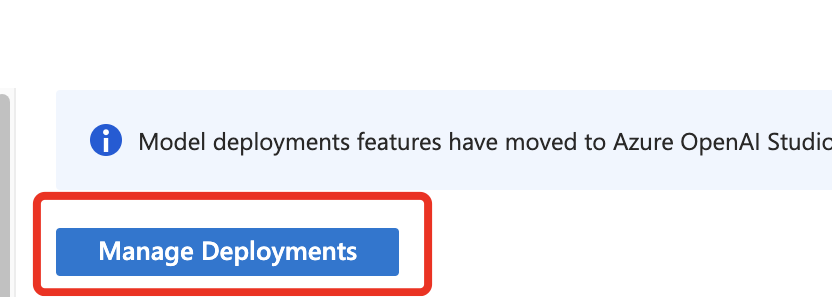

Step 2. Click on ‘Manage Deployments’.

Step 3. You will now be taken to the portal at https://oai.azure.com.

Step 4. From the ‘Deployments’ page, click on ‘Create new deployment’.

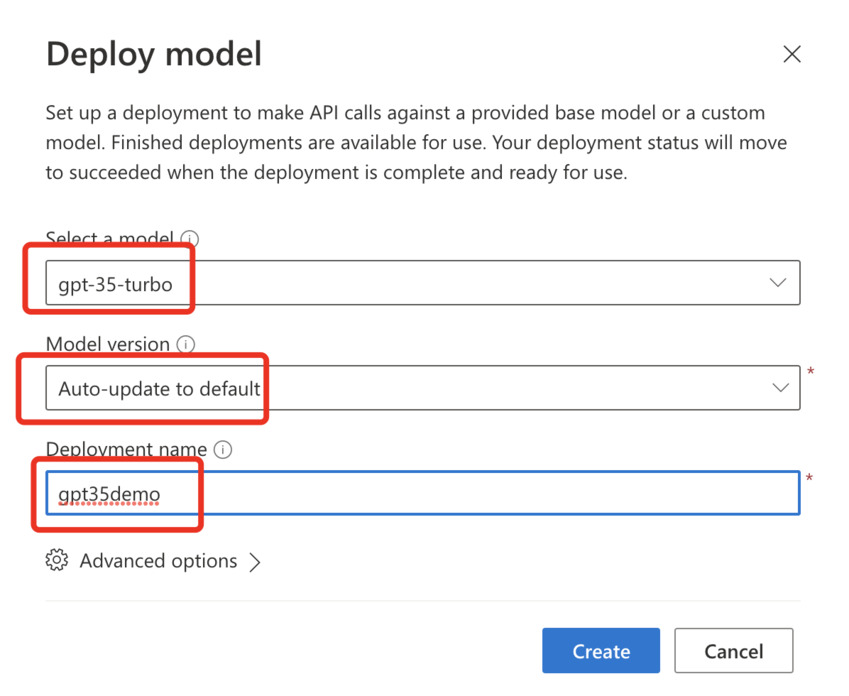

Step 5. For this article, I chose gpt-35-turbo, named the deployment gpt35demo, left the model version at its default, and clicked on ‘Create’.

Step 6. After a couple of minutes, you will see the message ‘Deployment created successfully’.

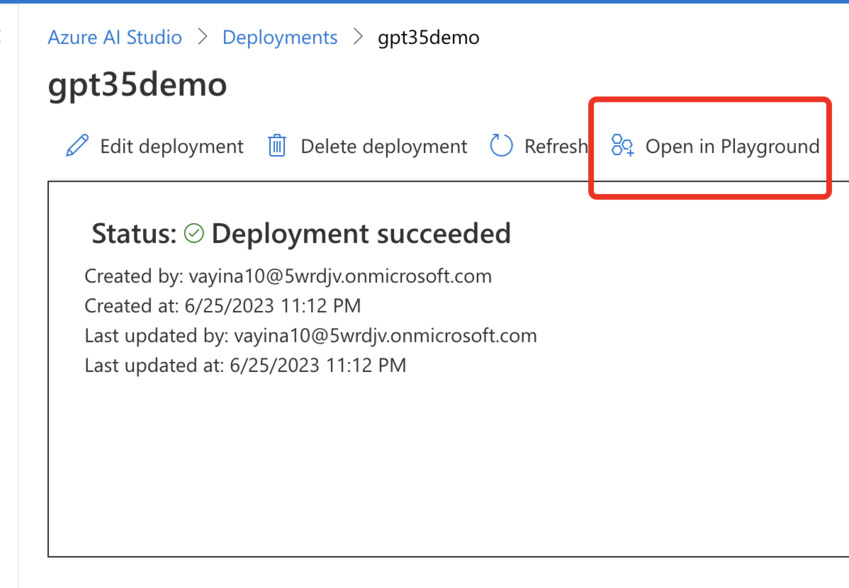

Step 7. Click on the model instance, in this case ‘gpt35demo’. You will be taken to the model page, which should look like the screen below. It shows the properties and status of the deployment and provides an ‘Open in Playground’ link, where you can test the model and train it for your specific needs.

Step 8. Click on ‘Open in Playground’. This will give you options to check how the AI Conversational model works.

Interacting with Service Model in ‘As-Is’ mode: In this case, we are using the service ‘As Is’ without any training and will observe the results.

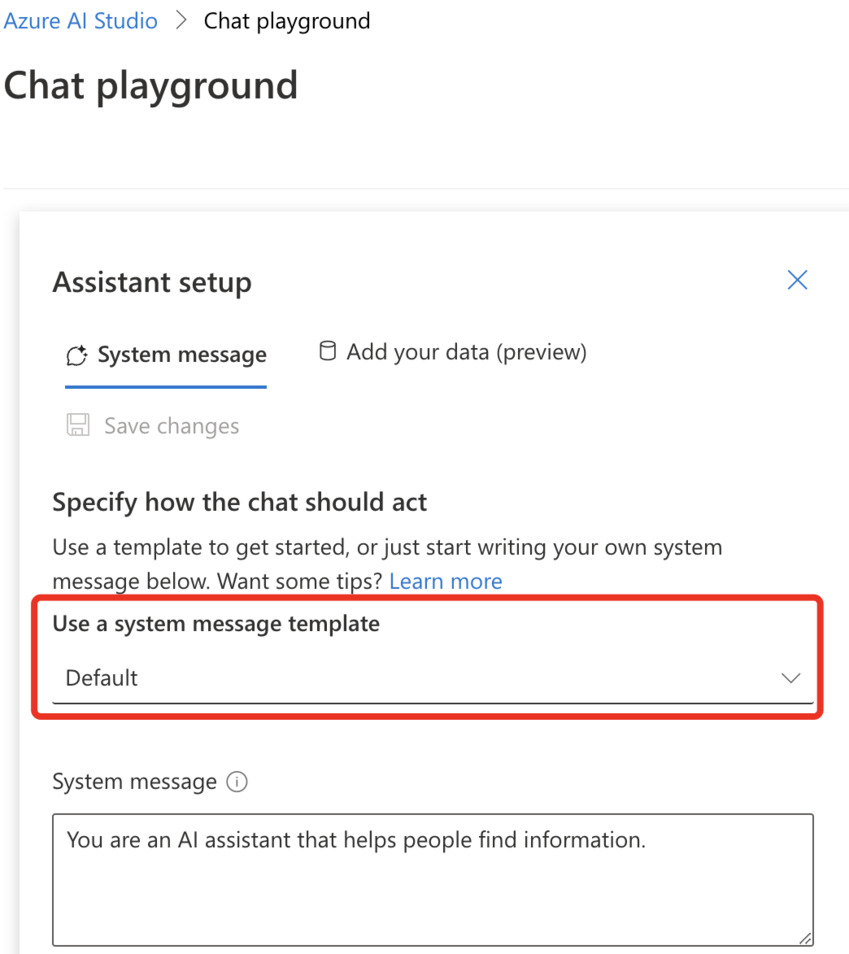

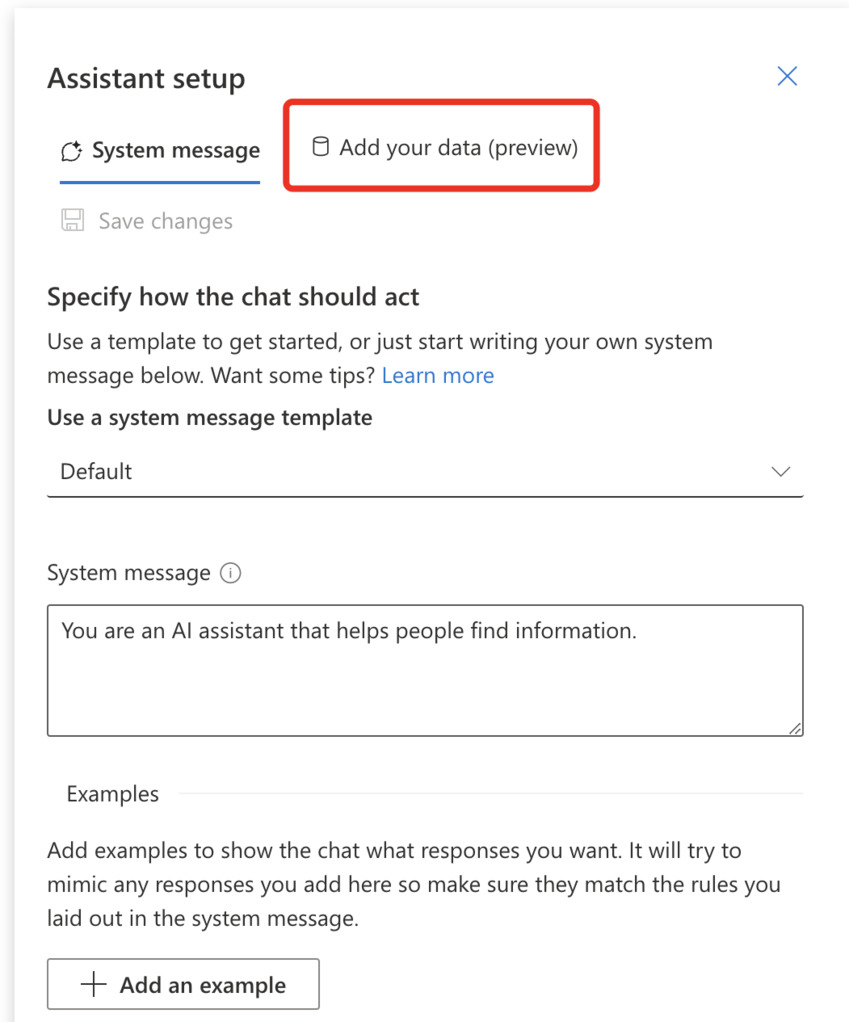

Step 1. In the Playground ‘Assistant setup’ section, select the ‘Default’ template under the ‘Use a system message template’.

Step 2. In the ‘Chat session’, enter the following questions and observe the result set.

- User Message: Hello

It gives the default response.

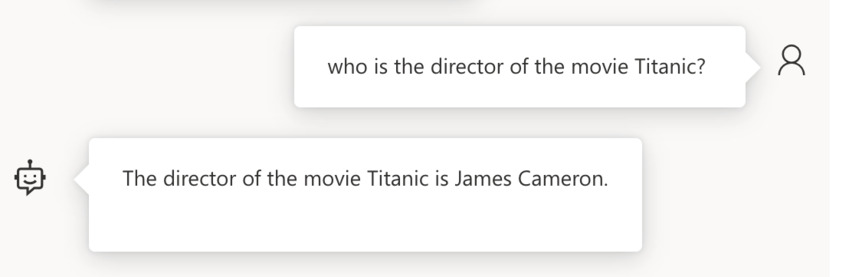

- User Message: Who is the director of the movie ‘Titanic’?

- User Message: What are GM electric cars in the market?

As you can see, the model gives mostly relevant information for the questions asked. This completes the interaction with the out-of-the-box (OOTB) service model GPT-35-Turbo.
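
The questions asked in the Playground can also be sent to the deployment over REST. Below is a minimal sketch that builds such a request without sending it. It assumes the endpoint follows the default https://<resource-name>.openai.azure.com pattern and uses an API version that may not be the latest; the system message is assumed to match the Playground’s ‘Default’ template, and the key is a placeholder.

```python
import json
import urllib.request

CHAT_API_VERSION = "2023-05-15"  # assumption: use a version your resource supports
DEFAULT_SYSTEM_MESSAGE = "You are an AI assistant that helps people find information."


def build_chat_request(resource, deployment, api_key, user_message,
                       system_message=DEFAULT_SYSTEM_MESSAGE):
    """Build (but do not send) a chat completions request for a deployment."""
    url = (
        f"https://{resource.lower()}.openai.azure.com/openai/deployments/"
        f"{deployment}/chat/completions?api-version={CHAT_API_VERSION}"
    )
    payload = {
        "messages": [
            {"role": "system", "content": system_message},
            {"role": "user", "content": user_message},
        ]
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


request = build_chat_request(
    "TeamsOpenAIDemo", "gpt35demo", "<your-api-key>",
    "What are GM electric cars in the market?",
)
print(request.full_url)
# To actually send it (needs a real key): urllib.request.urlopen(request)
```

The deployment name in the URL is the name given in Step 5 (gpt35demo), not the underlying model name.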

Training the service model

Azure OpenAI comes with several families of service models.

- GPT-4

- GPT-3

- DALL-E

- Codex

- Embeddings

In brief, GPT-4 and GPT-3 understand natural language and respond to queries in a fluid, conversational format. DALL-E understands natural language and generates images. Codex understands natural language and translates it into code. Embeddings are a special format of data representation that machine learning models can readily use. To learn more about the service models, please refer to the references section.

For this demo, I have chosen the gpt-35-turbo model to check the conversation. Note that the earlier GPT-3 models are text-in, text-out: the model accepts a prompt string and returns a completion string. The gpt-35-turbo model, by contrast, is conversation-in, message-out: it accepts a conversation, understands the intent, and returns a message.
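
The difference between the two styles shows up in the shape of the request and response. Below is a small sketch that contrasts them; the helper names are hypothetical, and the sample responses are hand-written placeholders rather than real model output.

```python
def completion_payload(prompt, max_tokens=100):
    """Text in: a completions-style request carries one prompt string."""
    return {"prompt": prompt, "max_tokens": max_tokens}


def chat_payload(history, user_message):
    """Conversation in: a chat request carries the whole list of messages."""
    return {"messages": list(history) + [{"role": "user", "content": user_message}]}


def completion_text(response):
    """Text out: the completion is a plain string."""
    return response["choices"][0]["text"]


def chat_text(response):
    """Message out: the reply is a message object with a role and content."""
    return response["choices"][0]["message"]["content"]


history = [{"role": "system", "content": "You are a helpful assistant."}]
print(completion_payload("Hello"))
print(chat_payload(history, "Hello"))

# Hand-written placeholder responses, only to show where the text lives.
print(completion_text({"choices": [{"text": "Hi there."}]}))
print(chat_text({"choices": [{"message": {"role": "assistant", "content": "Hi there."}}]}))
```

Because the chat format carries the earlier turns along with the new message, the model can take the conversation so far into account when it replies.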

Use Case

Let’s try to build a use case. My specific use case is to use the GPT-35-Turbo deployment that we created in the previous section, but I want the chat service to answer only questions about a specific application. Any question outside that scope should not be answered; instead, the service should respond that it cannot answer the question. Let us see how we can achieve this.

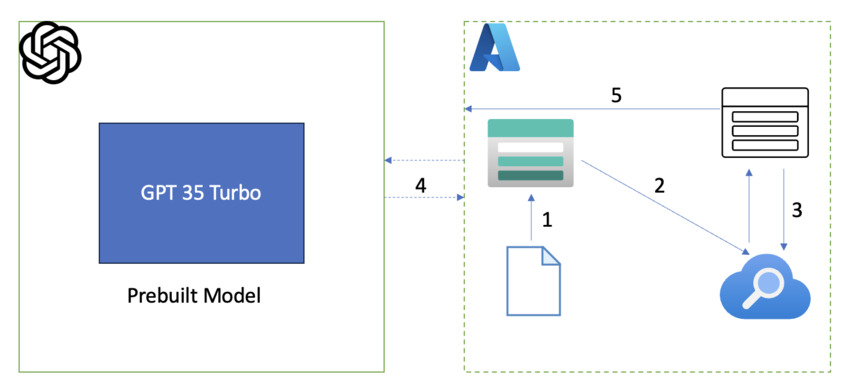

Below is the architecture for the solution that we are trying to build.

- Upload the file into Azure Storage

- Azure Cognitive Search (ACS) gets the file from Azure Storage

- ACS builds an index out of it.

- The prebuilt model connects with the index to get the response.

- The user interacts with the prebuilt model.

In the previous sections, we have already configured the storage account and the cognitive search. All that remains is integrating these services with the Azure OpenAI models. Let’s go to the following section for those integration steps.
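
To make the flow concrete, here is a toy, in-memory sketch of the same idea: files are indexed by word, a question is matched against the index, and the assistant declines when nothing matches. This is only an illustration under simplifying assumptions and not how Azure implements it; the function names, the stop-word list, and the placeholder file content are all hypothetical.

```python
import re

STOP_WORDS = {"a", "an", "are", "in", "is", "of", "the", "what", "who"}
NOT_FOUND = "The requested information is not found."


def tokenize(text):
    """Lowercase the text and keep the words that are not stop words."""
    return [w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOP_WORDS]


def build_index(files):
    """Map each word to the names of the files that contain it."""
    index = {}
    for name, content in files.items():
        for word in tokenize(content):
            index.setdefault(word, set()).add(name)
    return index


def answer(index, question):
    """Answer only when the index has matching content; otherwise decline."""
    sources = sorted(
        {name for word in tokenize(question) for name in index.get(word, ())}
    )
    if not sources:
        return NOT_FOUND
    return "Answer based on: " + ", ".join(sources)


# Placeholder content standing in for the uploaded training file.
files = {"GMEVTrainingData.md": "Placeholder text about EV battery range."}
index = build_index(files)
print(answer(index, "What are GM car battery ranges?"))
print(answer(index, "Who is the director of the movie Titanic?"))
```

In the real solution, the lookup is done by the cognitive search index and the wording of the answer comes from the model, but the decision is the same: no matching content, no answer.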

Steps

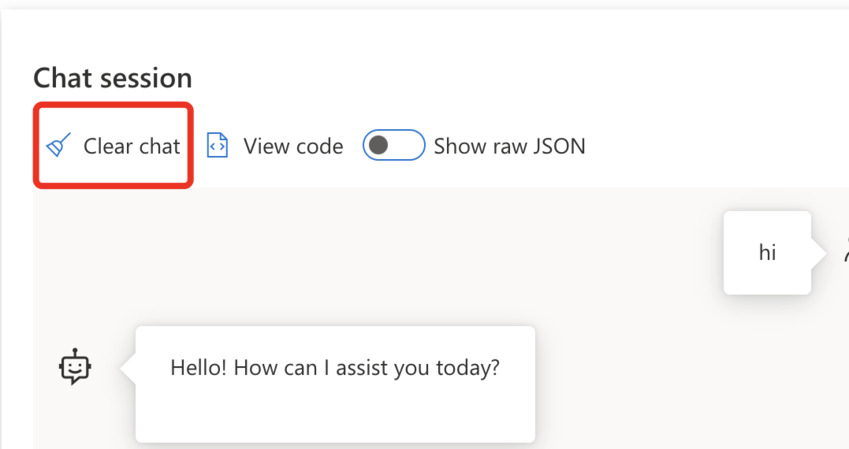

Step 1. If you are continuing from the previous section, clear the chat so that no previous responses are present.

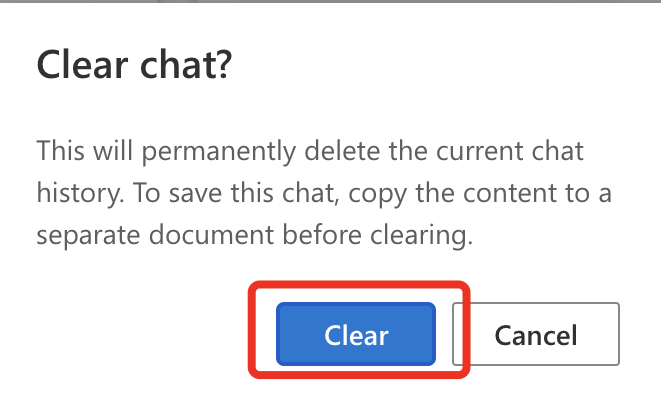

You will see something similar to the screen below. Click on ‘clear’.

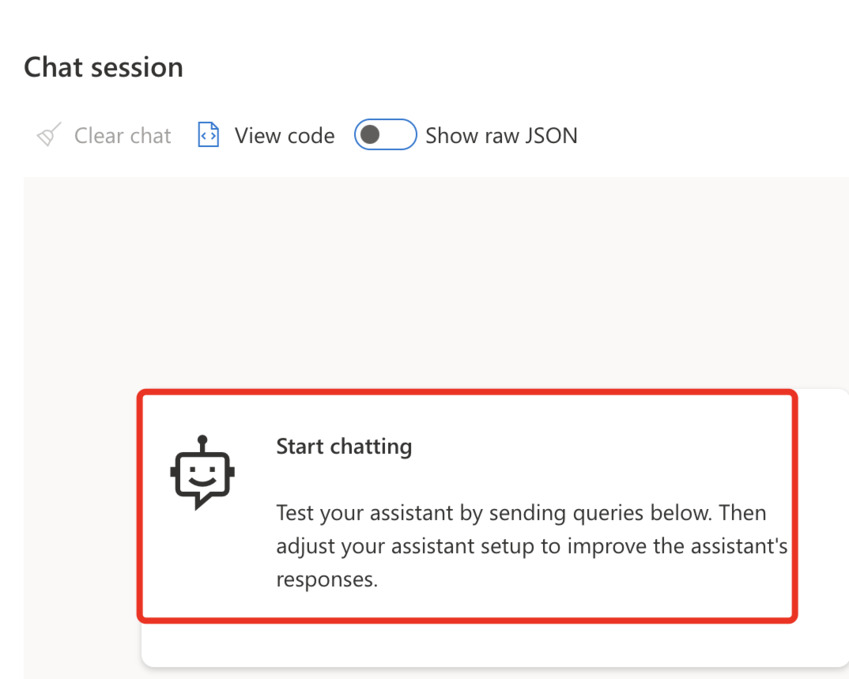

Step 2. In the ‘Chat Session’, you will now see the message ‘Test your assistant by sending queries below’.

Step 3. In the ‘Assistant setup’, click on ‘Add your data (preview)’.

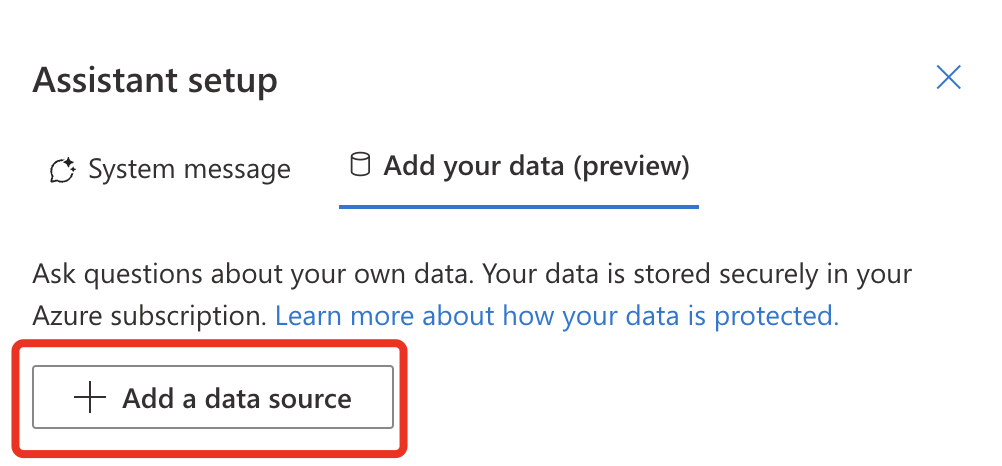

Step 4. You will see another button labeled ‘Add a data source’.

Step 5. After clicking on ‘Add a data source’, you will be taken to a different page.

Note. For adding your own data, the free tier is not supported.

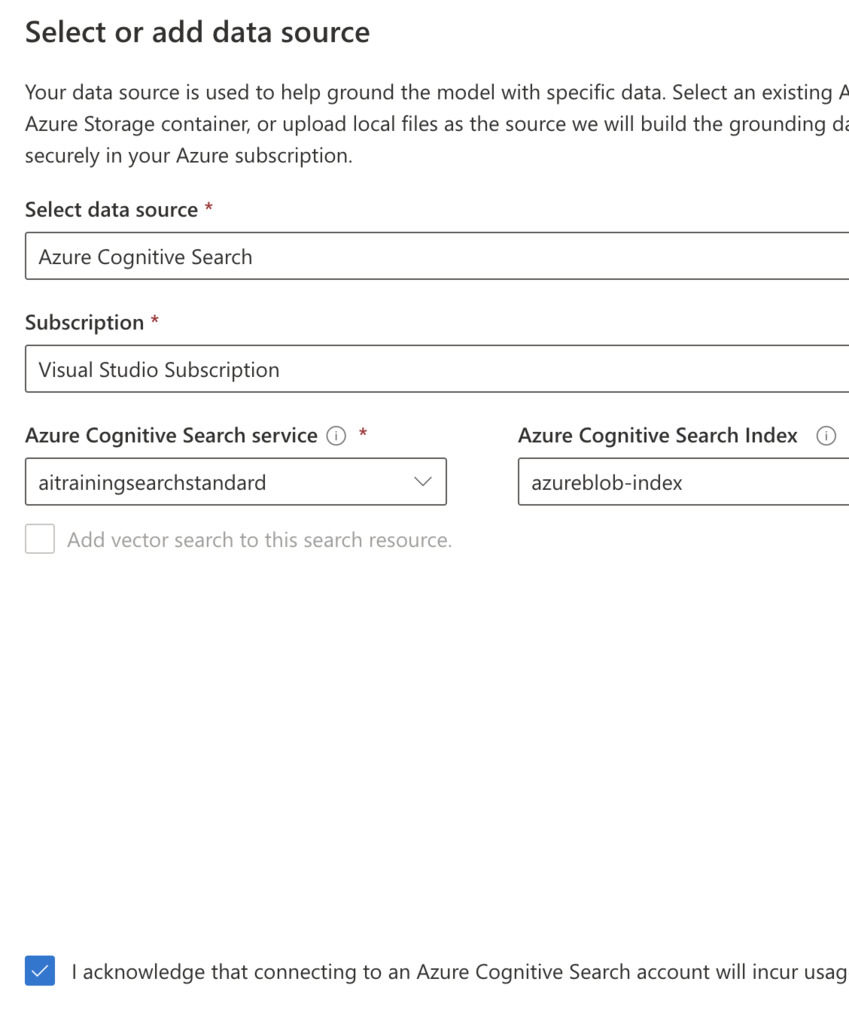

Step 6. If you have an existing cognitive search service, the option will be populated with it. For this demo, I am using the cognitive search service configured in the steps above. Once you select the check box acknowledging that your account will be charged for using the Azure Cognitive Search service, the ‘Next’ button is activated.

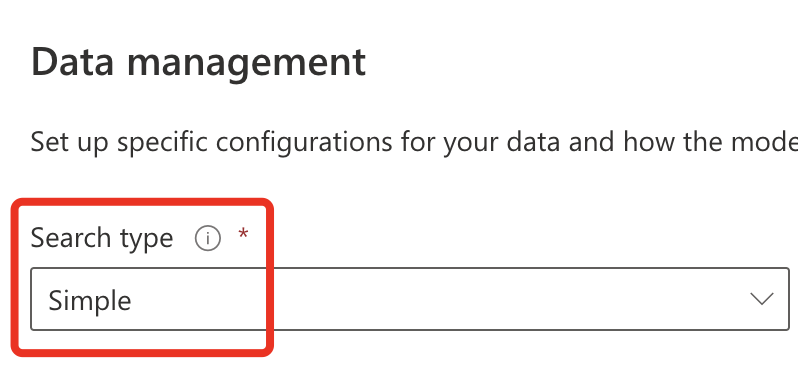

Step 7. Leave all options as default; click on ‘Next’. In the search type, select ‘simple’ and click on ‘Next’.

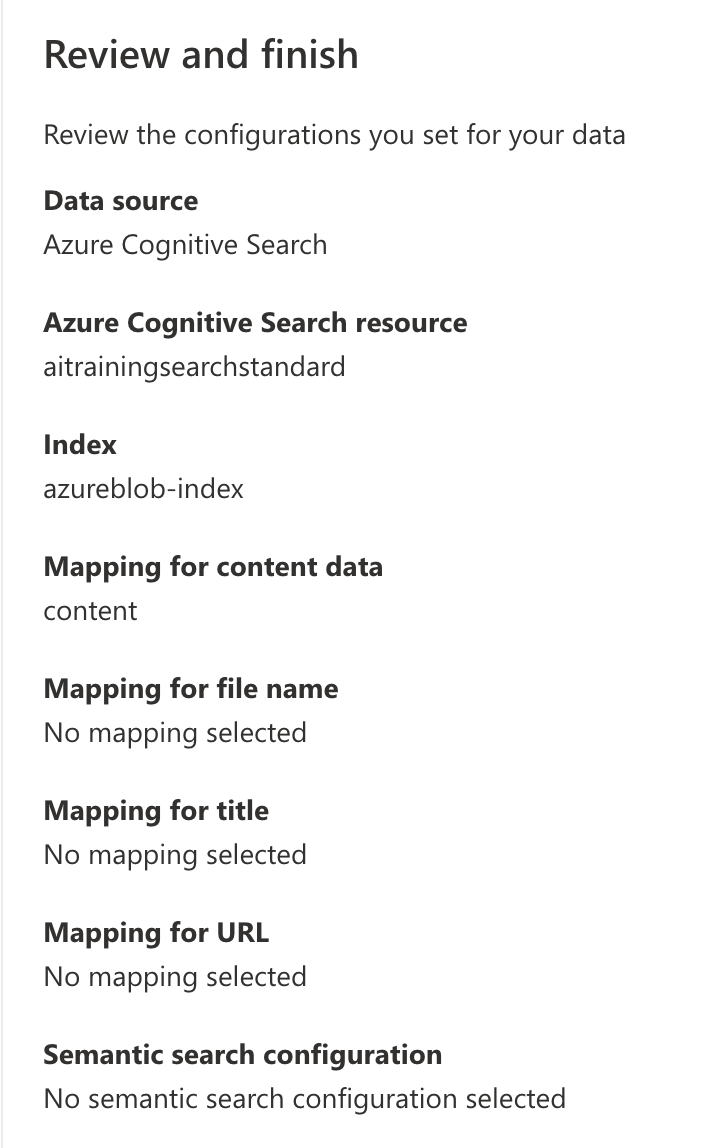

Step 8. In review and finish, review the information and click on ‘Save and close’.

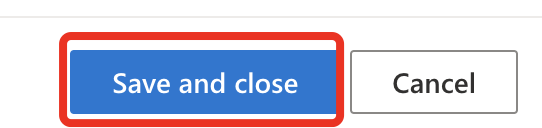

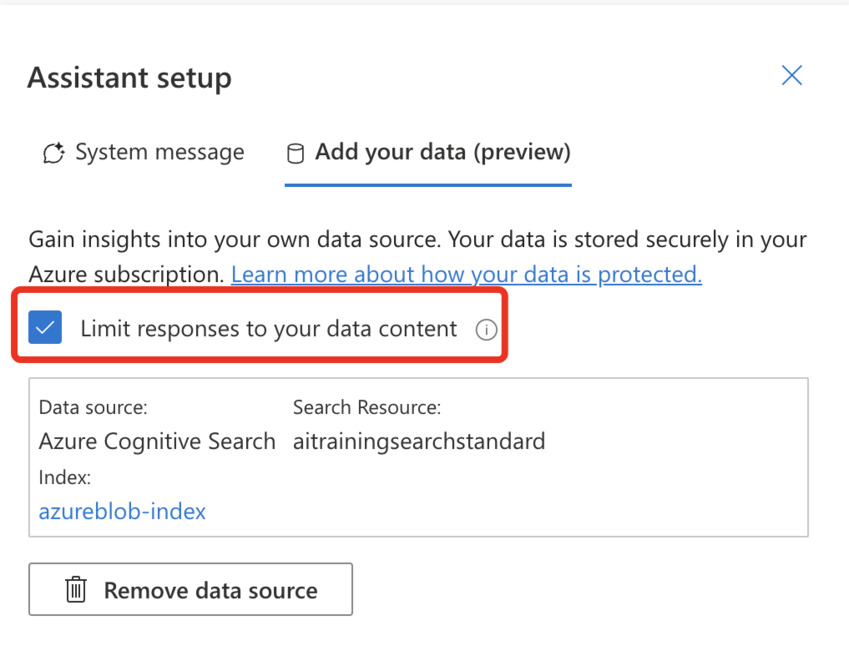

Step 9. In the assistant setup, make sure the option ‘Limit responses to your data content’ is checked.

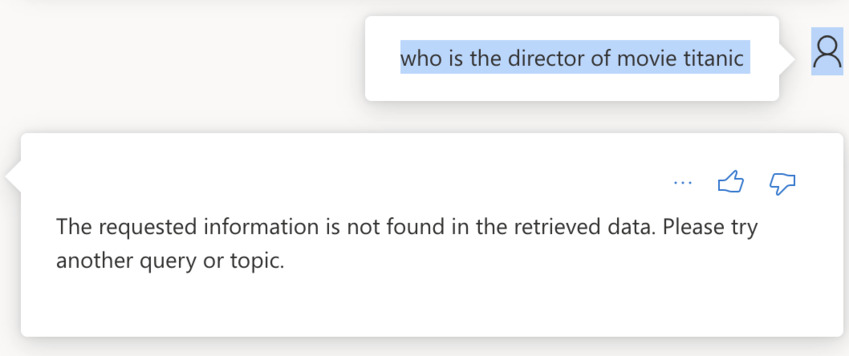

Step 10. In the chat session, type the question, ‘Who is the director of the movie Titanic?’ The chat model should respond that the requested information is not found.

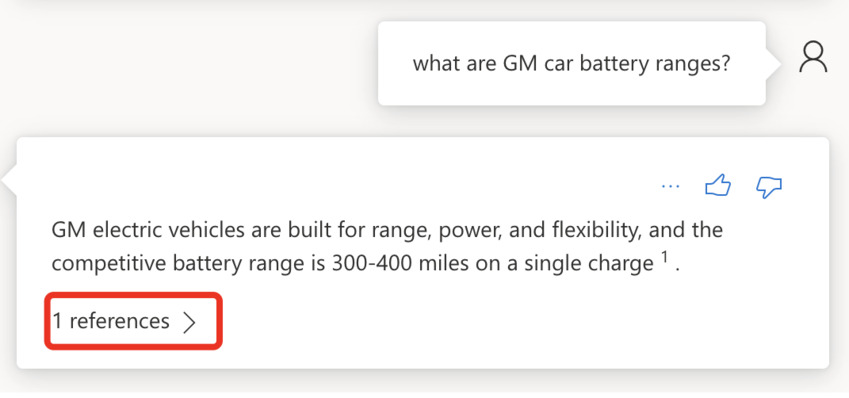

Step 11. Type the question, ‘What are GM car battery ranges?’ The model should display the information from the training data, along with references to the sources its answer came from.

The above steps confirm that the model now answers from your training data.
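
The Playground settings above can also be expressed as a request body for the preview ‘on your data’ REST endpoint. The sketch below only builds the URL and body. The field names (dataSources, AzureCognitiveSearch, inScope, queryType), the extensions path, and the API version follow the 2023 preview API and should be treated as assumptions to verify against the current reference, since preview shapes change. The keys are placeholders.

```python
import json

EXTENSIONS_API_VERSION = "2023-06-01-preview"  # assumption: preview version


def on_your_data_url(resource, deployment):
    """URL of the preview chat endpoint that accepts data sources (assumed path)."""
    return (
        f"https://{resource.lower()}.openai.azure.com/openai/deployments/"
        f"{deployment}/extensions/chat/completions"
        f"?api-version={EXTENSIONS_API_VERSION}"
    )


def on_your_data_payload(search_service, search_key, index_name, question,
                         in_scope=True):
    """Request body that points the chat model at a cognitive search index."""
    return {
        "dataSources": [
            {
                "type": "AzureCognitiveSearch",
                "parameters": {
                    "endpoint": f"https://{search_service}.search.windows.net",
                    "key": search_key,
                    "indexName": index_name,
                    # Assumed equivalent of 'Limit responses to your data content'.
                    "inScope": in_scope,
                    # Assumed equivalent of the 'simple' search type in Step 7.
                    "queryType": "simple",
                },
            }
        ],
        "messages": [{"role": "user", "content": question}],
    }


payload = on_your_data_payload(
    "aitrainingsearchstandard", "<your-search-key>", "azureblob-index",
    "What are GM car battery ranges?",
)
print(on_your_data_url("TeamsOpenAIDemo", "gpt35demo"))
print(json.dumps(payload, indent=2))
```

Setting in_scope to False in this sketch would correspond to unchecking the option from Step 9, which lets the model fall back on its general knowledge.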

Training Data

Training data is supported in the following formats:

- .md (Markdown)

- .html

- .txt

- .ppt

For this article, I have chosen the Markdown file. You can download the markdown file from the attachment. Once you have selected the file, click on ‘Upload’.

Note. After the deployment of the cognitive search service, wait at least 5 minutes for the post-deployment configuration to complete on the Azure side.

Points to Note

- Please plan for costs when deploying the services. Some services are billed whether or not they are used; for instance, a cognitive search service on the Standard tier could cost up to $279 per month. Creating custom models could also incur costs, which are based on storage. More about the costs of the Azure OpenAI service can be found in the references section.

- For configuring the gpt-35 model with training data, the cognitive search service should be in at least the ‘Standard’ tier. Basic and free tiers are not supported.
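
To get a feel for what a short-lived demo might cost, the monthly figure above can be prorated. The sketch below assumes the charge accrues evenly over a 30-day month, which is a simplification; actual billing granularity and prices may differ, so treat the output as a rough estimate only.

```python
MONTHLY_STANDARD_SEARCH_USD = 279.0  # upper figure quoted in this article


def prorated_cost(monthly_usd, days_provisioned, days_in_month=30):
    """Estimate the cost of keeping a service provisioned for some days.

    Assumes the charge accrues evenly whether or not the service is used.
    """
    return round(monthly_usd * days_provisioned / days_in_month, 2)


for days in (1, 7, 30):
    print(days, "day(s):", prorated_cost(MONTHLY_STANDARD_SEARCH_USD, days))
```

Even a week of leaving the search service running adds up, which is why the clean-up steps at the end of this article matter.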

Conclusion

We followed the steps below to train the model on our data, limiting it to answering questions and queries only from the training data in its own natural conversational flow.

- Create your markdown file from the template that is attached to the article.

- Configure Azure Cognitive Search to create an index from the data that is stored in the training data container.

- Wait for the index to finish. Test the query in the UI screen in the Azure Cognitive Search module.

- Test the training data in the AI playground.

Clean Up

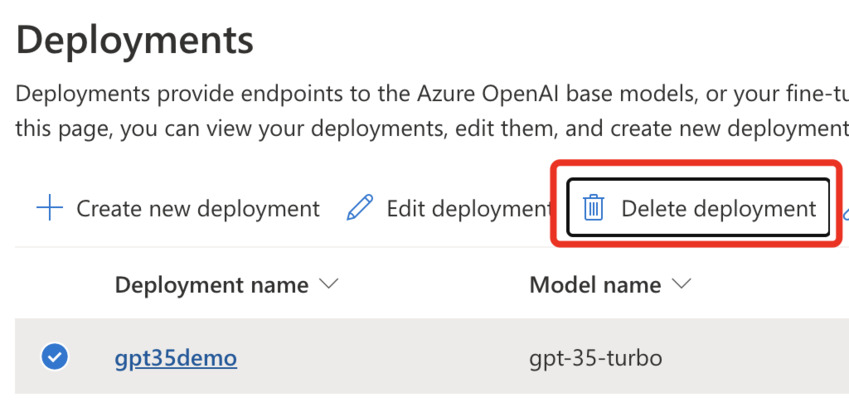

Step 1. First, delete the custom deployment.

Please note that Azure OpenAI models incur a hosting charge. The cost is incurred whether or not the service is being used, in this case, whether or not queries are being sent. Proper planning is needed in real-world scenarios to avoid overpaying. At this point, there is no option to stop the service once a specific budget is reached; however, we can set up alerts based on subscription resource cost and take the necessary action. More information about monitoring costs and usage can be found in the references section.

Step 2. Delete the resource group that is configured for this task. In this case, it is teamsai-rg.
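
Deleting the resource group can also be done through the Azure Resource Manager REST API. Below is a minimal sketch that builds the DELETE request without sending it. The API version, the all-zero subscription ID, and the token are placeholders and assumptions; obtaining a real bearer token is outside the scope of this sketch.

```python
import urllib.request

ARM_API_VERSION = "2021-04-01"  # assumption: a supported Resource Manager version


def build_delete_group_request(subscription_id, resource_group, bearer_token):
    """Build (but do not send) a request that deletes a whole resource group."""
    url = (
        f"https://management.azure.com/subscriptions/{subscription_id}"
        f"/resourcegroups/{resource_group}?api-version={ARM_API_VERSION}"
    )
    return urllib.request.Request(
        url,
        headers={"Authorization": f"Bearer {bearer_token}"},
        method="DELETE",
    )


request = build_delete_group_request(
    "00000000-0000-0000-0000-000000000000", "teamsai-rg", "<your-token>"
)
print(request.get_method(), request.full_url)
# Sending this request removes every resource in the group, so double-check
# the resource group name before calling urllib.request.urlopen(request).
```

Because the storage account, cognitive search, and Azure OpenAI resources in this article were all created in teamsai-rg, deleting the group removes them together.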

References

- https://learn.microsoft.com/en-us/azure/cognitive-services/openai/chatgpt-quickstart?tabs=command-line&pivots=programming-language-studio

- https://learn.microsoft.com/en-us/azure/cognitive-services/openai/how-to/chatgpt?pivots=programming-language-chat-completions

- https://learn.microsoft.com/en-us/azure/cognitive-services/openai/overview

- https://learn.microsoft.com/en-us/azure/ai-services/openai/how-to/manage-costs

Reference:

Ayinapurapu, V. (2023). How to Train Azure Open AI Models? Available at: https://www.c-sharpcorner.com/article/how-to-train-azure-open-ai-models/ [Accessed: 18th January 2024].