Introduction

Power BI users, hold on, the article will be relevant to you 🙂 Maybe 🙂

The relatively frequent question I receive at conferences and training on analytical services is: When to use Multidimensional, when to use Tabular (Power BI runs on Tabula).

There’s no quick answer (and maybe a separate blog post). One of the factors entering decision making is storage architecture.

Multidimensional holds data on disk, Tabular in memory. Ask yourself what your servers have more:

But is it really necessary to worry about the lack of memory? Tabular and Power BI compresses data into memory.

Among other things, how the compression in Vertipaq repository works I was talking on WUG Days and you can watch it here

https://www.wug.cz/zaznamy/466-WUG-Days-2018-Power-BI-Datove-modelovani-a-optimalizace

Specifically, the lecture’s description of the engine is based on a book by Albert Ferrari and Marc Russ:

Deffinitive Guide To DAX

( https://www.amazon.com/Definitive-Guide-DAX-intelligence-Microsoft/dp/073569835X )

There will be a second edition, wait for a complete overview.

The priorities of the Analysis Services development team are clear. There is a future in the Tabularu, Multidimensional is no longer developing

(we have not seen breakthrough news in Multidimensional since 2008) and support from client applications, especially Power BI, is lagging behind.

And that’s what makes me the most angry lately and reevaluating whether sticking to multidimensional is a good idea (and I’m a big fan).

Multidimensional was chosen for existing solutions in the company mainly with regard to function. Tabular did not meet all the functional requirements, but after a few years the situation is a bit different.

What I am waiting for in Multidimensional and starting to become a real pain are session level calculations at the report level for live connection. The pain is more.

I’ll make it a separate blog post in English, because one wise man told me about my MVP activities. “If you want to change something, it must reach the product team.”

So I wonder if I will write Czech, alternate languages, or go to English (to better influence product development). But this is another story.

In summary, I am starting to think strongly about redesigning an existing multidimensional solution at Tabular

(if my requests are not answered, nothing else is left for me).

But it will not be a day to remake something that has been created for about 4 years. So you can look forward to follow-up blog posts.

Main part

I have a multidimensional cube of about 11 measure groups, 27 dimensions. It takes up to 86 GB of disk space on MOLAP storage.

The first basic question is

If I wanted to redo the existing solution to Tabula, how much memory does the model take, in which I have the same data available?

I created a model containing all Measure Groups. And most of the dimensions.

I missed some of the little ones because the exercise would take too much time and the effect on size would be minimal anyway.

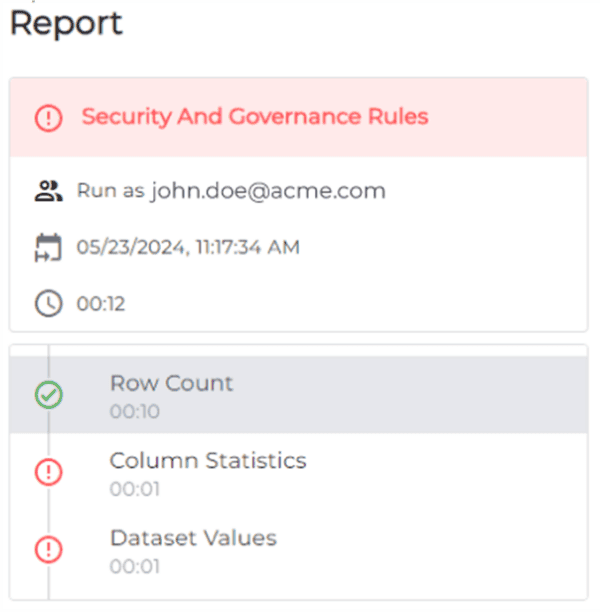

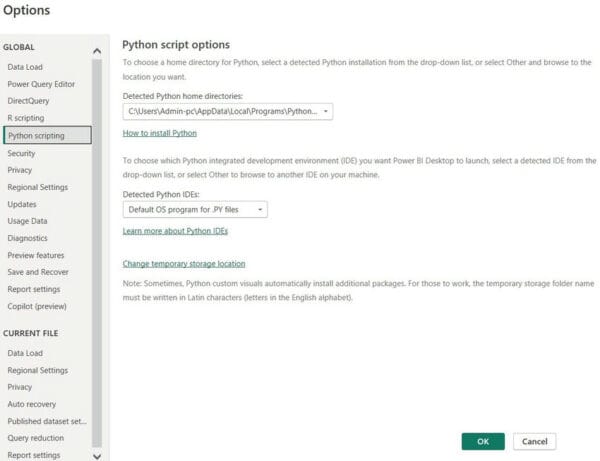

I used Vertipaq Analyzer from SQLBI to analyze the size ( https://www.sqlbi.com/ )

The tool can be downloaded here including tutorial. Basically it is a Power Pivot model built over the SSAS metadata. It can be used for both Tabula and Power BI analysis.

Just change the connection string to your SSAS and update the data.

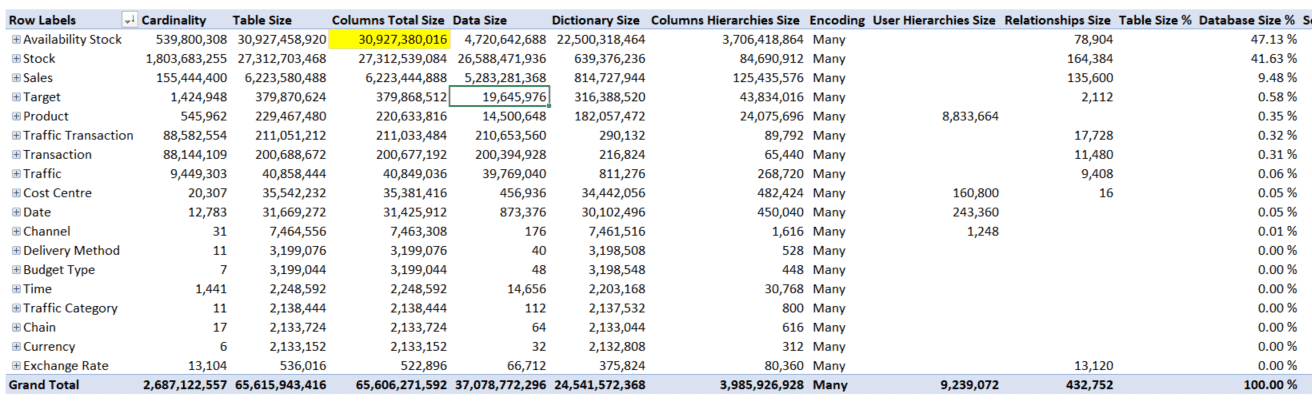

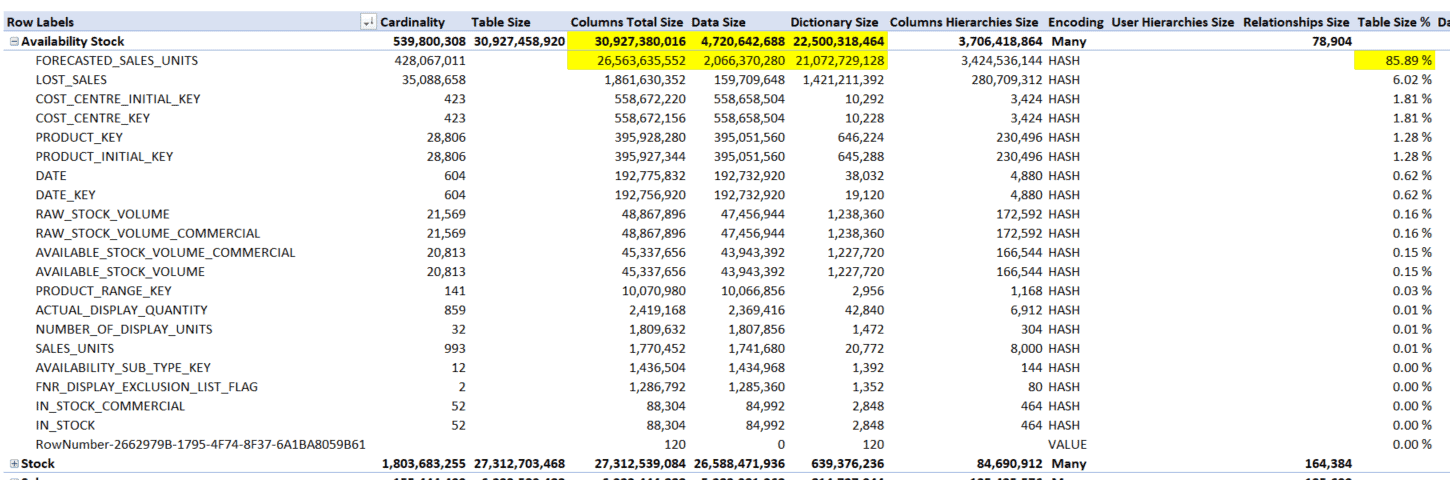

Measurement results without any optimization

Total database size 65.6GB without any optimization. Most places took the biggest two fact tables.

31 GB availability stock (539 million large table entries related to goods availability) and 27.3 GB stock

(1.8 billion large stock snapshot table).

The facts are in the last 3 fiscal years, so we now have about 2.5 years of data. We keep a longer history in the data warehouse. These two factual tables took 88.76% of the total size.

Meaning of selected columns from screenshot:

Cardinality – number of rows in the table, number of unique values in the column

Table size – Columns size + User Hierarchies Size + Relationship Size

Columns total size – Data size in columns (Data size, Dictionary size, Hierarchies Size)

Data size – size of detailed data

Dictionary size – the size of a dictionary related to compression details about compression can be found in the article from Albert and Marc here

It is therefore obvious from the previous screenshot that Availability stock occupies nearly 31GB and of which 22.5GB is a dictionary.

The table is smaller in number of rows but larger in consumed size in memory.

How about the individual columns?

Notice the yellow cells. Table 30.9 GB. Forecasted_sales_units column 26.5 GB. That is, 85.89%. Data in column 2GB, dictionary for data compression to 21GB.

This is a bit of disproportion and room for optimization.

As far as I know how compression works in tabula, I know that the size of the dictionary is determined by the data type. The data type affects cardinality.

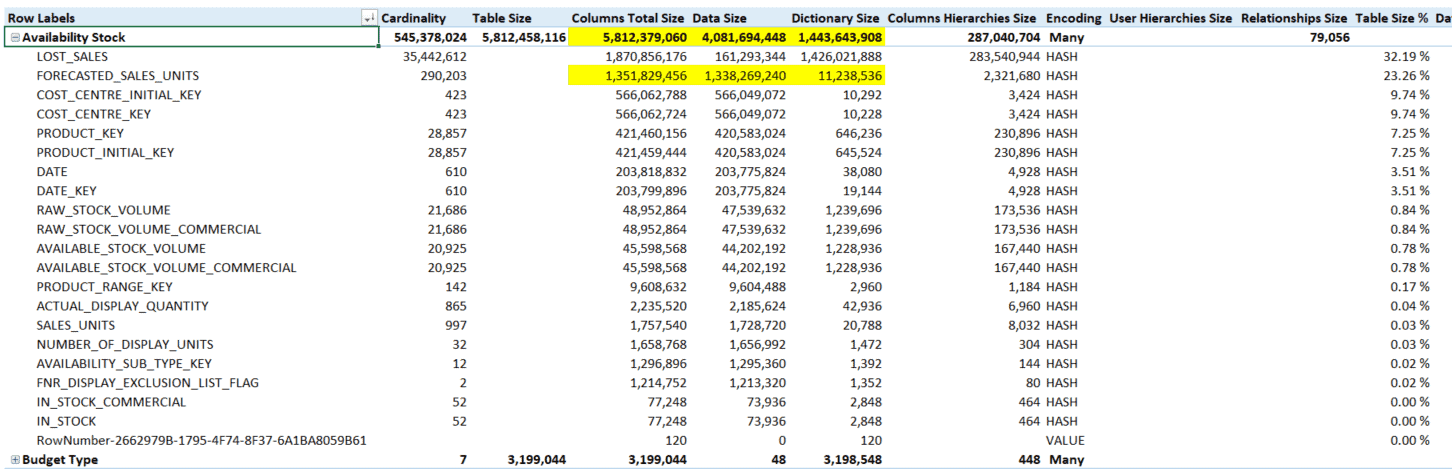

I check the data type at the column and see the float. Can I keep the model’s functionality out of touch with a sensitive data type change?

Decimal (19.4) might be enough. I will reload and look at the head.

Changing the data type hit the table size from 30.9 GB to 5.8 GB. The database got it from 65.6 GB to 40.5 GB.

I got specific column forecasted sales units from 26.5 GB to 1.3 GB. This is a decent change of data type for one column 🙂

Conclusion

How big a model will be in Power BI or Tabula cannot be predicted only based on the size of the input data.

It will depend on a number of factors, especially the ability of the vertipaq engine to compress data. This article should give you a small tutorial on how to analyze your used space.

As for the potential conversion of an existing multidimensional solution into a Tabula.

Answering the first question, the site problem will be. The place will not be a problem. Even the calculations for the basic metrics above the 1.8 billion records table were very decent.

But it is a long-distance run, and more questions need to be answered. Like:

Will it cover the entire functionality? Will it not go into the flowers, how do things get complicated by the complexity of business logic? About it sometime after 🙂

About the Author:

1.1.2017 I won the prestigious Microsoft MVP Data Platform

Reference:

Neoral, J. (2018). Tabular Power BI – Modern Size. Available at: http://www.neoral.cz/2018/12/tabular-power-bi-velikost-modelu.html [Accessed: 3rd May 2019].