The “how” isn’t important here (you’d need to buy me a drink to get it out of me!) – but what you need to know is this:

Late last year, I was faced with a situation where one of my customers attempted to do a Terraform deployment to their Production environment, only to find the Terraform state file was missing! Not only that, the entire Storage Account hosting the state file had been deleted, along with the Staging environment state file as well.

The Storage Account in question had 14-day soft delete enabled. However, the issue was discovered on the 15th day, and Microsoft confirmed there was no way to recover the data.

The Terraform state file had been permanently deleted, and the customer’s Production and Staging deployments were now blocked.

The Terraform state files for both Production and Staging needed to be manually recreated.

This is the story of how I got out of this mess…

Rebuilding The Storage Account

The first thing I needed to do was create a new Storage Account in which to host the re-created state files.

It turns out the previous (now deleted) one had a very generic name (xxxxshared), so it really wasn’t obvious what it was used for. It also turns out that it didn’t have any resource locks to prevent accidental deletion, nor did it have any tags for further identification or metadata. The RBAC was somewhat suitable given the context, but clearly the data protection settings (14 day soft delete on blobs) were not.

The new Storage Account was given a name and tags by which it was VERY obvious what it was used for, and a resource lock was applied to prevent accidental deletion. The soft delete timeframe was extended, and snapshots were also enabled. The more data protection on this, the better!

Rebuilding The State File

The problem with the state file being deleted is that when you run a terraform plan, Terraform thinks that everything needs to be created from scratch, as if it were deploying to a clean environment.

When you try to run a terraform apply, Terraform throws a massive wobbly, complaining that resources exist in Azure, but not in state – which, to be fair, is totally accurate.

Error: A resource with the ID "/subscriptions/xxxxxx/resourceGroups/rsg-uks-xxxxxx" already exists - to be managed via Terraform this resource needs to be imported into the State. Please see the resource documentation for "azurerm_resource_group" for more information.

The way to fix that is to run the terraform import command, where you pass in the Terraform resource address (what I call the Terraform resource ID throughout this post) and the corresponding Azure resource ID.

Thomas Thornton has an excellent blog post on this, here.

terraform import azurerm_resource_group.xxxxxx /subscriptions/xxxxxx/resourceGroups/rsg-uks-xxxxxx

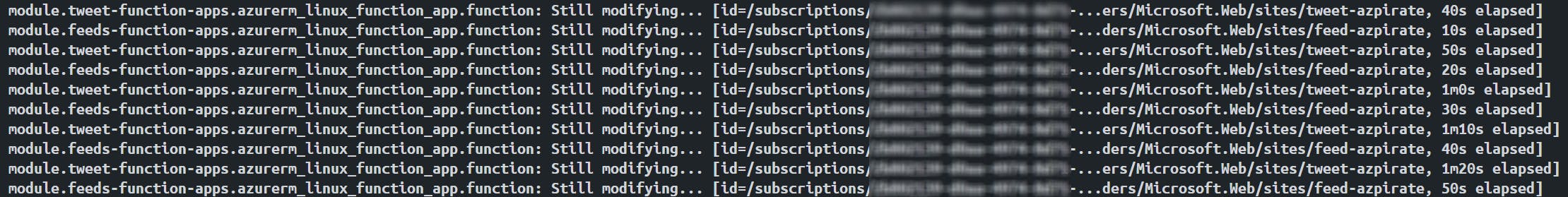

In itself, this is a fairly simple approach. However, this particular Terraform deployment was incredibly complex. It was partially modular, riddled with resource interdependencies, and it was huge, absolutely huge! For context, the state file for the development environment was 58,176 lines long! I don’t recall the number of Azure resources that were deployed, but there were a lot of them, in one big Terraform deployment.

This presented a rather large challenge when it came to running the terraform import commands (which I would need to do per resource). Firstly, how on earth was I going to get a list of every single Terraform resource? And then, how would I get the corresponding Azure resource ID for each one? Given the size and complexity of the Terraform deployment, neither was going to be easy.


My saving grace is that I was able to get hold of the pipeline logs from the previous deployment to Staging.

From there, I was able to extract the terraform apply output, and from that I could parse the file (using PowerShell and some manual tweaking) to come up with a list of both Terraform and Azure resource IDs. The output of this was a whopping big script of multiple terraform import commands that I could run to rebuild the Staging state file.

For a reason I can no longer recall, the terraform apply output was all I could get access to at this time. I think it was down to the plan being output to file and saved as a build artifact, which was eventually removed.
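As a rough illustration of that parsing step, here is a minimal Python sketch (the original work was done in PowerShell, and this is not that script). It assumes the apply log contains Terraform's usual progress lines, such as "Refreshing state... [id=...]" and "Creation complete after 21s [id=...]", and that resource addresses contain no spaces. The function names are made up for this example, and the quoting is for a POSIX shell. It ignores destroy events, so anything removed during that run would still need weeding out by hand.

```python
import re
import shlex

# Strips ANSI colour codes that CI log viewers often leave in place.
ANSI_RE = re.compile(r"\x1b\[[0-9;]*m")

# Matches apply-output lines that report a resource address and its ID, e.g.
#   azurerm_resource_group.main: Refreshing state... [id=/subscriptions/...]
#   module.app.azurerm_storage_account.sa: Creation complete after 21s [id=...]
RESOURCE_RE = re.compile(
    r"(?P<address>\S+): "
    r"(?:Refreshing state\.\.\.|(?:Creation|Modifications) complete after \S+) "
    r"\[id=(?P<id>.+)\]\s*$"
)


def extract_resources(log_text):
    """Return {terraform_address: azure_id} from terraform apply output."""
    resources = {}
    for raw_line in log_text.splitlines():
        match = RESOURCE_RE.search(ANSI_RE.sub("", raw_line))
        if not match:
            continue
        address = match.group("address")
        # Data sources are read on every run and are never imported.
        if address.startswith("data.") or ".data." in address:
            continue
        resources[address] = match.group("id")  # a later line for the same address wins
    return resources


def import_commands(resources):
    """Build one `terraform import` command per resource, in log order."""
    return [
        f"terraform import {shlex.quote(address)} {shlex.quote(resource_id)}"
        for address, resource_id in resources.items()
    ]
```

Feeding the saved log text through extract_resources and then import_commands gives a list of commands to review by hand before running anything.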

With Production, however, I was not so lucky. The Staging deployment had been done recently in readiness to eventually deploy to Production. The most recent Production deployment logs were long gone due to a retention policy.

This meant that Staging was a version ahead of Prod. Therefore, there were infra differences between the two environments, so I couldn’t just duplicate the Staging state file and do a search and replace on the subscription and resource names.

To get around this, I had to repeatedly run terraform import followed by terraform plan to capture the Terraform resource IDs and manually match them to the Azure resource IDs, updating my list as I went for anything the plan output flagged as missing. Thankfully, several of the resource IDs could be pinched from the Staging work I did earlier, and the import-then-plan cycle allowed me to fill in the gaps. It was a painstaking process.
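To give a feel for one pass of that loop, the following Python sketch lists the addresses a saved terraform plan output still wants to create, which is roughly the "what have I not imported yet?" question. It assumes the standard human-readable plan format, where each new resource is announced with a line like "# azurerm_resource_group.main will be created". The function name is invented for this example. Not every address it returns necessarily exists in Azure, so each one still needs checking by hand.

```python
import re

# Strips ANSI colour codes that CI log viewers often leave in place.
ANSI_RE = re.compile(r"\x1b\[[0-9;]*m")

# Plan output announces each new resource as: "  # <address> will be created"
CREATE_RE = re.compile(r"# (?P<address>.+) will be created\s*$")


def addresses_to_create(plan_text):
    """Return the resource addresses the plan proposes to create, in order."""
    pending = []
    for raw_line in plan_text.splitlines():
        match = CREATE_RE.search(ANSI_RE.sub("", raw_line))
        if match:
            pending.append(match.group("address"))
    return pending
```

Running this after each batch of imports should show the list shrinking until only genuinely new resources remain.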

Deployment Issues

I discovered some resources simply could not be imported back into state.

    resource "random_uuid" "ruuid" {}

    resource "azurerm_storage_account" "xxx" {
      name                      = substr(replace("xxx${random_uuid.ruuid.result}", "-", ""), 0, 23)
      resource_group_name       = var.rsg_name
      location                  = var.location_name
      account_tier              = "Standard"
      account_replication_type  = "LRS"
      enable_https_traffic_only = true
      min_tls_version           = "TLS1_2"
      tags                      = jsondecode(var.environment_tag)

      blob_properties {
        versioning_enabled = true
      }
    }

With the above, the randomly generated UUID existed in state as its own entity. This would be something like aabbccdd-eeff-0011-2233-445566778899. The Storage Account name consisted of only part of this UUID, and that depended on the friendly name that was prepended to it. With the above example, the Storage account would be called: xxxaabbccddeeff00112233, but obviously this differed between Storage Accounts.

As I didn’t know the original full UUIDs, there was no way I could import it back into state.

To get around this, I got a bit hacky by taking the UUID part of every existing storage account, and converting that into a UUID by adding a shed load of random numbers, being careful to add the hyphens in the right place. For example:

terraform import random_uuid.ruuid aabbccdd-eeff-0011-2233-666666666666

Needless to say, it was a hacky faff, but it worked.
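For anyone wanting to reproduce the trick, here is a small Python sketch of the padding logic. It assumes the naming expression shown earlier (prefix plus UUID, hyphens removed, truncated to 23 characters). The function names are invented for this example, and it pads with a fixed filler digit rather than random numbers so that the result is repeatable. The only thing that matters is that the stand-in UUID produces the same Storage Account name, which the sketch checks before returning.

```python
import uuid

NAME_LENGTH = 23  # matches substr(..., 0, 23) in the Terraform config


def storage_name(prefix, uuid_text):
    """Mirror substr(replace("<prefix><uuid>", "-", ""), 0, 23)."""
    return (prefix + uuid_text).replace("-", "")[:NAME_LENGTH]


def stand_in_uuid(account_name, prefix, filler="6"):
    """Rebuild a UUID whose leading hex digits reproduce account_name."""
    if not account_name.startswith(prefix):
        raise ValueError(f"{account_name!r} does not start with {prefix!r}")
    fragment = account_name[len(prefix):]
    # Pad the surviving hex digits out to the 32 a UUID needs.
    hex_digits = fragment.ljust(32, filler)
    # uuid.UUID validates the digits; str() adds the hyphens in the right places.
    candidate = str(uuid.UUID(hex=hex_digits))
    # The stand-in is only useful if Terraform would derive the same name from it.
    if storage_name(prefix, candidate) != account_name:
        raise ValueError("stand-in UUID does not reproduce the account name")
    return candidate
```

With the example above, stand_in_uuid("xxxaabbccddeeff00112233", "xxx") returns aabbccdd-eeff-0011-2233-666666666666, which is the value passed to terraform import.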

Another fairly critical issue I faced was with RBAC role assignments. For example:

    resource "azurerm_role_assignment" "example" {
      scope                = data.azurerm_subscription.primary.id
      role_definition_name = "Reader"
      principal_id         = data.azurerm_client_config.example.object_id
    }

These were numerous and existed in multiple different modules. I was able to get the Terraform resource IDs easily enough, but the corresponding Azure resource IDs proved to be a nightmare, as each role assignment is identified only by a GUID.

For example:

terraform import azurerm_role_assignment.example /subscriptions/00000000-0000-0000-0000-000000000000/providers/Microsoft.Authorization/roleAssignments/00000000-0000-0000-0000-000000000000

I was able to run the PowerShell command Get-AzRoleAssignment to pull the IDs from Azure, but the problem here was the sheer number of these to identify. It wasn’t just a case of user IDs on a subscription or resource group, oooh no, there were also many, many inter-resource role assignments, for example a Function App Managed Identity with an RBAC role of “Azure Service Bus Data Sender” applied to an Azure Service Bus.

I was able to script this in PowerShell to pull a great big list of every single role assignment, and then did a lot of searching of the Terraform config to match the IDs.
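The matching itself can be sketched as follows, in Python rather than the PowerShell I actually used. It assumes the role assignments have been exported to JSON (for example with Get-AzRoleAssignment | ConvertTo-Json) and that each record carries Scope, RoleDefinitionName, ObjectId and RoleAssignmentId properties; check those names against your own export. The function names, and the idea of supplying the scope, role and principal for each Terraform resource by hand, are assumptions made for this example.

```python
import json
import shlex


def normalise_scope(scope):
    """Azure resource IDs are case-insensitive, so compare them lowercased."""
    return scope.rstrip("/").lower()


def index_assignments(exported_json):
    """Index exported role assignments by (scope, role name, principal ID)."""
    records = json.loads(exported_json)
    if isinstance(records, dict):  # ConvertTo-Json emits a bare object for one item
        records = [records]
    index = {}
    for record in records:
        key = (
            normalise_scope(record["Scope"]),
            record["RoleDefinitionName"],
            record["ObjectId"].lower(),
        )
        index[key] = record["RoleAssignmentId"]
    return index


def role_import_command(index, address, scope, role_name, principal_id):
    """Return a terraform import command, or None if no assignment matches."""
    key = (normalise_scope(scope), role_name, principal_id.lower())
    assignment_id = index.get(key)
    if assignment_id is None:
        return None
    return f"terraform import {shlex.quote(address)} {shlex.quote(assignment_id)}"
```

A None result flags a role assignment that needs a closer look, either because the scope, role or principal was matched incorrectly or because the assignment no longer exists in Azure.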

Even with this in place, the terraform import command still failed on some, but not all, role assignments. Either I’d made mistakes when matching the IDs, or something else was going on under the hood with Azure.

Ultimately, due to the complexity and time pressures, we had to manually delete some role assignments and let Terraform recreate them on the next apply.

Deployment Prep

Before the Production terraform apply was run following the state file recreation, I made a point of taking manual backups of the Key Vault secrets, App Config contents and ensuring the databases had good enough backups. Anything that was deemed critical and could potentially have been impacted by the deployment was backed up.

I mention this here should you be reading this article faced with a similar situation. May this be your prompt to make backups and be prepared for the worst!

Lessons Learned

As I’m sure you can imagine, this was a stressful time for both me and my customer. The workarounds mentioned above were complex and far from ideal. Ultimately, the problem was resolved once the state files had been recreated, and terraform plan and apply jobs had been run against them.

If you’re ever in this position, then I really feel for you, and may this article act as a reference on how I got out of it.

For anyone reading this, I would strongly suggest following the below Lessons Learned to hopefully avoid landing yourself in a similar situation. If you can think of any more, let me know!

- When saving remote state in an Azure Storage Account:

  - Give the Storage Account a suitable, easily identifiable name.

  - Apply adequate data protection, such as soft delete and snapshots.

  - Apply adequate access control, such as RBAC, ABAC and network restrictions where appropriate.

  - Apply tags.

  - Apply a resource lock.

  - In my customer example, we deployed dedicated Storage Accounts to contain the state files for both Staging and Production, each with the above points applied.

- Make backups of your Terraform plan and apply outputs. For example, add a pipeline step to save them to blob storage (with blob retention policies applied).

- Terraform resources such as role assignments and random UUIDs (random_uuid) are very difficult to recreate in state. Review the documentation carefully to fully understand how they are imported.

- Run Terraform Apply very carefully, especially when testing fixes. Don’t use auto approve in this context, and carefully review the Terraform Plan outputs.

- Have adequate documentation, ideally with diagrams. Knowledge is power in this scenario.

- If you don’t have one already, have a disaster recovery plan, and consider adding a scenario such as this to it. For example, if you have to go through something such as this, can you get access to your Terraform variable file contents and environment variables (for example GitHub secrets)? Can you run a deployment locally if you really had to? Proper Planning and Preparation Prevents P!ss Poor Performance!

- Follow Terraform best practice. My personal advice would be to use a modular approach and have multiple smaller deployments (by component lifecycle for example) rather than one large, complex deployment (and therefore one large, complex state file). Having said that, there isn’t a one-size-fits-all approach, so do what’s right for your given scenario. Check out Terraform Recommended Practices for more detail.

About the Author

A Microsoft Most Valuable Professional (MVP) in Azure who writes about Azure, Automation and DevOps.

Reference

McLoughlin, D., 2023, Recovering From A Deleted Terraform State File, Clouddevdan.co.uk, Available at: https://clouddevdan.co.uk/recovering-from-a-deleted-terraform-state-file [Accessed on 5 July 2023]