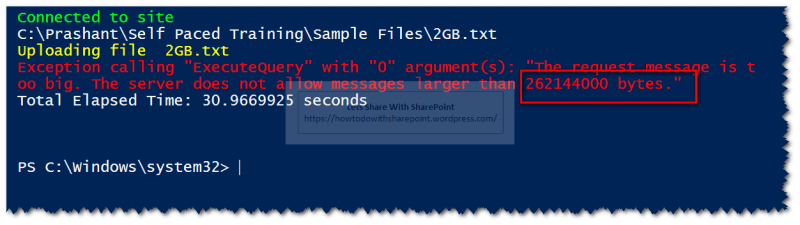

Uploading large files to SharePoint On-Premise or Online is a common problem during data migration from external systems such as Lotus Notes.

Here is one such error that we might encounter when trying to upload a file larger than 250 MB:

Connected to site

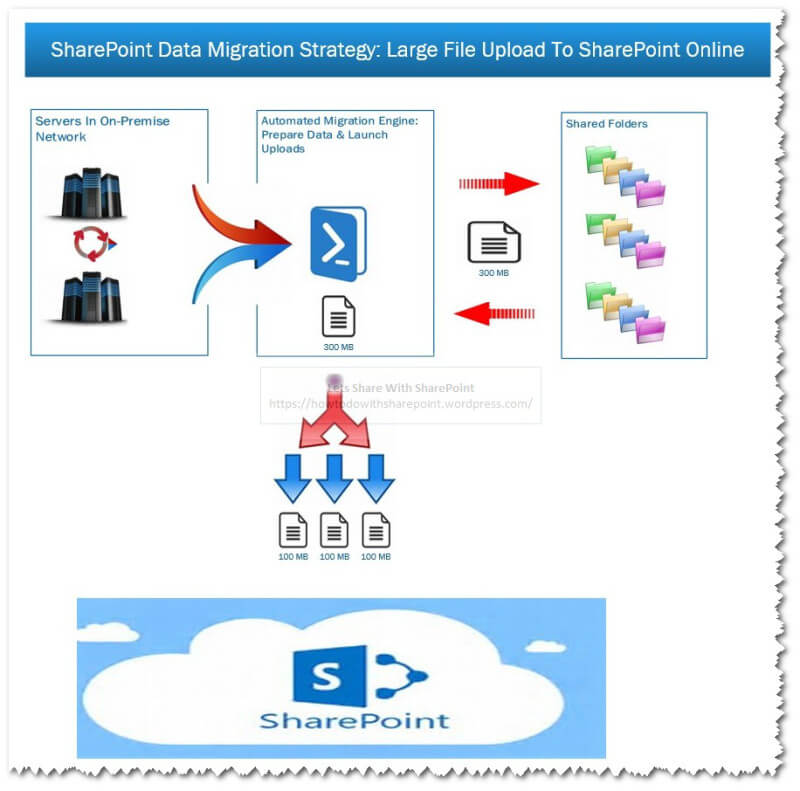

In this article, I will explain a data upload strategy that splits a large file into multiple smaller chunks.

Solution Architecture Diagram

For a better understanding, we can refer to the following solution architecture diagram:

SharePoint Data Migration Strategy

Based on this diagram, we can draw the following conclusions:

- This solution can be hosted on multiple servers to launch parallel uploads

- This solution can consume data from Network File Shares

- Once a data file is retrieved (say, of size 300 MB), this solution automatically splits it into chunks (for example, 100 MB each) based on the pre-configured chunk size, which should not exceed the size limit of 250 MB

- Each chunk is then appended to the uploaded file over multiple iterations
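The splitting described above can be sketched as plain arithmetic. This is a minimal illustration, not part of the original script: the helper name `Get-ChunkPlan` and its parameters are assumptions made for this example.

```powershell
# Hypothetical helper: lists the offset and length of every chunk for a file.
function Get-ChunkPlan {
    param(
        [Parameter(Mandatory)] [long] $FileSizeInBytes,
        [Parameter(Mandatory)] [long] $ChunkSizeInBytes
    )

    if ($ChunkSizeInBytes -le 0) { throw "Chunk size must be greater than zero." }

    $offset = [long]0
    $index  = 1
    while ($offset -lt $FileSizeInBytes) {
        # The final chunk may be shorter than the configured chunk size.
        $length = [Math]::Min($ChunkSizeInBytes, $FileSizeInBytes - $offset)
        [pscustomobject]@{ Chunk = $index; Offset = $offset; Length = $length }
        $offset += $length
        $index++
    }
}

# A 300 MB file with a 100 MB chunk size yields three chunks.
$plan = @(Get-ChunkPlan -FileSizeInBytes 300MB -ChunkSizeInBytes 100MB)
$plan | Format-Table
```

A file whose size is not a multiple of the chunk size simply ends with one shorter chunk.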

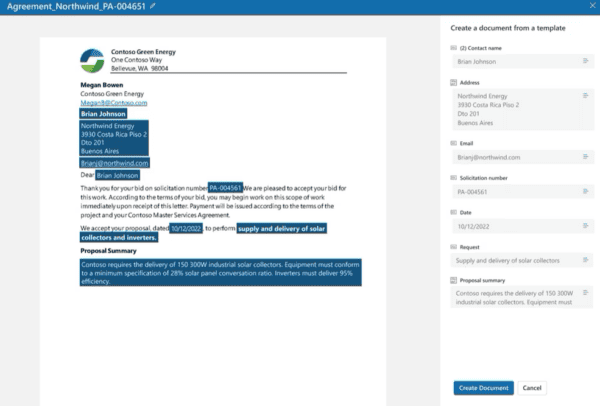

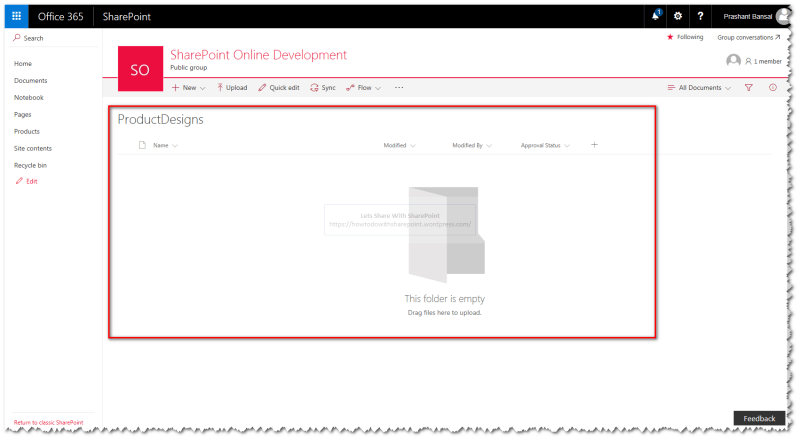

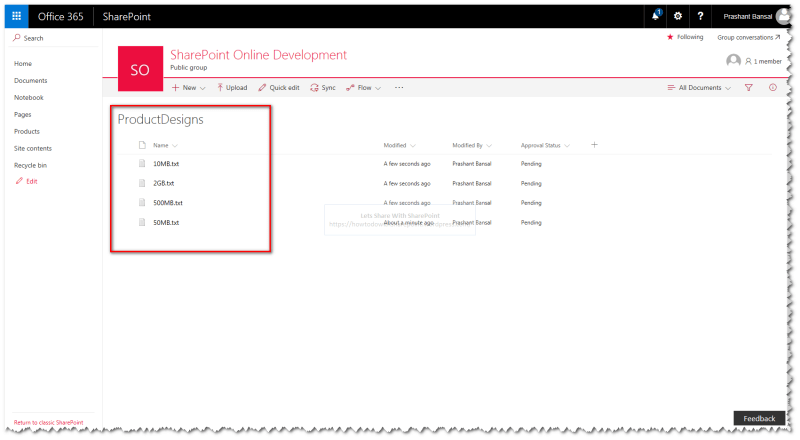

To follow this demo, we need a SharePoint document library in a SharePoint Online (or On-Premise) site, as shown below:

SharePoint Online Development

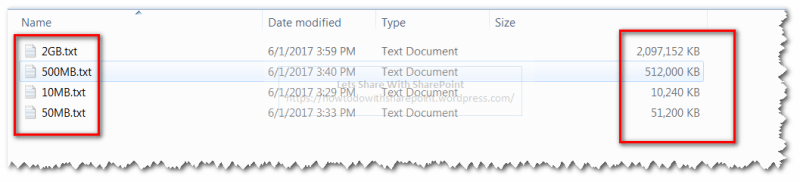

Another prerequisite to demo is to have files of various sizes that we can use to upload to the document library.

I used the following command-line utility to generate files of various sizes. This utility takes the destination file path and the size of the file in bytes as input.

Here is the usage example of the command line utility.

fsutil file createnew "C:\Prashant\Self Paced Training\Sample Files\2GB.txt" 2147483648

Similarly, I generated the other sample files of various sizes.
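The `fsutil` command above passes 2147483648, which is 2 GB expressed in bytes. As an alternative that does not rely on `fsutil`, the sketch below creates sample files through .NET. The helper name `New-TestFile`, the target folder, and the chosen sizes are assumptions made for this example.

```powershell
# Hypothetical helper: creates a file of an exact size, given in bytes.
function New-TestFile {
    param(
        [Parameter(Mandatory)] [string] $Path,
        [Parameter(Mandatory)] [long]   $SizeInBytes
    )

    $stream = [System.IO.File]::Create($Path)
    try {
        # SetLength extends the file to the requested size without writing real data.
        $stream.SetLength($SizeInBytes)
    }
    finally {
        $stream.Dispose()
    }
}

# Assumed target folder and sizes; adjust both to your environment.
$targetFolder = Join-Path ([System.IO.Path]::GetTempPath()) 'SampleFiles'
New-Item -ItemType Directory -Path $targetFolder -Force | Out-Null

$sizes = [ordered]@{ '10MB.txt' = 10MB; '50MB.txt' = 50MB }
foreach ($name in $sizes.Keys) {
    New-TestFile -Path (Join-Path $targetFolder $name) -SizeInBytes $sizes[$name]
}

Get-ChildItem -Path $targetFolder | Select-Object Name, Length
```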

Now, let’s dive into the code to understand the actual implementation.

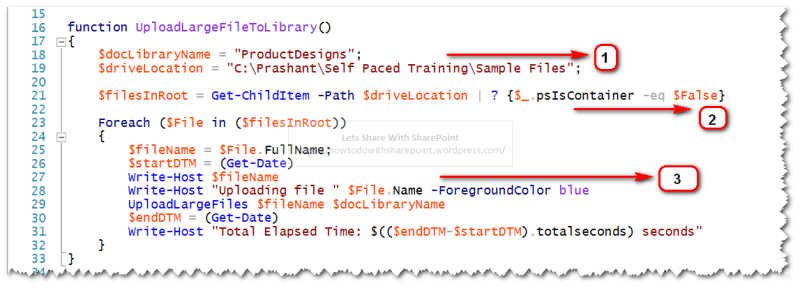

Step 1

Declare variables to hold the document library name and the folder path. For production use, I recommend keeping these values in an external configuration file.
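One possible way to externalize these values is a small JSON file. The file name `upload-settings.json` and its property names are assumptions made for this sketch. The script writes the file itself only so that the example is self-contained.

```powershell
# Assumed settings file, written here only so the sketch is self-contained.
$configPath = Join-Path ([System.IO.Path]::GetTempPath()) 'upload-settings.json'
@'
{
  "DocLibraryName": "ProductsDocuments",
  "DriveLocation": "C:\\Prashant\\Documents to Upload",
  "FileChunkSizeInMB": 100
}
'@ | Set-Content -Path $configPath -Encoding UTF8

# Read the settings back instead of hard-coding them in the script.
$settings = Get-Content -Path $configPath -Raw | ConvertFrom-Json

$docLibraryName = $settings.DocLibraryName
$driveLocation  = $settings.DriveLocation
$blockSize      = [long]$settings.FileChunkSizeInMB * 1MB

Write-Host "Library: $docLibraryName; source: $driveLocation; chunk: $blockSize bytes"
```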

Step 2

Read the files from the folder specified in the path variable from Step 1.

Step 3

Loop through all the files and pass each file to the “UploadLargeFiles” function along with the document library name.
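Steps 2 and 3 can be tried without a SharePoint connection by swapping the upload for a stub. In this sketch, `Send-FileStub`, the temporary folder, and the sample file names are assumptions. `Send-FileStub` merely stands in for “UploadLargeFiles”.

```powershell
# Stand-in for the real upload function; it only reports what it would upload.
function Send-FileStub {
    param([string] $FilePath, [string] $LibraryName)
    "Would upload '$FilePath' to '$LibraryName'"
}

# Assumed source folder with two sample files, created so the sketch can run anywhere.
$sourceFolder = Join-Path ([System.IO.Path]::GetTempPath()) 'DocumentsToUpload'
New-Item -ItemType Directory -Path $sourceFolder -Force | Out-Null
Set-Content -Path (Join-Path $sourceFolder 'a.txt') -Value 'first'
Set-Content -Path (Join-Path $sourceFolder 'b.txt') -Value 'second'

# -File skips sub-folders; FullName avoids rebuilding the path by hand.
$messages = foreach ($file in Get-ChildItem -Path $sourceFolder -File) {
    Send-FileStub -FilePath $file.FullName -LibraryName 'ProductsDocuments'
}
$messages
```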

“UploadLargeFiles”

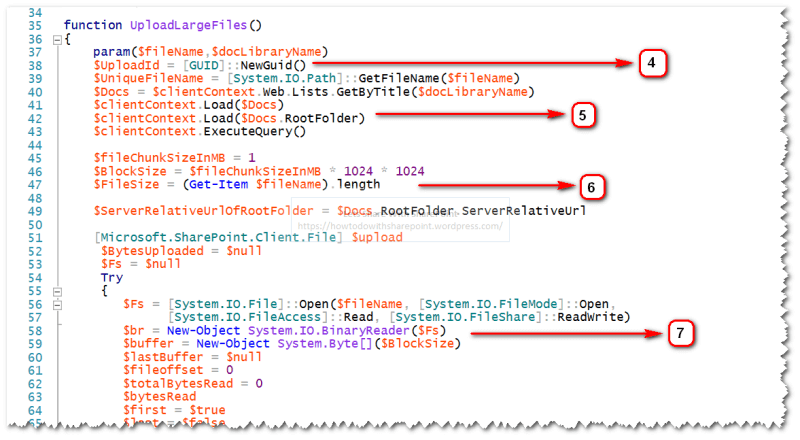

Step 4

Generate a unique upload ID and get the name of the file to be uploaded.
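In isolation, this step comes down to two .NET calls. The sample path below is an assumption used only for illustration.

```powershell
# A fresh GUID identifies one upload session; reuse it for every chunk of the same file.
$uploadId = [guid]::NewGuid()

# GetFileName keeps only the last path segment, which becomes the file name in the library.
$sourcePath     = Join-Path 'Documents to Upload' '1gb.txt'
$uniqueFileName = [System.IO.Path]::GetFileName($sourcePath)

Write-Host "Upload id: $uploadId"
Write-Host "Target file name: $uniqueFileName"
```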

Step 5

Get a handle on the document library object and load the root folder (or any target folder) within the document library.

Step 6

Calculate the block size to be uploaded and total file size (as shown in the architecture diagram).

Step 7

Read the bytes from the source file and set the read buffer based on the block size.

Read the bytes and set the read buffer

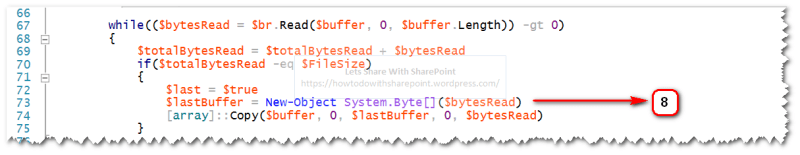

Step 8

Read the bytes based on the buffer limit
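The buffered read in Steps 7 and 8 can be exercised on its own, without SharePoint. The sketch below uses a deliberately tiny block size and a temporary sample file, both of which are assumptions made for this example.

```powershell
# Create a small sample file (2,500 bytes) so the sketch is self-contained.
$samplePath  = Join-Path ([System.IO.Path]::GetTempPath()) 'chunk-read-sample.bin'
$sampleBytes = New-Object byte[] 2500
[System.IO.File]::WriteAllBytes($samplePath, $sampleBytes)

$blockSize  = 1000                          # assumed chunk size in bytes, tiny on purpose
$buffer     = New-Object byte[] $blockSize
$chunkSizes = New-Object System.Collections.Generic.List[int]

$stream = [System.IO.File]::OpenRead($samplePath)
try {
    # Read returns the number of bytes actually placed in the buffer; 0 means end of file.
    while (($bytesRead = $stream.Read($buffer, 0, $buffer.Length)) -gt 0) {
        # Copy only the bytes that were read, so a short final chunk carries no stale data.
        $chunk = New-Object byte[] $bytesRead
        [Array]::Copy($buffer, 0, $chunk, 0, $bytesRead)
        $chunkSizes.Add($chunk.Length)
    }
}
finally {
    $stream.Dispose()
}

$chunkSizes -join ', '
```

The chunk lengths add up to the file size. Copying into a right-sized array matters because the read buffer is reused and still holds bytes from the previous iteration.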

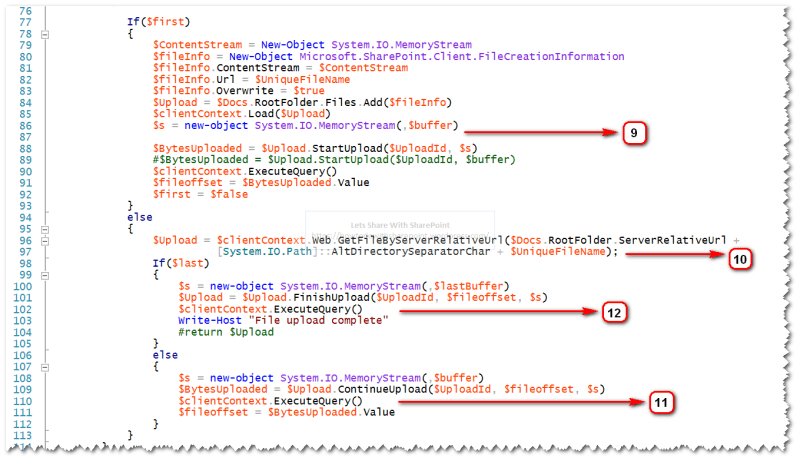

Step 9

Check whether this is the first chunk being uploaded. If it is, add a new, empty file to the SharePoint document library, wrap the bytes read for this chunk in a stream, and call the “StartUpload” method of the “Microsoft.SharePoint.Client.File” class. This creates the file in the document library with only the first chunk of content.

Step 10

If this is not the first chunk being uploaded, find the file in the document library and get a handle on it.

Step 11

If this chunk is neither the first nor the last, it is appended to the same file by using the “ContinueUpload” method of the “Microsoft.SharePoint.Client.File” class. This appends the content to the file identified by the upload ID that we initialized in Step 4.

Step 12

If this is the last chunk of data, it is appended to the same file by using the “FinishUpload” method of the “Microsoft.SharePoint.Client.File” class. This appends the content to the file identified by the upload ID that we initialized in Step 4 and commits the changes. Once this method completes successfully, the changes are persisted to the file.
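The decision made in Steps 9 to 12 can be summarized as a small pure function. This is a sketch under assumptions: the helper name `Get-UploadCall` and the `SingleRequest` label are hypothetical. `SingleRequest` marks the case where the whole file fits in one chunk, which the steps above do not cover.

```powershell
# Hypothetical helper: names the upload call a chunk needs, based on its position in the file.
function Get-UploadCall {
    param(
        [long] $Offset,       # bytes already sent before this chunk
        [long] $BytesRead,    # size of this chunk
        [long] $FileSize      # total size of the source file
    )

    $isFirst = ($Offset -eq 0)
    $isLast  = (($Offset + $BytesRead) -ge $FileSize)

    if ($isFirst -and $isLast) { return 'SingleRequest' }   # file fits in one chunk
    if ($isFirst)              { return 'StartUpload' }
    if ($isLast)               { return 'FinishUpload' }
    return 'ContinueUpload'
}

# Walk a 250 MB file in 100 MB chunks and collect the call for each chunk.
$fileSize  = 250MB
$chunkSize = 100MB
$calls = for ($offset = [long]0; $offset -lt $fileSize; $offset += $chunkSize) {
    $bytesRead = [Math]::Min([long]$chunkSize, [long]($fileSize - $offset))
    Get-UploadCall -Offset $offset -BytesRead $bytesRead -FileSize $fileSize
}
$calls -join ' -> '
```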

“FinishUpload” function

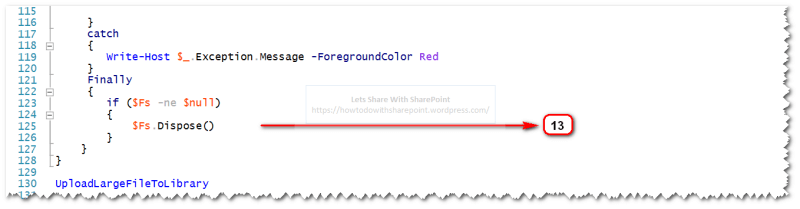

Step 13

Perform exception handling, and then call the “UploadLargeFileToLibrary” function.
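The exception-handling pattern can be shown in isolation as a try/catch/finally block that always releases the file handle. The wrapper name `Invoke-WithFileStream` and its parameters are assumptions made for this sketch. They are not part of the original script.

```powershell
# Hypothetical wrapper: runs an action against an open file stream and always closes the stream.
function Invoke-WithFileStream {
    param(
        [Parameter(Mandatory)] [string]      $Path,
        [Parameter(Mandatory)] [scriptblock] $Action
    )

    $stream = $null
    try {
        $stream = [System.IO.File]::OpenRead($Path)
        & $Action $stream | Out-Null
        return $true
    }
    catch {
        # Report the failure and let the caller decide whether to continue with the next file.
        Write-Host "Upload failed for '$Path': $($_.Exception.Message)" -ForegroundColor Red
        return $false
    }
    finally {
        # Runs on success and on failure, so the file handle is never leaked.
        if ($null -ne $stream) { $stream.Dispose() }
    }
}

# A missing file triggers the catch branch instead of stopping the whole run.
$missing = Join-Path ([System.IO.Path]::GetTempPath()) 'does-not-exist.bin'
$ok = Invoke-WithFileStream -Path $missing -Action { param($s) $s.Length }
"Succeeded: $ok"
```

Returning a flag rather than rethrowing lets a batch run move on to the next file. Whether that suits a given migration is a design choice.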

“UploadLargeFileToLibrary”

I recommend reading the documentation on the Microsoft.SharePoint.Client.File class and understanding its methods carefully before using them.

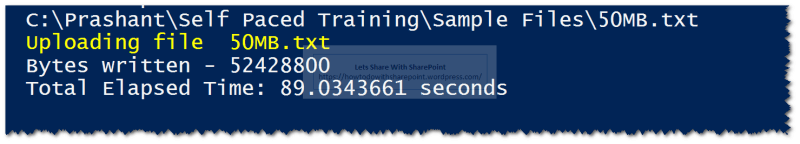

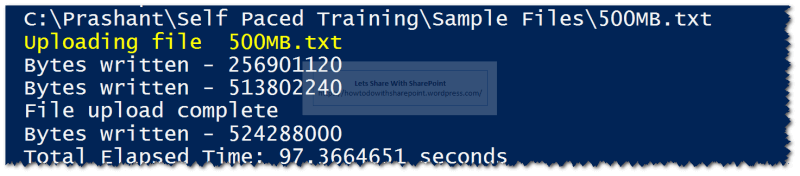

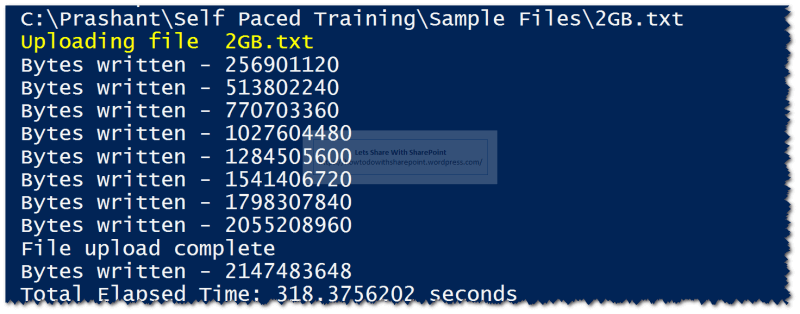

Once we execute this script, we can see the following information:

- File Name to be uploaded

- Chunk size

- Total Time taken to upload the files

It is important to note that total time taken to upload the files may vary depending on the hosting environment.
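To compare environments, it can help to record throughput alongside elapsed time. The sketch below measures a stand-in operation. `Invoke-UploadStub` and the 50 MB size are assumptions for illustration, and the stub only sleeps instead of uploading.

```powershell
# Stand-in for the real upload; it only sleeps briefly so there is something to measure.
function Invoke-UploadStub {
    param([long] $SizeInBytes)
    Start-Sleep -Milliseconds 200
}

$sizeInBytes = 50MB                              # assumed file size for the illustration
$stopwatch   = [System.Diagnostics.Stopwatch]::StartNew()
Invoke-UploadStub -SizeInBytes $sizeInBytes
$stopwatch.Stop()

$seconds  = $stopwatch.Elapsed.TotalSeconds
$mbPerSec = ($sizeInBytes / 1MB) / $seconds

"Elapsed: {0:N2} s, throughput: {1:N1} MB/s" -f $seconds, $mbPerSec
```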

File Size to be uploaded: 10 MB

10MB

File Size to be uploaded: 50 MB

50MB

File Size to be uploaded: 500 MB

500MB

File Size to be uploaded: 2 GB

2GB

Once the script is executed successfully we can see the respective files uploaded to the SharePoint Online Site as shown below,

Product Designs

Here is the complete code.

```powershell
# Assumes $clientContext already holds an authenticated
# Microsoft.SharePoint.Client.ClientContext for the target site.

function UploadLargeFileToLibrary() {
    $docLibraryName = "ProductsDocuments"
    $driveLocation  = "C:\Prashant\Documents to Upload"

    # Pick up files only, not sub-folders
    $filesInRoot = Get-ChildItem -Path $driveLocation | ? { $_.PSIsContainer -eq $False }

    Foreach ($File in $filesInRoot) {
        $fileName = Join-Path $driveLocation $File.Name
        $startDTM = (Get-Date)

        Write-Host $fileName
        Write-Host "Uploading file " $File.Name -ForegroundColor Blue

        UploadLargeFiles $fileName $docLibraryName

        $endDTM = (Get-Date)
        Write-Host "Total Elapsed Time: $(($endDTM-$startDTM).TotalSeconds) seconds"
    }
}

function UploadLargeFiles() {
    param($fileName, $docLibraryName)
    # $fileName = "C:\Prashant\Documents to Upload\1gb.txt";

    # One upload ID identifies the whole chunked upload session for this file
    $UploadId = [GUID]::NewGuid()
    $UniqueFileName = [System.IO.Path]::GetFileName($fileName)

    $Docs = $clientContext.Web.Lists.GetByTitle($docLibraryName)
    $clientContext.Load($Docs)
    $clientContext.Load($Docs.RootFolder)
    $clientContext.ExecuteQuery()

    $fileChunkSizeInMB = 1
    $BlockSize = $fileChunkSizeInMB * 1024 * 1024
    $FileSize = (Get-Item $fileName).Length

    $ServerRelativeUrlOfRootFolder = $Docs.RootFolder.ServerRelativeUrl
    [Microsoft.SharePoint.Client.File] $Upload = $null
    $BytesUploaded = $null
    $Fs = $null

    Try {
        $Fs = [System.IO.File]::Open($fileName, [System.IO.FileMode]::Open, [System.IO.FileAccess]::Read, [System.IO.FileShare]::ReadWrite)

        # A file that fits in a single chunk needs no chunked session: upload it in one request
        if ($FileSize -le $BlockSize) {
            $fileInfo = New-Object Microsoft.SharePoint.Client.FileCreationInformation
            $fileInfo.ContentStream = $Fs
            $fileInfo.Url = $UniqueFileName
            $fileInfo.Overwrite = $true
            $Upload = $Docs.RootFolder.Files.Add($fileInfo)
            $clientContext.Load($Upload)
            $clientContext.ExecuteQuery()
            Write-Host "File upload complete"
            return
        }

        $br = New-Object System.IO.BinaryReader($Fs)
        $buffer = New-Object System.Byte[] $BlockSize
        $lastBuffer = $null
        $fileoffset = 0
        $totalBytesRead = 0
        $bytesRead = 0
        $first = $true
        $last = $false

        while (($bytesRead = $br.Read($buffer, 0, $buffer.Length)) -gt 0) {
            $totalBytesRead = $totalBytesRead + $bytesRead

            if ($totalBytesRead -eq $FileSize) {
                # Last chunk: copy only the bytes that were actually read
                $last = $true
                $lastBuffer = New-Object System.Byte[] $bytesRead
                [array]::Copy($buffer, 0, $lastBuffer, 0, $bytesRead)
            }

            If ($first) {
                # Create an empty file, then start the upload session with the first chunk
                $ContentStream = New-Object System.IO.MemoryStream
                $fileInfo = New-Object Microsoft.SharePoint.Client.FileCreationInformation
                $fileInfo.ContentStream = $ContentStream
                $fileInfo.Url = $UniqueFileName
                $fileInfo.Overwrite = $true
                $Upload = $Docs.RootFolder.Files.Add($fileInfo)
                $clientContext.Load($Upload)

                # $s = [System.IO.MemoryStream]::new($buffer)
                $s = New-Object System.IO.MemoryStream(, $buffer)
                $BytesUploaded = $Upload.StartUpload($UploadId, $s)
                # $BytesUploaded = $Upload.StartUpload($UploadId, $buffer)
                $clientContext.ExecuteQuery()

                # The server reports how many bytes it has received so far
                $fileoffset = $BytesUploaded.Value
                $first = $false
            }
            else {
                # Get a handle on the file created by the first chunk
                $Upload = $clientContext.Web.GetFileByServerRelativeUrl($ServerRelativeUrlOfRootFolder + [System.IO.Path]::AltDirectorySeparatorChar + $UniqueFileName)

                If ($last) {
                    # Final chunk: append it and commit the file
                    # $s = [System.IO.MemoryStream]::new(, $lastBuffer)
                    $s = New-Object System.IO.MemoryStream(, $lastBuffer)
                    $Upload = $Upload.FinishUpload($UploadId, $fileoffset, $s)
                    $clientContext.ExecuteQuery()
                    Write-Host "File upload complete"
                    # return $Upload
                }
                else {
                    # Intermediate chunk: append it and advance the offset
                    # $s = [System.IO.MemoryStream]::new(, $buffer)
                    $s = New-Object System.IO.MemoryStream(, $buffer)
                    $BytesUploaded = $Upload.ContinueUpload($UploadId, $fileoffset, $s)
                    $clientContext.ExecuteQuery()
                    $fileoffset = $BytesUploaded.Value
                }
            }
        }
    }
    Catch {
        Write-Host $_.Exception.Message -ForegroundColor Red
    }
    Finally {
        if ($Fs -ne $null) {
            $Fs.Dispose()
        }
    }
}

# Entry point (run once $clientContext is connected):
# UploadLargeFileToLibrary
```

Hope you find it helpful.

About the Author:

Prashant Bansal has over 12 years of extensive experience in Analysis, Design, Development, Testing, Deployment, & Documentation using various Microsoft technologies with excellent communication skills, problem solving and inter-personal skills with an avid interest in learning and adapting to new technologies.

Professional Certifications:

Microsoft Certifications: Microsoft Certified Solution Associate, Microsoft Certified Solution Developer, SharePoint 2013 Solutions & Apps, Microsoft Specialist, Programming in HTML5 with JavaScript and CSS, Microsoft Certified Professional Developer (MCPD), Designing and Developing Microsoft SharePoint 2010 Applications, Microsoft Certified Technology Specialist (MCTS), SharePoint 2010, Application Development, WCF Application Development, SQL Server 2005, Microsoft Certified Application Developer (MCAD.Net) and Microsoft Certified Professional (MCP)

Software Practices Certifications: Agile Overview Level-1 and Agile Overview Level-2.

Reference:

Bansal, P (2018). SharePoint Online/2016/2013 – How To Upload Large Files Using PowerShell Automation. Available at: https://www.c-sharpcorner.com/article/sharepoint-online20162013-how-to-upload-large-files-using-powershell-automati/ [Accessed 11 July 2018]